Docker is an amazing tool for developers. It allows us to build and replicate images on any host, removing the inconsistencies of dev environments and reducing onboarding timelines considerably.

To provide an example of how you might move to containerized development, I built a simple todo API with NodeJS, Express, and PostgreSQL, and used Docker Compose for development, testing, and eventually my CI/CD pipeline.

In a two-part series, I will cover the development and pipeline creation steps. In this post, I will cover the first part: developing and testing with Docker Compose.

Requirements for This Tutorial

This tutorial requires you to have a few items before you can get started.

- Install Docker Community Edition

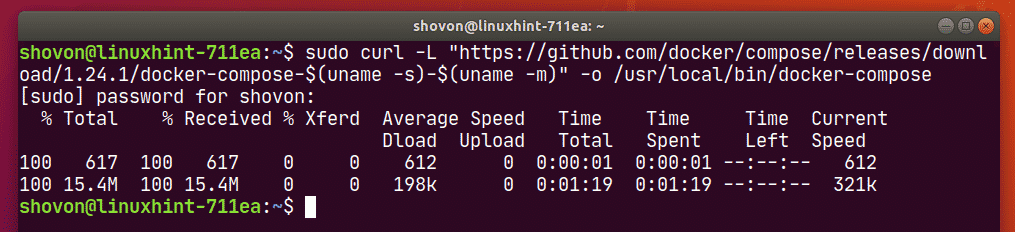

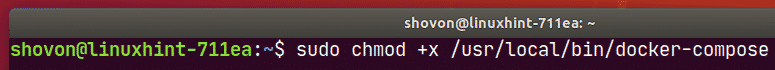

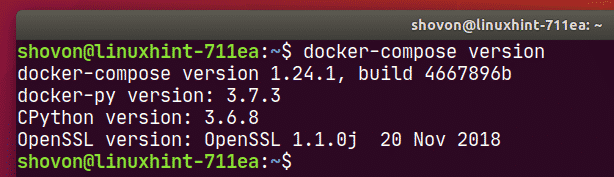

- Install Docker Compose

- Download Todo App Example – Non-Docker branch

The todo app here is essentially a stand-in, and you could replace it with your own application. Some of the setup here is specific to this application, and the needs of your application may not be covered, but it should be a good starting point for learning the concepts needed to Dockerize your own applications.

Once you have everything set up, you can move on to the next section.

Creating the Dockerfile

At the foundation of any Dockerized application, you will find a Dockerfile. The Dockerfile contains all of the instructions used to build out the application image. You could set this up by installing NodeJS and all of its dependencies yourself; however, the Docker ecosystem has an image repository (the Docker Store) with a NodeJS image already created and ready to use.

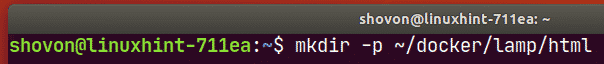

In the root directory of the application, create a new Dockerfile:

/> touch Dockerfile

Open the newly created Dockerfile in your favorite editor. The first instruction, FROM, will tell Docker to use the prebuilt NodeJS image. There are several choices, but this project uses the node:7.7.2-alpine image. For more details about why I’m using alpine here over the other options, you can read this post.

FROM node:7.7.2-alpine

If you run docker build ., you will see something similar to the following:

Sending build context to Docker daemon 249.3 kB

Step 1/1 : FROM node:7.7.2-alpine

7.7.2-alpine: Pulling from library/node

709515475419: Pull complete

1a7746e437f7: Pull complete

662ac7b95f9d: Pull complete

Digest: sha256:6dcd183eaf2852dd8c1079642c04cc2d1f777e4b34f2a534cc0ad328a98d7f73

Status: Downloaded newer image for node:7.7.2-alpine

---> 95b4a6de40c3

Successfully built 95b4a6de40c3

With only one instruction in the Dockerfile, this doesn’t do much, but it does show you the build process in its simplest form. At this point, you have an image created, and running docker images will show you the images you have available:

REPOSITORY TAG IMAGE ID CREATED SIZE

node 7.7.2-alpine 95b4a6de40c3 6 weeks ago 59.2 MB

The Dockerfile needs more instructions to build out the application. Currently it’s only creating an image with NodeJS installed, but we still need our application code to run inside the container. Let’s add some more instructions to do this and build this image again.

This particular Dockerfile uses RUN, COPY, and WORKDIR. You can read more about those on Docker’s reference page to get a deeper understanding.

Let’s add the instructions to the Dockerfile now:

FROM node:7.7.2-alpine

WORKDIR /usr/app

COPY package.json .

RUN npm install --quiet

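COPY . .

Here is what is happening:

- Set the working directory to /usr/app
- Copy the package.json file to /usr/app (copying it on its own, before the rest of the code, lets Docker reuse the cached npm install layer when only source files change)
- Install node_modules
- Copy all the files from the project’s root to /usr/app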

You can now run docker build . again and see the results:

Sending build context to Docker daemon 249.3 kB

Step 1/5 : FROM node:7.7.2-alpine

---> 95b4a6de40c3

Step 2/5 : WORKDIR /usr/app

---> e215b737ca38

Removing intermediate container 3b0bb16a8721

Step 3/5 : COPY package.json .

---> 930082a35f18

Removing intermediate container ac3ab0693f61

Step 4/5 : RUN npm install --quiet

---> Running in 46a7dcbba114

### NPM MODULES INSTALLED ###

---> 525f662aeacf

---> dd46e9316b4d

Removing intermediate container 46a7dcbba114

Step 5/5 : COPY . .

---> 1493455bcf6b

Removing intermediate container 6d75df0498f9

Successfully built 1493455bcf6b

You have now successfully created the application image using Docker. Currently, however, our app won’t do much since we still need a database, and we want to connect everything together. This is where Docker Compose will help us out.

Docker Compose Services

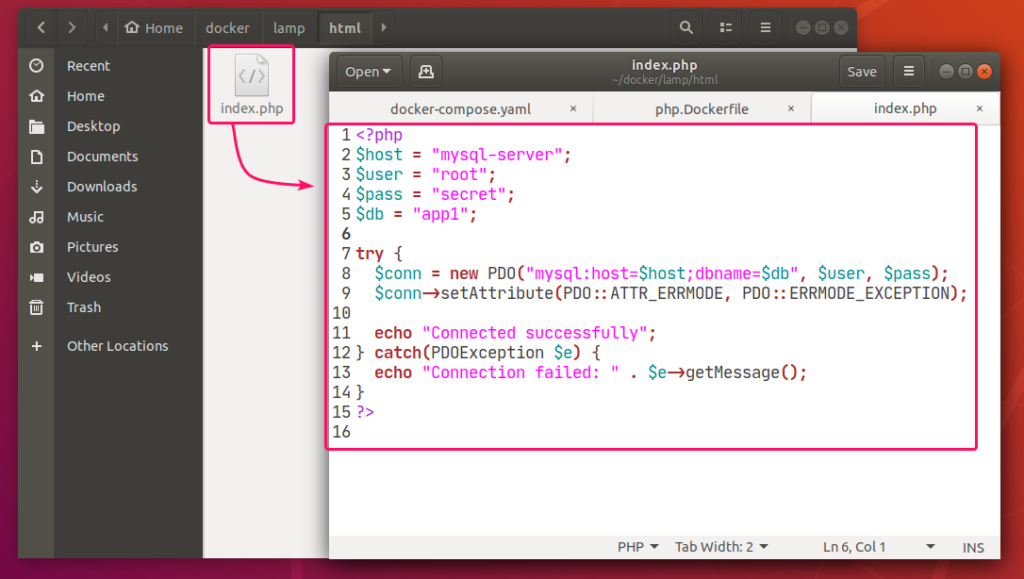

Now that you know how to create an image with a Dockerfile, let’s create an application as a service and connect it to a database. Then we can run some setup commands and be on our way to creating that new todo list.

Create the file docker-compose.yml:

/> touch docker-compose.yml

The Docker Compose file defines our services, and Docker Compose builds and runs their containers based on that configuration. We are using the Compose file version 2 syntax, which is documented on Docker’s site.

An important concept to understand is that Docker Compose spans “buildtime” and “runtime.” Up until now, we have been building images using docker build ., which is “buildtime.” This is when our images are actually built. We can think of “runtime” as what happens once our images are built and containers are started from them and used.

Compose triggers “buildtime” (building our images), but it also populates data used at “runtime,” such as env vars and volumes. This is important to be clear on. For instance, when we add things like volumes and command, they will override the same things that may have been set up via the Dockerfile at “buildtime.”
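To make the buildtime/runtime split concrete, here is a toy model in JavaScript of the precedence described above: a value set on the Compose service wins over a default baked into the image. This is not Compose’s actual merge logic. The imageDefaults values are hypothetical, and webService mirrors the command from the web service in the docker-compose.yml file below.

```javascript
// Toy model only: NOT how Docker Compose really merges configuration.
// It illustrates that runtime settings from Compose take precedence over
// defaults that were baked into the image at buildtime.

// Hypothetical defaults an image might carry after `docker build`.
const imageDefaults = {
  command: "node",        // e.g. a default CMD inherited from a base image
  workingDir: "/usr/app", // set by WORKDIR in our Dockerfile
};

// Runtime setting supplied by the Compose `web` service.
const webService = {
  command: "npm run dev",
};

// Keys present on the service replace the image defaults; the rest fall through.
function effectiveRuntimeConfig(defaults, service) {
  return { ...defaults, ...service };
}

const effective = effectiveRuntimeConfig(imageDefaults, webService);
console.log(effective.command);    // npm run dev
console.log(effective.workingDir); // /usr/app
```

Because the service does not set a working directory, the image’s WORKDIR still applies. Only the keys the service specifies are overridden.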

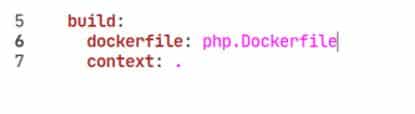

Open your docker-compose.yml file in your editor and copy/paste the following lines:

version: '2'
services:
  web:
    build: .
    command: npm run dev
    volumes:
      - .:/usr/app/
      - /usr/app/node_modules
    ports:
      - "3000:3000"
    depends_on:
      - postgres
    environment:
      DATABASE_URL: postgres://todoapp@postgres/todos
  postgres:
    image: postgres:9.6.2-alpine
    environment:
      POSTGRES_USER: todoapp
      POSTGRES_DB: todos

This will take a bit to unpack, but let’s break it down by service.

The web service

The first directive in the web service is to build the image based on our Dockerfile. This will recreate the image we used before, but it will now be named according to the project we are in, nodejsexpresstodoapp. After that, we are giving the service some specific instructions on how it should operate:

- command: npm run dev – Once the image is built and the container is running, the npm run dev command will start the application.
- volumes: – This section will mount paths between the host and the container.
  - .:/usr/app/ – This will mount the project’s root directory on the host to our working directory in the container, so code changes on the host show up inside the container.
  - /usr/app/node_modules – This creates a separate volume for node_modules, so the modules installed at buildtime are not hidden by the bind mount above.
- environment: – The application itself expects the environment variable DATABASE_URL to run. It is read in db.js.
- ports: – This will publish the container’s port, in this case 3000, to the host as port 3000.
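As a rough illustration of the ports: entry, the short syntax reads as HOST:CONTAINER, and a bare container port lets Docker choose an ephemeral host port. The parsePortMapping function below is a hypothetical, simplified parser (it is not part of Docker or this app) that only handles those two forms.

```javascript
// Minimal sketch of reading Compose's short port syntax.
// Simplified assumption: only "CONTAINER" and "HOST:CONTAINER" forms are handled;
// IP prefixes ("127.0.0.1:3000:3000"), port ranges, and "/udp" suffixes are not.
function parsePortMapping(spec) {
  const parts = String(spec).split(":");
  if (parts.length === 1) {
    // Container port only: an ephemeral host port is chosen at runtime.
    return { host: null, container: Number(parts[0]) };
  }
  if (parts.length === 2) {
    return { host: Number(parts[0]), container: Number(parts[1]) };
  }
  throw new Error(`Unsupported port spec in this sketch: ${spec}`);
}

console.log(parsePortMapping("3000:3000")); // { host: 3000, container: 3000 }
console.log(parsePortMapping("3000"));      // { host: null, container: 3000 }
```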

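The DATABASE_URL is the connection string. postgres://todoapp@postgres/todos connects using the todoapp user, on the host postgres, using the database todos. The hostname postgres resolves because Compose places both services on a shared default network, where each service is reachable by its service name.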
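The application’s db.js isn’t shown in this post, so the following is only a sketch, assuming it reads DATABASE_URL from the environment. readDatabaseConfig is a hypothetical name. It uses Node’s built-in URL class to split the connection string into the parts described above, and it fails fast if the variable is missing.

```javascript
// Hypothetical helper: the real db.js may read DATABASE_URL differently.
function readDatabaseConfig(env) {
  const raw = env.DATABASE_URL;
  if (!raw) {
    // Fail fast with a clear message instead of a confusing connection error later.
    throw new Error("DATABASE_URL is not set");
  }
  const url = new URL(raw); // built-in WHATWG URL parser
  return {
    user: decodeURIComponent(url.username),
    host: url.hostname,
    // PostgreSQL listens on 5432 by default when the URL has no explicit port.
    port: url.port ? Number(url.port) : 5432,
    database: url.pathname.replace(/^\//, ""),
  };
}

// The value Compose injects into the web container:
console.log(readDatabaseConfig({ DATABASE_URL: "postgres://todoapp@postgres/todos" }));
// { user: 'todoapp', host: 'postgres', port: 5432, database: 'todos' }
```

Inside the app you would call it as readDatabaseConfig(process.env). Passing the environment in as a parameter keeps the function easy to test.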

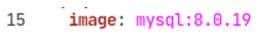

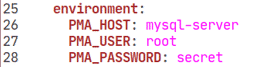

The Postgres service

Like the NodeJS image we used, the Docker Store has a prebuilt image for PostgreSQL. Instead of using a build directive, we can use the name of the image, and Docker will grab that image for us and use it. In this case, we are using postgres:9.6.2-alpine. We could leave it like that, but it has environment variables to let us customize it a bit.

- environment: – This particular image accepts a couple of environment variables so we can customize things to our needs.
  - POSTGRES_USER: todoapp – This creates the user todoapp (with superuser privileges) as the default user for PostgreSQL.
  - POSTGRES_DB: todos – This will create the default database as todos.
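Putting the two services together: the postgres service’s environment variables, plus its service name acting as the hostname, determine the DATABASE_URL the web service needs. The hypothetical databaseUrlFor helper below only illustrates that relationship. Compose does not generate the URL for you, which is why it is written out by hand in docker-compose.yml.

```javascript
// Sketch: derive the web service's DATABASE_URL from the postgres service's settings.
// No password is included because this compose file does not set one.
function databaseUrlFor(serviceName, postgresEnv) {
  const user = encodeURIComponent(postgresEnv.POSTGRES_USER);
  const db = encodeURIComponent(postgresEnv.POSTGRES_DB);
  // The Compose service name doubles as the hostname on the project network.
  return `postgres://${user}@${serviceName}/${db}`;
}

const postgresEnv = { POSTGRES_USER: "todoapp", POSTGRES_DB: "todos" };
console.log(databaseUrlFor("postgres", postgresEnv));
// postgres://todoapp@postgres/todos
```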

Running The Application

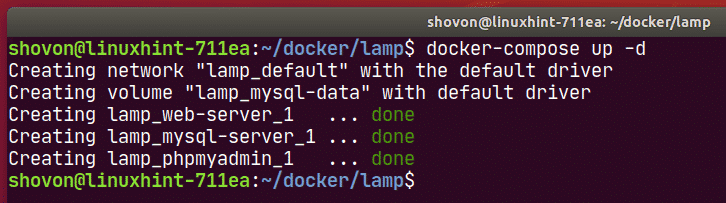

Now that we have our services defined, we can build and start the application using docker-compose up. This will show the images being pulled and built, and then the containers starting. After the initial build, you will see the names of the containers being created:

Pulling postgres (postgres:9.6.2-alpine)...

9.6.2-alpine: Pulling from library/postgres

627beaf3eaaf: Pull complete

e351d01eba53: Pull complete

cbc11f1629f1: Pull complete

2931b310bc1e: Pull complete

2996796a1321: Pull complete

ebdf8bbd1a35: Pull complete

47255f8e1bca: Pull complete

4945582dcf7d: Pull complete

92139846ff88: Pull complete

Digest: sha256:7f3a59bc91a4c80c9a3ff0430ec012f7ce82f906ab0a2d7176fcbbf24ea9f893

Status: Downloaded newer image for postgres:9.6.2-alpine

Building web

...

Creating nodejsexpresstodoapp_postgres_1

Creating nodejsexpresstodoapp_web_1

...

web_1 | Your app is running on port 3000

At this point, the application is running, and you will see log output in the console. You can also run the services as a background process, using docker-compose up -d. During development, I prefer to run without -d and create a second terminal window to run other commands. If you want to run it as a background process and view the logs, you can run docker-compose logs.

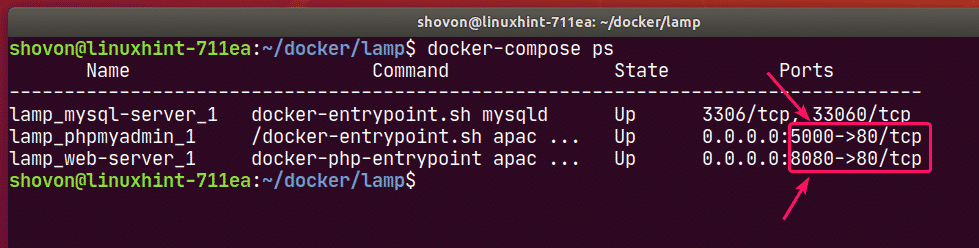

At a new command prompt, you can run docker-compose ps to view your running containers. You should see something like the following:

Name Command State Ports

------------------------------------------------------------------------------------------------

nodejsexpresstodoapp_postgres_1 docker-entrypoint.sh postgres Up 5432/tcp

nodejsexpresstodoapp_web_1 npm run dev Up 0.0.0.0:3000->3000/tcp

This will tell you the name of each container, the command used to start it, its current state, and its ports. Notice that nodejsexpresstodoapp_web_1 lists its port as 0.0.0.0:3000->3000/tcp. This tells us that you can access the application using localhost:3000/todos on the host machine.
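Reading the Ports column: a published port appears as HOST_IP:HOST_PORT->CONTAINER_PORT/PROTOCOL. An entry like 5432/tcp is exposed by the container but not published to the host. The hypothetical parsePsPort function below sketches that distinction, assuming only these two formats appear.

```javascript
// Minimal sketch for one entry of the Ports column in `docker-compose ps`.
// Assumed formats: "0.0.0.0:3000->3000/tcp" (published) or "5432/tcp" (exposed only).
function parsePsPort(entry) {
  const published = entry.match(/^([\d.]+):(\d+)->(\d+)\/(\w+)$/);
  if (published) {
    const [, hostIp, hostPort, containerPort, protocol] = published;
    return { hostIp, hostPort: Number(hostPort), containerPort: Number(containerPort), protocol };
  }
  const exposed = entry.match(/^(\d+)\/(\w+)$/);
  if (exposed) {
    // Not published: reachable from other containers, not from the host.
    return { hostIp: null, hostPort: null, containerPort: Number(exposed[1]), protocol: exposed[2] };
  }
  throw new Error(`Unrecognized port entry: ${entry}`);
}

console.log(parsePsPort("0.0.0.0:3000->3000/tcp").hostPort); // 3000
console.log(parsePsPort("5432/tcp").hostPort);               // null
```

That is why curl localhost:3000/todos reaches the web container, while PostgreSQL is reachable only from other containers in the project.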

/> curl localhost:3000/todos

[]

The package.json file has a script to automatically build the code and migrate the schema to PostgreSQL. The schema and all of the data in the database will persist as long as the postgres container is not removed.

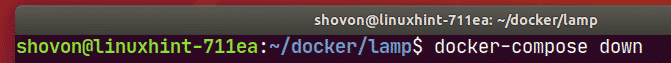

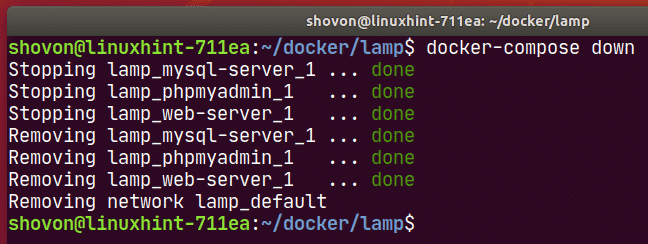

Eventually, however, it would be good to check how your app will build with a clean setup. You can run docker-compose down, which stops and removes the containers and networks created by docker-compose up, so you can see what happens when everything starts fresh.

Feel free to check out the source code, play around a bit, and see how things go for you.

Testing the Application

The application itself includes some integration tests built using jest. There are various ways to go about testing, including creating something like Dockerfile.test and docker-compose.test.yml files specific to the test environment. That’s a bit beyond the scope of this article, but I want to show you how to run the tests using the current setup.

The current containers are running under the project name nodejsexpresstodoapp, which Docker Compose derives by default from the name of the project’s directory. If we run more commands under the same project, they will act on those same containers (including the development database), and that is what we want to avoid.

Instead, we will use a different project name to run the application, isolating the tests in their own environment. Since containers are ephemeral (short-lived), running your tests in a separate set of containers helps confirm that your app behaves as it should in a clean environment.
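To see why a separate project name isolates the tests, here is a small sketch of the container naming pattern from the docker-compose ps output above: <project>_<service>_<index>. (Compose V2 joins the parts with hyphens instead.) containerName is a hypothetical helper. The point is that the tests project gets its own postgres container, and therefore its own database.

```javascript
// Sketch of the naming pattern seen in this post's docker-compose output.
// The same service name under a different project yields a different container.
function containerName(project, service, index = 1) {
  return `${project}_${service}_${index}`;
}

for (const project of ["nodejsexpresstodoapp", "tests"]) {
  console.log(containerName(project, "postgres"));
}
// nodejsexpresstodoapp_postgres_1
// tests_postgres_1
```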

In your terminal, run the following command:

/> docker-compose -p tests run -p 3000 --rm web npm run watch-tests

You should see jest run through the integration tests and then wait for changes.

The docker-compose command accepts several options, followed by a command. In this case, you are using -p tests to run the services under the tests project name. The command being used is run, which will execute a one-time command against a service.

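Note that the second -p, which comes after run, is an option of the run command (publish a port) rather than the project-name option. By default, run does not publish the ports listed in docker-compose.yml (that requires --service-ports), so -p 3000 explicitly publishes the container’s port 3000 on a random host port. That host port cannot collide with port 3000, which the running web service already uses. The --rm option removes the container once the command exits. Finally, npm run watch-tests is the command run in the web service.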

Conclusion

At this point, you should have a solid start using Docker Compose for local app development. In the next part of this series about using Docker Compose for NodeJS development, I will cover continuous integration and deployment of this application using Codeship.

Is your team using Docker in its development workflow? If so, I would love to hear about what you are doing and what benefits you see as a result.

Source: Using Docker Compose for NodeJS Development – via @codeship