\sloppy

1 Network Information Theory (From Cover’s Elements of Information Theory textbook)

A system with many senders and receivers contains many new elements in communications problem: interference, cooperation, and feedback.

These are the issues that are the domain of network information theory. These are the issues that are the domain of network information theory. The general problem is easy to state. Given many senders and receivers and a channel transition matrix that describes the effects of the interference and noise in network, decide whether or not the sources can be transmitted over the channel. The problem involves distributed source coding (data communication) as well as distributed communication (finding the capacity region of the network). This general problem has not yet been solved, so we consider various special cases in this chapter.

Examples of large communication networks include computer networks, satellite networks, and the phone system. Even within a single computer, there are various components that talk to each other. A complete theory of network information would have wide implications for the design of communication and computer networks.

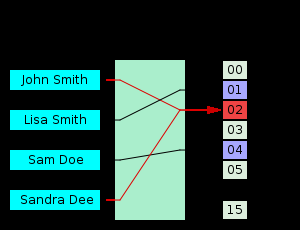

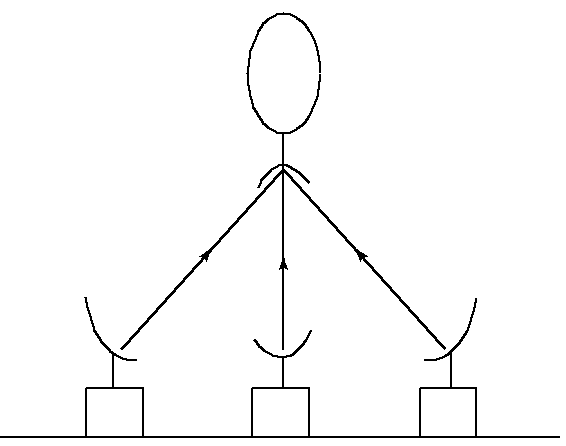

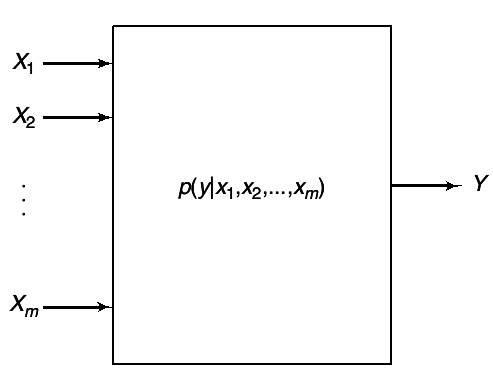

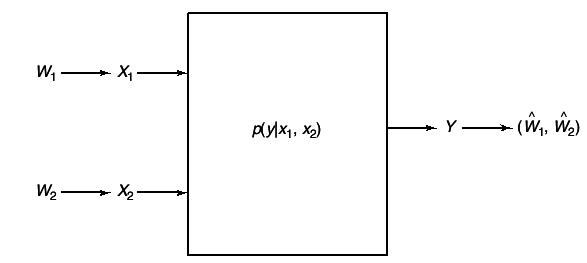

Suppose that

m stations wish to communicate with a common satellite over a common channel, as shown in

1↓. This is known as a

multiple-access channel.

How do the various senders cooperate with each other to send information to the receiver? What rates of communication are achievable simultaneously? What limitations does interference among the senders put on the total rate of communication? This is the best understood mutiuser channel, and the above questions have satisfying answers.

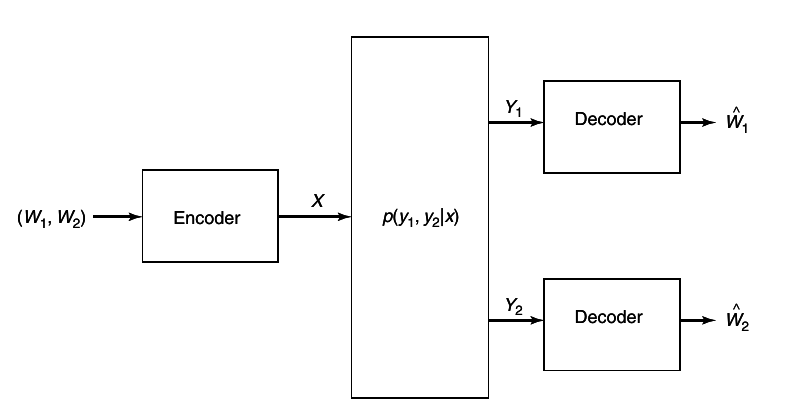

In contrast, we can reverse the network and consider one TV station sending information to

m TV receivers, as in

2↓.

How does the sender encode information meant for different receivers in common signal? For this channel, the answers are known only in special cases. There are other channels, such as the relay channel where there is one source and one destination, but one or more intermediate sender-receiver pairs that achts as relays to facilitate the communications between the source and the destination), the interference channel (two senders and two receivers with crosstalk), and the two-way channel (two sender-receiver pairs sending information to each other). For all these channels, we have only some of the answers to questions about achievable communication rates and the appropriate coding strategies.

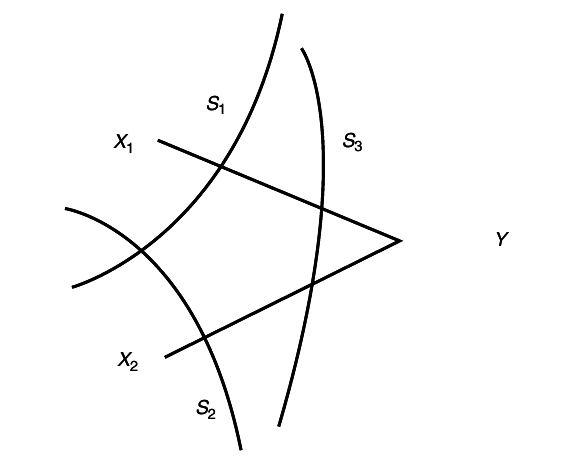

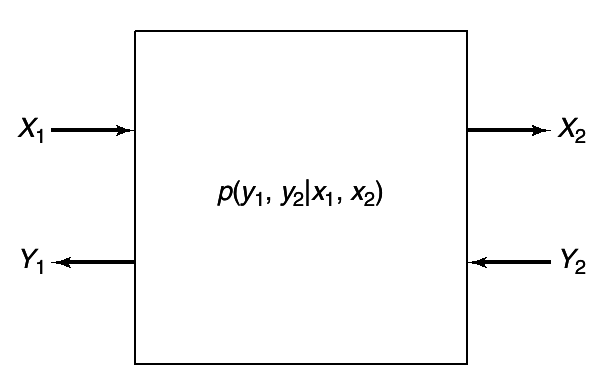

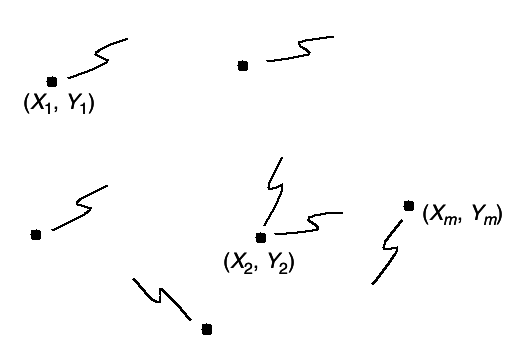

All these channels can be considered special cases of a general communication network that consists of

m nodes trying to communicate with each other, as shown in

3↓.

At each instant of time, the i -th node sends a symbol xi that depends on the messages that it wants to send and the past received symbols at the node. The simultaneous transmission of the symbols (x1, x2, ..., xm) resulsts in random received symbols (Y1, Y2, ...Ym) drawn according to the conditonal probability distribution p(y(1), y(2), ..., y(m)|x(1), x(2), ..., x(m)). Here p(⋅|⋅) expresses the effects of the noise and interference present in the network. If p(⋅|⋅) takes only the values 0 and 1 ,the network is deterministic.

Associated with some of the nodes in the network are stochastic data sources, which are to be communicated to some of the other nodes in the network. If the sources are independent, the messages sent by the nodes are also independent. However, for full generality, we must allow the sources to be dependent. How does one take advantage of the dependence to reduce the amount of information transmitted? Given the probability distribution of the sources and the channel transition function, can one transmit these sources over the channel and recover the sources at the destinations with the appropriate distortion?

We consider various special cases of network communication. We consider the problem of source coding when the channels are noiseless and without interference. In such cases, the problem reduces to finding the set of rates associated with each source such that the required sources can be decoded at the destination with a low probability of error (or appropriate distortion). The simplest case for distributed source coding is the Slepian-Wolf source coding problem, where we have two sources that must be encoded separately, but decoded together at common node. We consider extensions to this theory when only one of the two sources needs to be recovered at the destination.

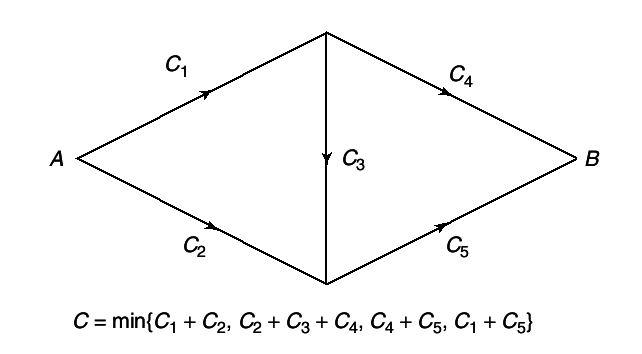

The theory of flow in networks has satisfying answers in such domains as circuit theory and the flow of water in pipes. For example, for the single-source single-sink network of pipes shown in

4↓, the maximum flow form

A to

B can be computed easily from the Ford-Fulerson theorem.

Assume that the edges have capacities Ci as shown. Clearly, the maximum flow across any cut set cannot be greater than the sum of the capacities of the cut edges. Thus minimizing the maximum flow across cut sets yields an upper bound on the capacity of the network. The Ford-Flulkerosn Theorem shows that this capacity can be achieved.

The theory of information flow in networks doesn’t have the sae simple answer as the theory of flow of water in pipes. Allthough we prove an upper bound on the rate of informaion flow across any cut set, these bounds are not achievable in general. However, it is gratifying that some problems, such as the relay channel and the cascade channel, admit a simple max-flow min-cut interpretation. Another subtle problem in the seach for general theory is the absence of a source-channel separation theorem, which we touch on briefly in Section 15.10. A complete theory combining distributed source coding and network channel coding is still distant goal.

In the next section we consider Gaussian examples of some of the basic channels of network information theory. The physically motivated Gaussian channel lends itself to concrete and easily interpreted answers. Later we prove some of the basic results about joint typicality that we use to prove the theorems of multiuser information theory. We then consider various problems in detail: the multiple-access channel, the coding of correlated sources (Slepian-Wolf data compression), the broadcast channel, the relay channel, the coding of a random variable with side information, and the rate distortion problem with side information. We end with an introduction to the general theory of informaion flow in networks. There are a number of open problems in the area, and there does not yet exist a comprehensive theory of information networks. Even if such a theory is found, it may be too complex for easy implementation. But the theory will be able to tell communication designers how close they are to optimality and perhaps suggest some means of improving the communication rates.

1.1 Gaussian Multiple-user channels

Gaussian multiple-user channels illustrate some of the important features of network information theory. The intuition gained in Chapter 9 on the Gaussian channel should make this section a useful introduction. Here the key ideas for establishing the capacity regions of the Gaussian multiple access, broadcast, relay, and two-way channels will be given without proof. The proofs of the coding theorems for the discrete memory-less counterparts to these theorems are given in letter sections of the chapter.

The basic discrete-time additive white Gaussian noise channel with input power P and noise variance N is modeled by:

where the Zi are i.i.d Gaussian random variables with mean 0 and variance N. The signal X = (X1, X2, ..., Xn) has power constraint

The Shannon capacity C is obtained by maximizing I(X;Y) over all random variables X such that EX2 ≤ P and is given by

In this chapter we restrict our attention to discrete-time memoryless channels; the results can be extended to continuous-time Gaussian channels.

1.1.1 Single-User Gaussian Cahnnel

We first review the single-user Gaussian channel studied in Chapter 9. Here Y = X + Z. Chose a rate R < (1)/(2)⋅log⎛⎝1 + (P)/(N)⎞⎠. Fix a good (2nR, n) codebook of power P. Choose an index w in the set 2nR. Send the w-th codeword X(w) from the code-book generated above. The receiver observes Y = X(w) + Z and then finds the index ŵ of the codeword closest to Y. If n is sufficiently large, the probability of error Pr(w ≠ ŵ) will be arbitrarily small. As can be seen form the definition of joint typicality, this minimum-distance decoding scheme is essentially equivalent to finding the codeword in code-book that is jointly typical with the received vector Y.

1.1.2 Gaussian Multiple-Access Channel with m Users

We consider m transmitters each with a power P. Let

Let

denote the capacity of a single-user Gaussian channel with signal to noise ratio (P)/(N) . The achievable rate region for the Gaussian channel takes on the simple form given in the following equations:

Note that when all the rates are the same, the last inequality dominates the others.

Here we need m codebooks, the i-th codebook having 2nRi codewords of power P. Here we need m codebooks, the i-th codebook having 2nRi codewords of power P . Transmission is simple. Each of the independent transmitters chooses an arbitrary codeword form its own codebook. The users send the vectors simultaneously. The receiver sees the codewors added together with the Gaussian noise Z.

Optimal decoding consists of looking for the m codewords, one from each codebook, such that the vector sum is closest to Y in euclidean distance. If (R1, R2, ...Rm) is in the capacity region given above, the probability of error goes to 0 as n tends to infinity.

It is exiting to see in this problem that the sum of the rates of the users C(mP ⁄ N) goes to infinity with m. Thus, in a cocktail party with m celebrants of power P in the presence of ambient noise N, the intended listener receives an unbounded amount of information as the number of people grows to infinity. A similar conclusion holds, of course, for ground communications to a satellite. Apparently, the increasing interference as the number of senders m → ∞ does not limit the total received information.

It is also interesting to note that the optimal transmission scheme here does not involve time-division multiplexing. In fact, each of the transmitters uses all of the bandwidth all of the time.

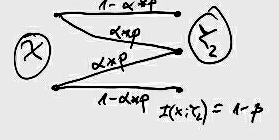

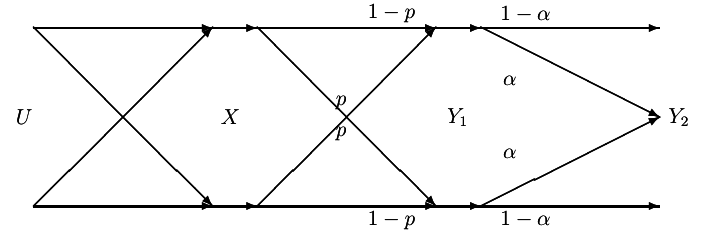

1.1.3 Gaussian Broadcast Channel

Here we assume that we have a sender of power P and two distant receivers, one with Gaussian noise power N1 and the other with Gaussian noise power N2. Without loss of generality, assume that N1 < N2. Thus, receiver Y1 is less noisy than receiver Y2. The model for the channel is Y1 = X + Z1 and Y2 = X + Z2, where Z1 and Z2 are arbitrarily correlated Gaussian random variables with variances N1 and N2, respectively. The sender wishes to send independent messages at rates R1 and R2 to receivers Y1 and Y2, respectively.

Fortunately, all scalar Gaussian broadcast channels belong to the class of degraded broadcast channels discussed in Section 15.6.2. Specializing that work, we find that the capacity region of the Gaussian broadcast channel is:

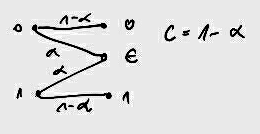

where α may be arbitrarily chosen (0 ≤ α ≤ 1) to trade off rate R1 for rate R2 as transmitter wishes.

To encode the messages, the transmitter generates two codebooks, one with power αP at rate R1, and another codebook with power αP

α = 1 − α

at rate R2, where R1 and R2 lie in the capacity region above. Then to send an index w1 ∈ {1, 2, ...2nR1} and w2 ∈ {1, 2, ..2nR2} to Y1 and Y2, respectively, the transmitter takes the codeword X(w1) from the first codebook and codeword X(w2) from the second codebook and computes the sum. He sends the sum over the channel.

The receivers must now decode the messages. First consider the bad receiver Y2. He merely looks through the second codebook to find the closest codeword to the received vector Y2. His effective signal-to-noise ratio is αP ⁄ (αP + N2), since Y1 's message acts as noise to Y2. (This can be proved).

The good receiver Y1 first decodes Y2 's codeword, which he can accomplish because of his lower noise N1. He subtracts this codeword X̂2 from Y1. He then looks for the codeword in the first codebook closest to Y1 − X̂2. The resulting probability of error can be made as low as desired.

A nice dividend of optimal encoding for degraded broadcast channels is that the better receiver Y1 always knows the message intended for receiver Y2 in addition to the message intended for himself.

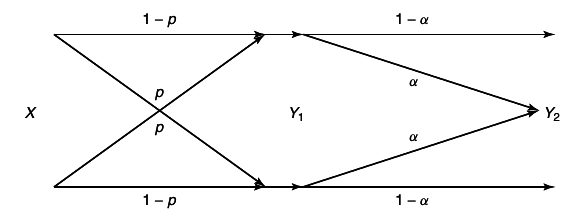

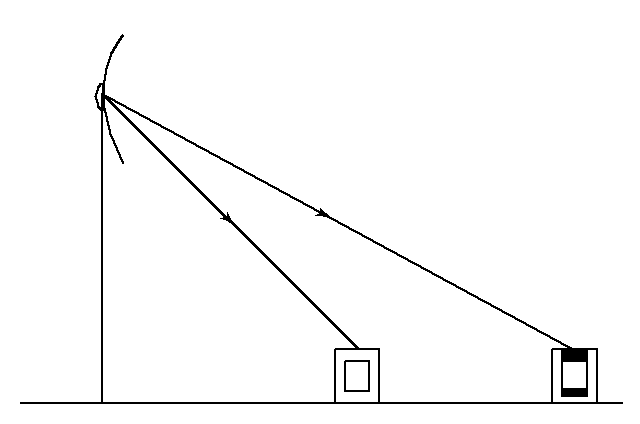

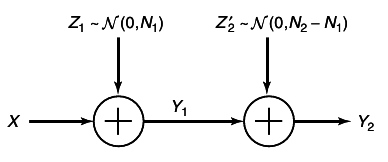

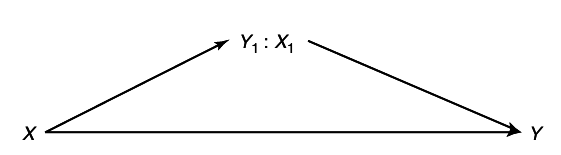

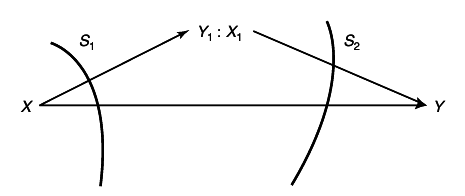

1.1.4 Gaussian Relay Channel

For the relay channel, we have a sender

X and an ultimate intended receiver

Y. Also present is the relay channel, intended solely to help the receiver. The Gaussian relay channel

5↓ is given by

where Z1 and Z2 are independent zero-mean Gaussian random variables with variance N1 and N2, respectively. The encoding allowed by the relay is the causal sequence

Sender X has power P and sender X1 has power P1. The capacity is

where α = 1 − α. Note that if

(P + P1 + 2√(αPP1))/(N1 + N2) = ((√(αP) + √(P1))2)/(N1 + N2) = (√((αP)/(N1 + N2)) + √((P1)/(N1 + N2)))2 =

= ||P1 ≥ (N2P)/(N1), || ≥ (√((αP)/(N1 + N2)) + √((N2P)/(N1(N1 + N2))))2 =

= (αP⋅N1)/(N1(N1 + N2)) + (N2P)/(N1(N1 + N2)) + 2√((αP)/(N1 + N2)⋅(N2P)/(N1(N1 + N2))) = ((αN1 + N2)P)/(N1(N1 + N2)) + 2(P√(αN1N2))/(N1(N1 + N2)) =

= ((αN1 + N2)P + 2P√(αN1N2))/(N1(N1 + N2)) = ((√(αN1) + √(N2))2P)/(N1 + N2) ≥ |α = 1| ≥ (N1 + 2√(N1N2) + N2)/(N1(N1 + N2))⋅P = ⎛⎝1 + 2(√(N1N2))/(N1 + N2)⎞⎠(P)/(N1) ≥ (P)/(N1)

It can be seen that C = C(P ⁄ N1),

Доказот е во Box-от погоре!!!

which is achieved by α = 1. The channel appears to be noise-free after the relay, and the capacity C(P ⁄ N1) from X to the relay can be achieved. Thus, the rate C(P ⁄ (N1 + N2)) without the relay is increased by the presence of the relay to C(P ⁄ N1). For large N2 and for P1 ⁄ N2 ≥ P ⁄ N1, we see that the increment in rate is form C(P ⁄ N1 + N2) ~ 0 to C(P ⁄ N1).

Let R1 < C⎛⎝(αP)/(N1)⎞⎠. Two codebooks are needed. The first codebook has 2nR1 words of power αP. The second has 2nR0 codewords of power αP. We shall use codewords form these codebooks successively to create the opportunity for cooperation by the relay. We start by sending a codeword from the first codebook. The relay now knows the index of this codeword since R1 < C(αP ⁄ N1), but the intended receiver has a list of possible codewords of size 2n(R1 − C(αP ⁄ (N1 + N2)))

Не контам од каде излегуа ова!? Ска да каже дека реалниот капацитет е разлика меѓу капацитетот меѓу предавателот и релето и капацитетот кога не би постоело релето.

. This list calculation involves a result on list codes.

M = 2nR; nR = logM; R = (1)/(n)logM; R ≤ C; (1)/(n)logM ≤ C; M ≤ 2nC

In the next block, the transmitter and the relay wish to cooperate to resolve the receiver’s uncertainty about the codeword sent previously that is on the receiver’s list. Unfortunately, they cannot be sure what this list is because they do not know the received signal Y. Thus, they randomly partition the first codebook into 2nR0 cells with and equal number of codewords in each cell. The relay, the receiver, and the transmitter agree on this partition. The relay and the transmitter find the cell of the partiotion in which the codeword from the first codebook lies and cooperatively send the codeword form second codebook with that index. That is, X and X1 send the same designated codeword. The relay, of course, must scale this codeword so that it meet his power constraint P1. They now transmit their codewords simultaneously. An important point to note here is that the cooperative information sent by the relay and the transmitter is sent coherently. So the power of the sum as seen by the receiver Y is (√(α)P + √(P1))2.

However, this does not exhaust what the transmitter does in the second block. He also chooses a fresh codeword from the first codebook, adds it „on paper” to the cooperative codeword form the second codebook, and sends the sum over the channel. The reception by the ultimate receiver Y in the second block involves first finding the cooperative index from the second codebook by looking for the closest codeword in the second codebook. He subtracts the codeword form the received sequence and then calculates a list of indices of size 2nR0 corresponding to all codewords of the first codebook that might have been sent in the second block.

Now it is time for the intended receiver to complete computi9ng the codeword form the first codebook sent in the first blcok. He takes his list of possible codewords that might have been sent in the first block and intersects it with the cell of the partition that he has learned form the cooperative relay transmission in the second block. The rates and powers have been chosen so that there is only one codeword in the intersection. This is Y’s guess about the information sent in the first block.

We are now in steady state. In each new block, the transmitter and the relay cooperate to resolve the list uncertainty form the previous block. In addition, the transmitter superimposes some fresh information from his first codebook to thistransmission form the second codebook and transmits the sum. The receiver is always one block behind, but for sufficiently many blocks, this does not affect his overall rate of reception.

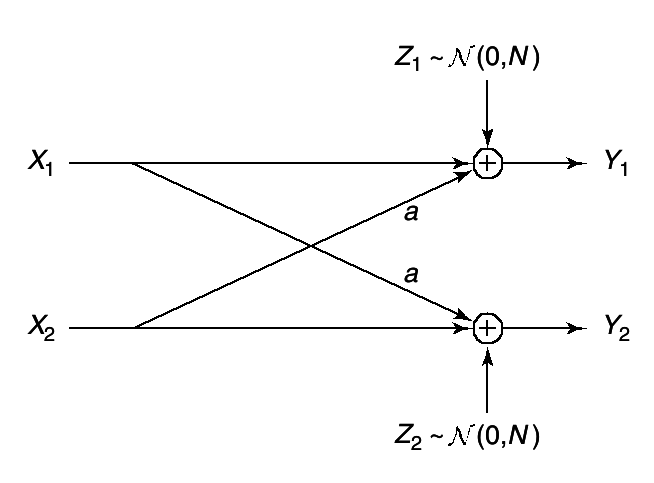

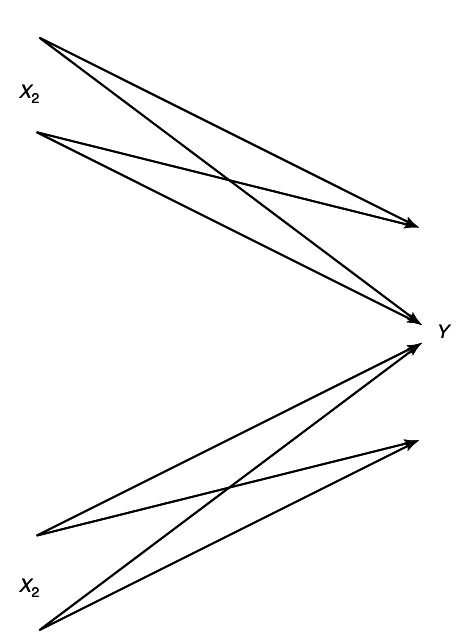

1.1.5 Gaussian Interference Channel

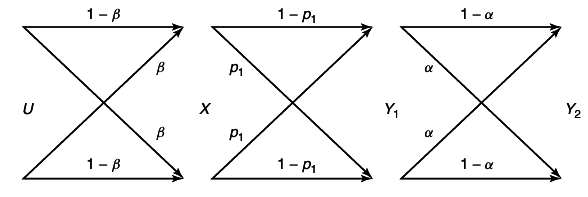

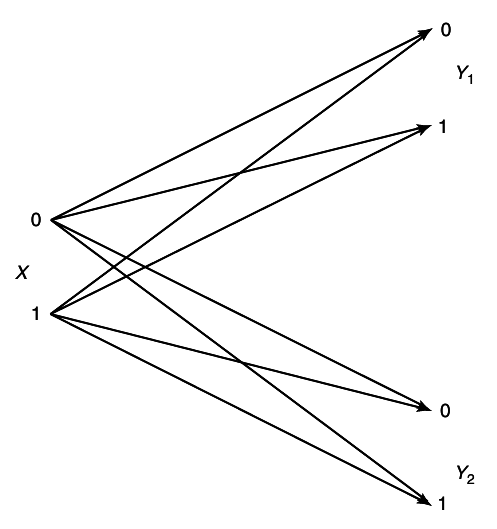

The interference channel has two senders and two receivers. Sender 1 wishes to send information to receiver 1. He does not care what receiver 2 receives or understands; similarly with sender 2 and receiver 2. Each channel interferences with the other. This channel is illustrated in

6↓.

It is not quite a broadcast channel since there is only one intended receiver for each sender, nor is it a multiple access channel because each receiver is only interested in what is being sent by the corresponding transmitter.

For symmetric interference, we have

where Z1, Z2 are independent N(0, N) random variables. This channel has not been solved in general even in Gaussian case. But remarkably, in the case of high interference, it can be shown that the capacity region of this channel is the same as if there were no interference whatsoever.

To achieve this, generate two codebooks, each with power P and rate C(P|N). Each sender independently chooses a word from his book and sends it. Now, if the interference a satisfies C(a2P ⁄ (P + N)) > C(P ⁄ N), the first transmitter understands perfectly the index of second transmitter. He finds it by the usual technique of looking for the closest codeword to his received signal. Once he finds this signal, he subtracts it form his waveform received. Now there is a clean channel between him and his sender. He then searches the sender’s codebook to find the closest codeword and declares that codeword to be the one sent.

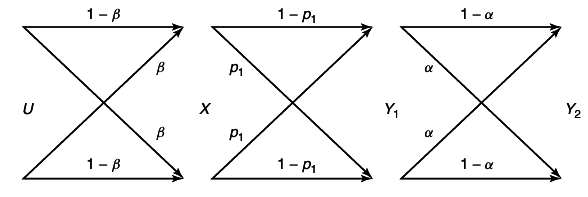

1.1.6 Gaussian Two-way channel

The two-way channel is very similar to the interference channel, with the additional provision that sender 1 is attached to receiver 2 and sender 2 is attached to receiver 1, as shown in

7↓. Hence, sender 1 can use information from previous received symbols of receiver 2 to decide what to send next. This channel introduces another fundamental aspect of network information theory: namely feedback. Feedback enables the senders to use the partial information that each has about the other’s message to cooperate with each other.

The capacity region of the two-way channel was considered by Shannon

[3], who derived upper and lower bounds on the region (Seе Problem 15.15). For Gaussian channels, these two bounds coincide and the capacity region is known; in fact, the Gausisian two-way channel decomposes into two independent channels.

Let P1 and P2 be the powers of transmitters 1 and 2, respectively, and let N1 and N2 be the noise variances of the two channels. Then the rates R1 < C(P1|N1) and R2 < C(P2|N2) can be achieved by the techniques described for the interference channel. In this case, we generate two codebooks of rates R1 and R2. Sender 1 sends a code-word from the first codebook. Receiver 2 receives the sum of the codewords sent by the two senders plus some noise. He simply subtracts out the code out the codeword of sender 2 and he has a clean channel form sender 1 (with only the noise of variance N1). Hence the two-way Gaussian channel decomposes into two independent Gaussian channels. But this is not the case for the general two-way channel; in general, there is a trade-off between the two senders so that both of them cannot send at the optimal rate at the same time.

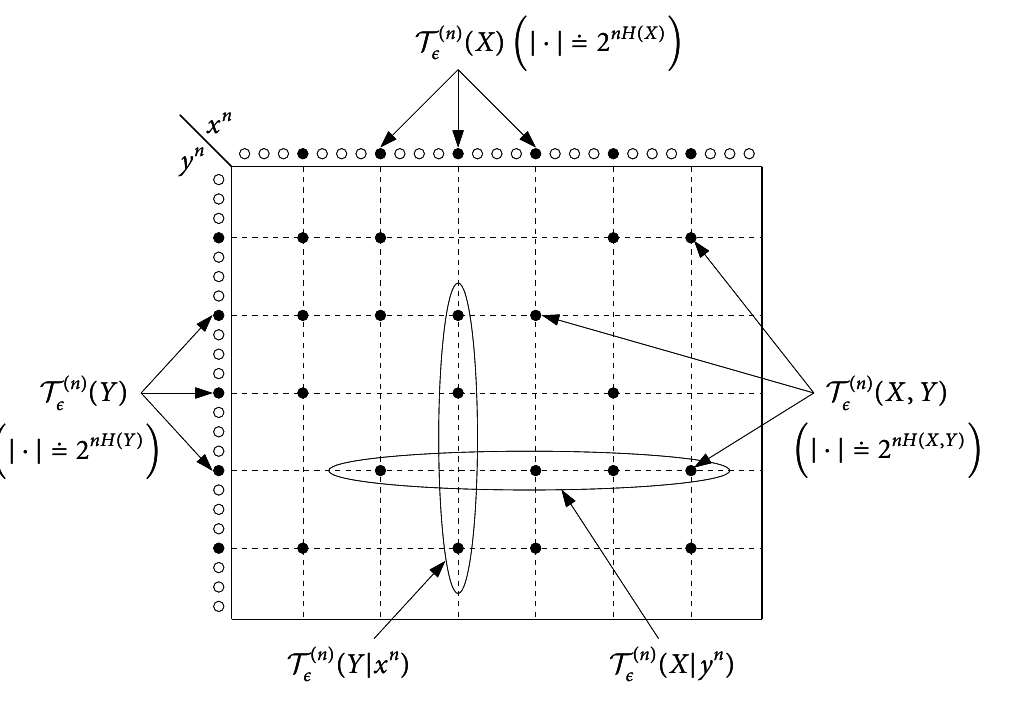

1.2 Jointly Typical sequences

We have previewer the capacity results for networks by considering multiuser Gaussian channels. We began a more detailed analysis in this section, where we extend the joint AEP provided in Chapter 7 to a form that we will use to prove the theorems of network information theory. The joint AEP will enable us to calculate the probability of error for jointly typical decoding for the various coding schemes considered in this chapter.

Let (X1, X2, ..., Xk) denote a finite collection of discrete random variables with some fixed joint distribution, p(x(1), x(2), ..., x(k)), (x(1), x(2), ..., x(k)) ∈ X1 xX2 x...xXk. Let S denote an ordered subset of these random variables and consider n independent copies of S. Thus,

For example, if S = (Xj, Xl), then

Pr{S = s} = Pr{(Xj, Xl) = (xj, xl)} = n∏i = 1p(xij, xil)

X1 ∈ (0, 1), p(X1) = ⎧⎩(1)/(2), (1)/(2)⎫⎭; X2 ∈ (0, 1), p(X2) = ⎧⎩(1)/(2), (1)/(2)⎫⎭;

n = 3

S = (X1, X2)

Pr{S = s} = Pr{(X1, X2) = ( x1, x2) = (x11x21x31, x12x22x32)} = ∏3i = 1p(xi1, xi2) =

= p(x11, x12)p(x21x22)p(x31x32)

To be explicit, e will sometimes use X(S) for S. By the law of large numbers, for any subset S of random variables,

where the convergence takes place with probability 1 for all 2k subsets S ⊆ {X(1), X(2), ..., X(k)}.

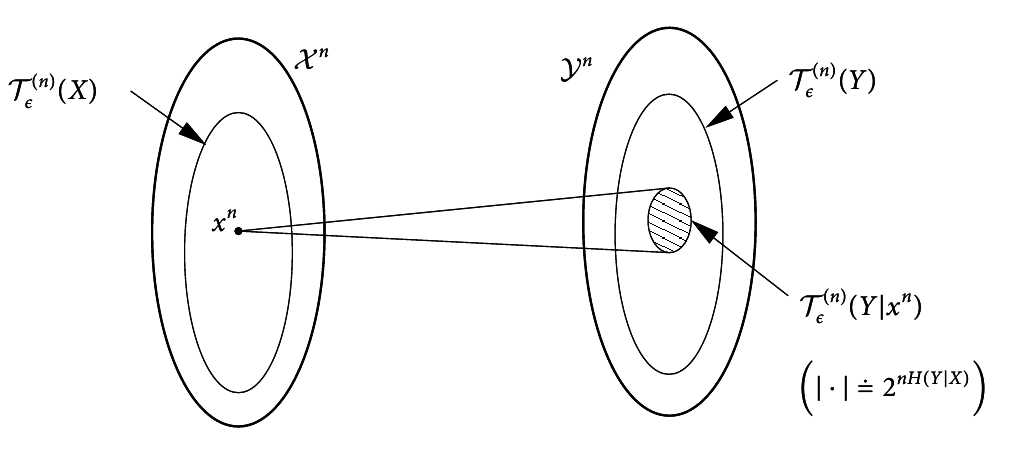

Definition (

ϵ-typical

n-sequences)

The set A(n)ϵ of ϵ-typical n-sequences (x1, x2, ..., xk) is defined by:

A(n)ϵ(X(1), X(2), ..., X(k)) = A(n)ϵ = ⎧⎩(x1, x2, ..., xk):|| − (1)/(n)logp(s) − H(S)|| < ϵ, ∀S ⊆ {X(1), X(2), ..., X(k)}⎫⎭

Аналогијата е дека X(1) одговара на Xn, a X(2) на Yn и така натака...

Let A(n)ϵ(S) denote the restriction of A(n)ϵ to the coordinates of S. Thus, if S = (X1, X2), we have

A(n)ϵ(X1, X2) = ⎧⎩(x1, x2):|| − (1)/(n)logp(x1, x2) − H(X1, X2)|| < ϵ, || − (1)/(n)logp(x1) − H(X1)|| < ϵ, || − (1)/(n)logp(x2) − H(X2)|| < ϵ⎫⎭

We will use the notation αn≐2n(b±ϵ) to mean that

for n sufficiently large.

Внимавај нема − пред (1)/(n) како во стандардната дефиниција на AEP

For any

ϵ > 0, for sufficiently large

n,

2. s ∈ A(n)ϵ(S) ⇒ p(s)≐2 − n(H(S)±ϵ).

3. \mathchoice|A(n)ϵ(S)|≐2n(H(S)±2ϵ).|A(n)ϵ(S)|≐2n(H(S)±2ϵ).|A(n)ϵ(S)|≐2n(H(S)±2ϵ).|A(n)ϵ(S)|≐2n(H(S)±2ϵ).

1. This follows form the law of large numbers for the random variable in the definition A(n)ϵ(S).

2. This follows directly from the definition of A(n)ϵ(S).

3. This follows form

→ |A(n)ϵ| ≤ 2 + n(H(S) + ϵ)

→ |A(n)ϵ| ≥ (1 − ϵ)2 + n(H(S) − ϵ)

(1 − ϵ)⋅2n(H(S) − ϵ) ≤ |A(n)ϵ| ≤ 2n(H(S) + ϵ)

Combining

25↑ and

24↑ we have

\mathchoice|A(n)ϵ|≐2n(H(S1)±2ϵ)|A(n)ϵ|≐2n(H(S1)±2ϵ)|A(n)ϵ|≐2n(H(S1)±2ϵ)|A(n)ϵ|≐2n(H(S1)±2ϵ) for sufficiently large

n.

(1 − ϵ)⋅2 + n(H(S) − ϵ) ≤ |A(n)ϵ| ≤ 2n(H(S) + ϵ) 2 + n(H(S) − ϵ) − ϵ⋅2 + n(H(S) − ϵ) ≤ |A(n)ϵ| ≤ 2n(H(S) + ϵ)

2 + n(H(S) − ϵ) ≤ |A(n)ϵ| + ϵ⋅2 + n(H(S) − ϵ) ≤ 2n(H(S) + ϵ)

\mathchoice||(1)/(n)logan − b|| < ϵ αn≐2n(b±ϵ);||(1)/(n)logan − b|| < ϵ αn≐2n(b±ϵ);||(1)/(n)logan − b|| < ϵ αn≐2n(b±ϵ);||(1)/(n)logan − b|| < ϵ αn≐2n(b±ϵ); − ϵ < − (1)/(n)logan − b < ϵ b − ϵ < − (1)/(n)logan < b + ϵ

n⋅(b − ϵ) < − logan < n(b + ϵ)

− n(b − ϵ) > logan > − n(b + ϵ) 2 − n(b + ϵ) ≤ an ≤ 2 + n(b − ϵ)

(1 − ϵ)⋅2n(H(S) − ϵ) ≤ |A(n)ϵ| ≤ 2n(H(S) + ϵ)

log(1 − ϵ) + n(H(S) − ϵ) ≤ log|A(n)ϵ| ≤ n(H(S) + ϵ)

(1)/(n)log(1 − ϵ) + (H(S) − ϵ) ≤ (1)/(n)log|A(n)ϵ| ≤ (H(S) + ϵ)

——————————————————————————–——————————————————————————–——————————

− (1)/(n)log(1 − ϵ) − (H(S) − ϵ) ≥ − (1)/(n)log|A(n)ϵ| ≥ − H(S) − ϵ;

− (1)/(n)log(1 − ϵ) − ϵ ≥ − (1)/(n)log|A(n)ϵ| ≥ − H(S) − ϵ

——————————————————————————–——————————————————————————–——————————

\cancelto − ϵ(1)/(n)log(1 − ϵ) − ϵ ≤ (1)/(n)log|A(n)ϵ| − H(S) ≤ ϵ

-2ϵ ≤ (1)/(n)log|A(n)ϵ| − H(S) ≤ ϵ \strikeout off\uuline off\uwave offAко важи за ϵ ќе важи и за2ϵ

− 2ϵ ≤ (1)/(n)log|A(n)ϵ| − H(S) ≤ 2ϵ → ||(1)/(n)log|A(n)ϵ| − H(S)|| ≤ 2ϵ → |A(n)ϵ|≐2n(H(S)±2ϵ)

Dокажано!!!

4. Let S1, S2 ⊆ {X(1), X(2), ..., X(k)}If (s1, s2) ∈ A(n)ϵ(S1, S2)then p(s1|s2) = 2 − n(H(S1|S2)±2ϵ)

For (s1, s2) ∈ A(n)ϵ(S1, S2) we have p(s1)≐2 − n(H(S1)±ϵ) and p(s1, s2)≐2 − n(H(S1S2)±ϵ) hence

p(s2| s1) = (p(s1, s2))/(p(s1)) = (2 − n(H(S1S2)±ϵ))/(2 − n(H(S1)±ϵ))≐2 − n(H(S1S2) − H(S1)±ϵ)≐2 − n(H(S2|S1)±2ϵ)

The next theorem bounds the number of conditionally typical sequences for a given typical sequence.

Let S1S2 be two subsets of X(1), X(2), ..., X(k). For any ϵ > 0, define A(n)ϵ to be the set of s1 sequences that are jointly ϵ-typical with a particular s2 sequence. If s2 ∈ A(n)ϵ(S2), then for sufficiently large n, we have

\mathchoice|A(n)ϵ(S1| s2)| ≤ 2n(H(S1|S2) + 2ϵ)|A(n)ϵ(S1| s2)| ≤ 2n(H(S1|S2) + 2ϵ)|A(n)ϵ(S1| s2)| ≤ 2n(H(S1|S2) + 2ϵ)|A(n)ϵ(S1| s2)| ≤ 2n(H(S1|S2) + 2ϵ)

and

\mathchoice(1 − ϵ)2n(H(S1|S2) − 2ϵ) ≤ ⎲⎳s2p(s2)|A(n)ϵ(S1| s2)|(1 − ϵ)2n(H(S1|S2) − 2ϵ) ≤ ⎲⎳s2p(s2)|A(n)ϵ(S1| s2)|(1 − ϵ)2n(H(S1|S2) − 2ϵ) ≤ ⎲⎳s2p(s2)|A(n)ϵ(S1| s2)|(1 − ϵ)2n(H(S1|S2) − 2ϵ) ≤ ⎲⎳s2p(s2)|A(n)ϵ(S1| s2)|

As in part 3 of Theorem 15.2.1, we have

If

n is sufficiently large we, can argue form

22↑ that

1 − ϵ ≤ \mathchoice ⎲⎳s2p(s2) ⎲⎳s1 ∈ Anϵ(S1| s2)p(s2| s1) ⎲⎳s2p(s2) ⎲⎳s1 ∈ Anϵ(S1| s2)p(s2| s1) ⎲⎳s2p(s2) ⎲⎳s1 ∈ Anϵ(S1| s2)p(s2| s1) ⎲⎳s2p(s2) ⎲⎳s1 ∈ Anϵ(S1| s2)p(s2| s1) ≤ ⎲⎳s2p(s2) ⎲⎳s1 ∈ Anϵ(S1| s2)2 − n(H(S1|S2) − 2ϵ) = ⎲⎳s2p(s2)⋅A(n)ϵ(S1| s2)⋅2 − n(H(S1|S2) − 2ϵ)

= 2 − n(H(S1|S2) − 2ϵ)⋅ ⎲⎳s2p(s2)⋅A(n)ϵ(S1| s2)

To calculate the probability of decoding error, we need to know the probability that conditionally independent sequences are jointly typical. Let S1S2, and S3 be three subsets of {X(1), X(2), ...X(k)}. If S’1 and S’2 are conditionally independent given S3’ but otherwise share the same pairwise marginals of (S1, S2, S3) we have the following probability of joint typicality.

Let A(n)ϵ denote the typical set for the probability mass function p(s1s2s3) and let

Then

P{S1’, S2’, S3’ ∈ A(n)ϵ}≐2 − n(I(S1;S2|S3)±6ϵ)

We use the

≐ notation form

21↑ to avoid calculating the upper and lower bounds separately. We have

P{(S1’, S2’, S3’) ∈ A(n)ϵ} = ⎲⎳(s1, s2, s3) ∈ A(n)ϵp(s3)p(s1|s3)p(s2|s3)≐|A(n)ϵ(S1S2S3)|2 − n(H(S3)±2ϵ)2 − n(H(S1|S3)±2ϵ)2 − n(H(S2|S3)±2ϵ)

≐2n(H(S1S2S3)±ϵ) − n(H(S3)±ϵ) − n(H(S1|S3)±2ϵ) − n(H(S2|S3)±2ϵ)≐2 − n(I(S1;S2|S3)±6ϵ)

Во книгата е 2 − n(I(S1;S2|S3)±6ϵ) но мене ми излегува со 2ϵ затоа што ги земам во предвид промените на знаците на ± . Ако не се земат во предвид тие промени тогаш се добива 6ϵ.

n(H(S1S2S3)±ϵ) − n(H(S3)±ϵ) − n(H(S1|S3)±2ϵ) − n(H(S2|S3)±2ϵ) =

n[H(S1S2S3)±ϵ − H(S3)∓ϵ − H(S1|S3)∓2ϵ − H(S2|S3)∓2ϵ]

n[H(S1S2S3) − H(S3) − H(S1|S3) − H(S2|S3)] = (*)

I(S1;S2|S3) = H(S1|S3) − H(S1|S2S3)

H(S1S2S3) = H(S3) + H(S2|S3) + H(S1|S2S3)

(*) = n[\cancelH(S3) + \cancelH(S2|S3) + H(S1|S2S3) − \cancelH(S3) − H(S1|S3) − \cancelH(S2|S3)] = − n⋅I(S1;S2|S3)

Ако се земат во предвид проментие на знаците во ± тогаш:

n[H(S1S2S3)±ϵ − H(S3)∓ϵ − H(S1|S3)∓2ϵ − H(S2|S3)∓2ϵ] = − n⋅(I(S1;S2|S3)±4ϵ)

Ако не се земат во предвид промените на знаците во ± тогаш:

n[H(S1S2S3)±ϵ − H(S3)±ϵ − H(S1|S3)±2ϵ − H(S2|S3)±2ϵ] = − n⋅(I(S1;S2|S3)±6ϵ)

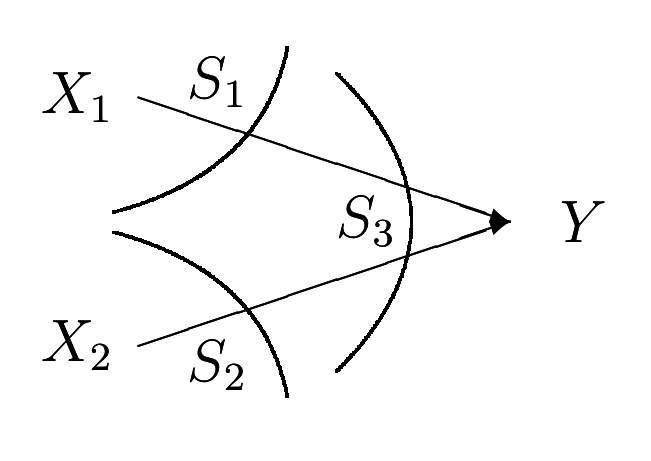

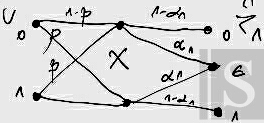

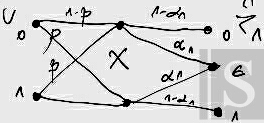

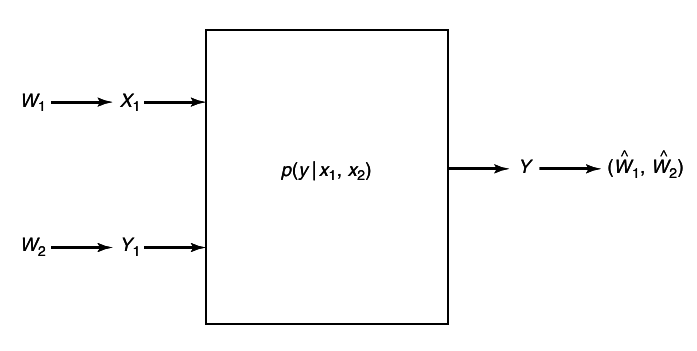

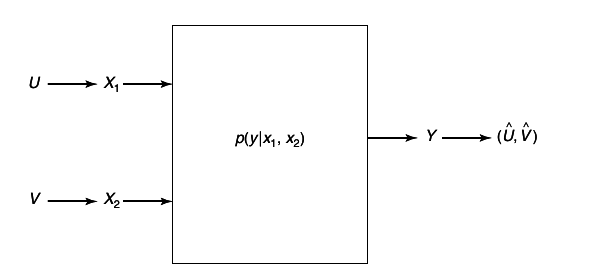

1.3 Multiple-access channel

The fist channel that we examine in detail is the multiple-access channel, in which two (or more) senders send information to a common receiver. The channel is illustrated in

10↓.\begin_inset Separator latexpar\end_inset

A common example of this channel is a satellite receiver with many independent ground stations, or a set of cell phones communicating with a base station. We see that the senders must contend not only with the receiver noise but with interference from each other as well.

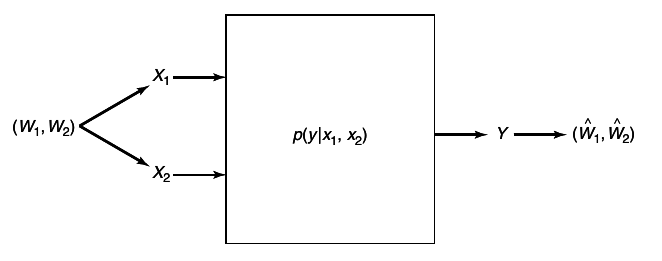

A discrete memory-less multiple-access channel consists of three alphabets , X1, X2 and Y, and a probability transition matrix p(y|x1, x2).

Definition (average probability of error)

A ((2nR12nR2), n) code for the multiple-access channel consists of two sets of integers W1 = {1, 2, ..., 2nR1} and W2 = {1, 2, ...2nR2} called message sets, two encoding functions,

and

and a decoding function,

There are two senders and one receiver for this channel. Sender 1 chooses an index W1 uniformly form the set {1, 2, ..., 2nR1} and sends the corresponding codeword over the channel. Sender 2 does likewise. Assuming that the distribution of messages over the product set W1 xW2 is uniform (i.e. the messages are independent and equally likely), we define the average probability of error for the ((2nR1, 2nR2), n) code as follows:

P(n)e = (1)/(2n(R1 + R2))⋅ ⎲⎳(w1, w2) ∈ W1 xW2Pr{g(Yn) ≠ (w1w2)|(w1w2) sent}

Definition (achievable rate pair)

A rate pair (R1, R2) is said to be achievable for the multiple access channel if there exists a sequence of ((2nR1, 2nR2), n) codes with P(n)ϵ → 0.

The capacity region of the multiple-access channel is the closure of the set of achievable (R1R2) rate pairs.

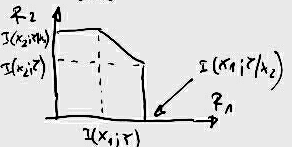

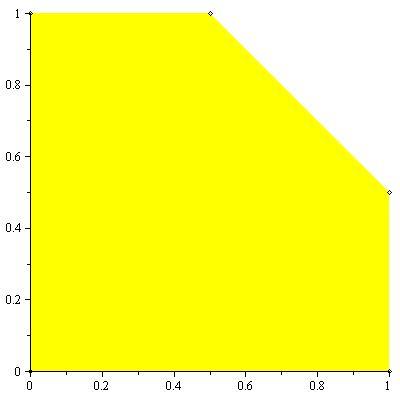

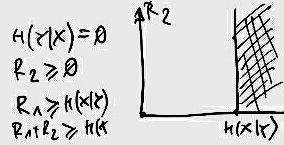

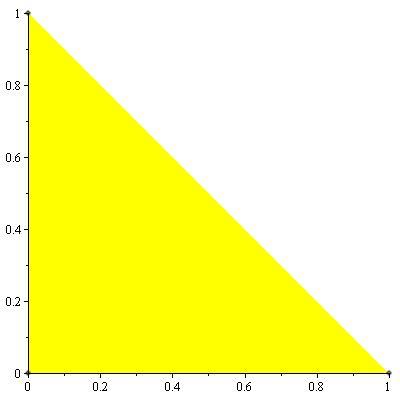

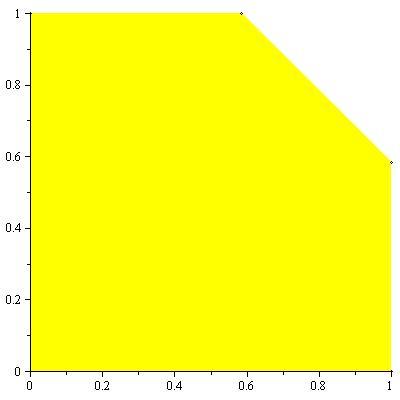

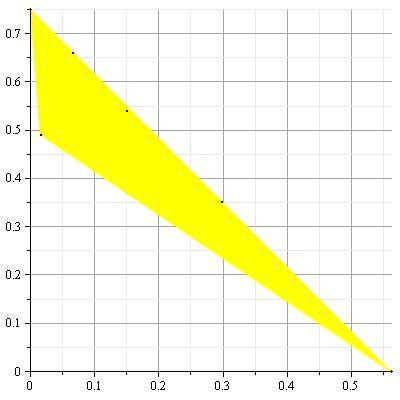

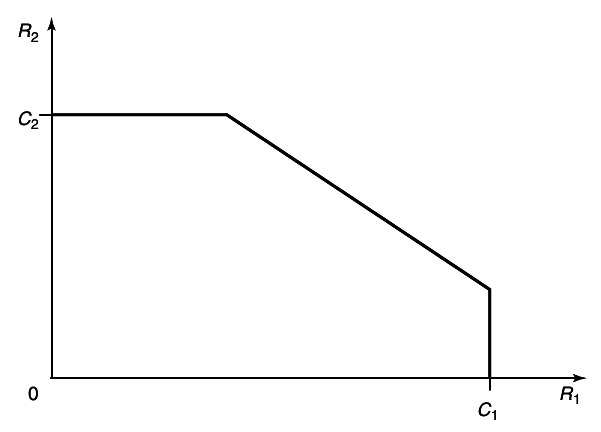

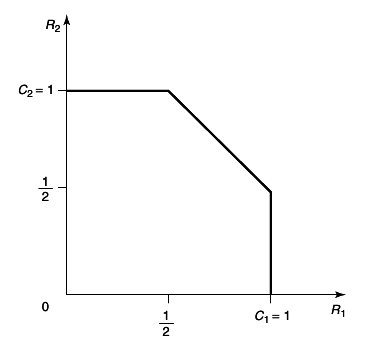

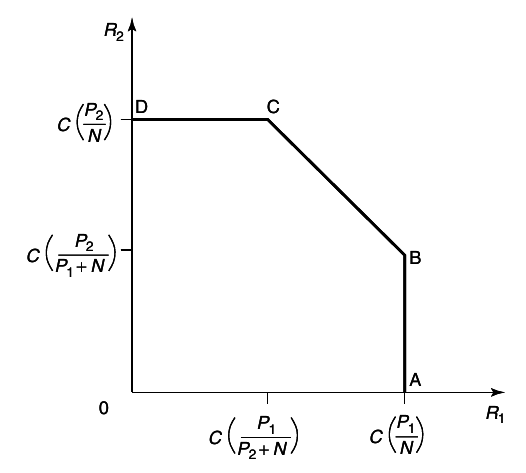

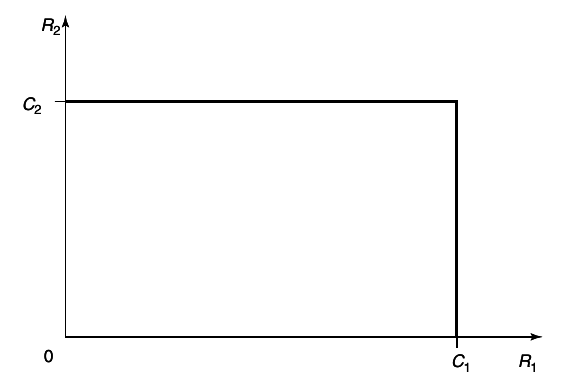

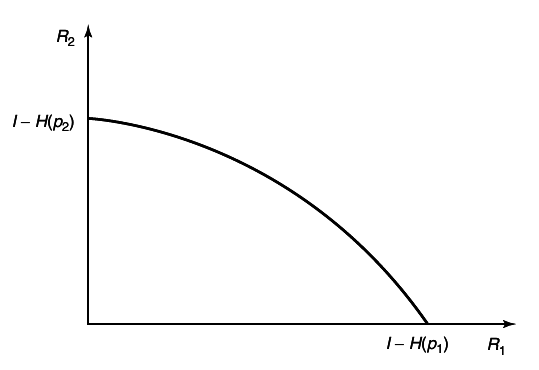

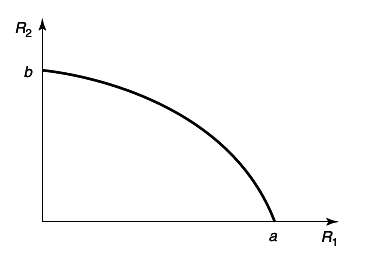

An example of the capacity region for a multiple-access channel is illustrated in figure 4

11↓. We first state the capacity region in the form of a theorem.

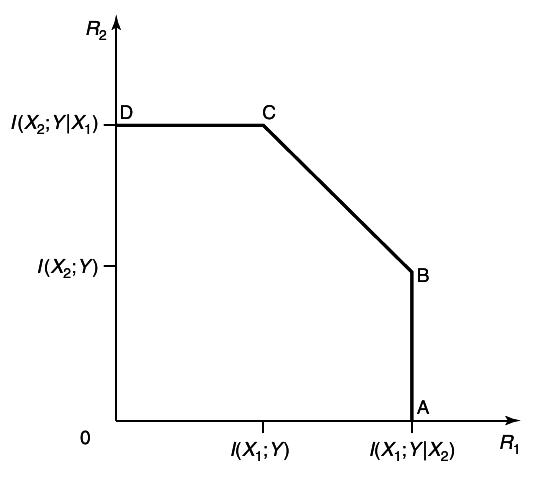

Theorem 15.3.1 (Multiple-access channel capacity)

The capacity of a multiple-access channel (X1 xX2, p(y|x1, x2), Y) is the closure of the convex hull of all (R1R2) satisfying

for some product distribution p2(x1)p2(x2) on X1 xX2.

Дефиниција од B. Grunbaum, Convex Polytopes за конвексна лушпа (convex hull) е: The convex hull conv(A) of subset A of Rd is the intersection of all the convex sets in Rd which contain A .

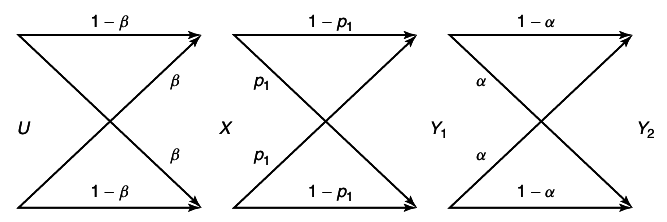

Before we prove that this is the capacity region of the multiple-access channel, let us consider a few examples of multiple-access channels:

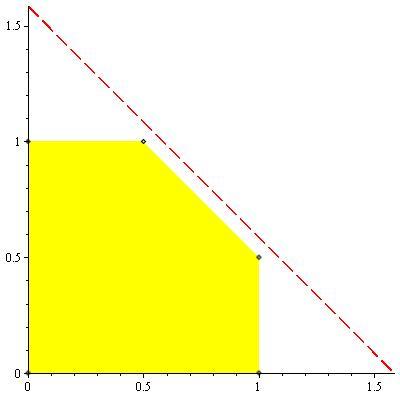

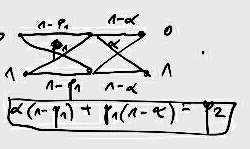

Example 15.3.1 (Independent binary symmetric channel)

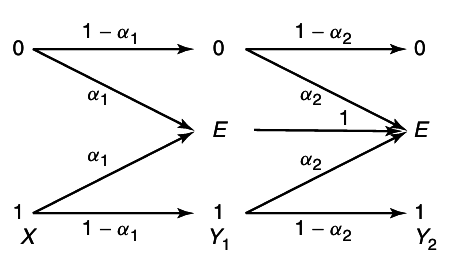

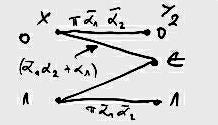

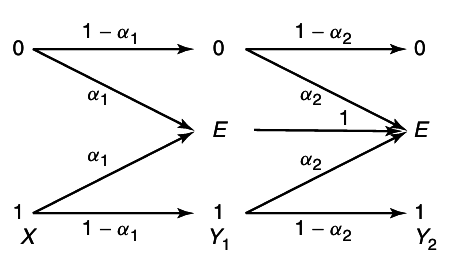

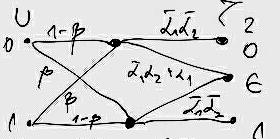

Assume that we have two independent binary symmetric channels one from sender 1 and other from sender 2 as shown in

12↓. In this case, it is obvious from the results of Chapter 7 that we can sent at rate

1 − H(p1) over the first channel and at rate

1 − H(p2) over the second channel. Since the channels are independent, there is no interference between the senders. The capacity region in this case is shown in

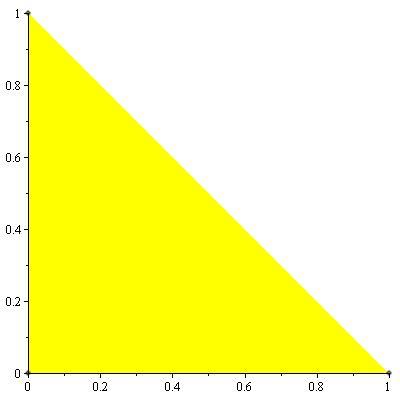

Example 15.3.2 (Binary multiplier channel)

Consider a multiple-access channel with binary inputs and outputs

Such channel is called

binary multiplier channel. It is easy to see that by setting

X2 = 1, we can send at a rate of

1 bit per transmission form sender

1 to the receiver. Similarly, setting

X1 = 1, we can achieve

R2 = 1. Clearly, since the output is binary, the combined rates

R1 + R2 cannot be more that 1 bit.

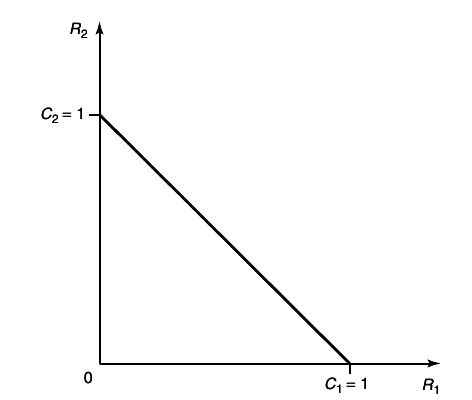

By time-sharing, we can achieve any combination of rates such that R1 + R2 = 1. Hence the capacity region is shown in

14↓

Example 15.3.3 (Binary erasure multiple-access channel)

This multiple-access channel has binary inputs, X1 = X2 = {0, 1} and ternary output Y = X1 + X2. There is no ambiguity in (X1X2) if Y = 0 or Y = 2 is received; but Y = 1 can result form either (0, 1) or (1, 0).

We now examine the achievable rates on the axes. Setting X2 = 0, we can send at rate of 1 bit per transmission from sender 1. Similarly, setting X1 = 0, we can send at a rate R2 = 1. This gives us two extreme point of the capacity region. Can we do better? Let us assume that R1 = 1, so that the codewords of X1 must include all possible binary sequences; X1 would look like a Bernoulli⎛⎝(1)/(2)⎞⎠ process. That acts as a noise for the transmission from X2.

Ова јас го замислувам како X1 да е поблиску до Y, a X2 подалеку. Со тоа во Y X1 без проблем се декодира, но воедно тој претставува шум за подалечниот X2.

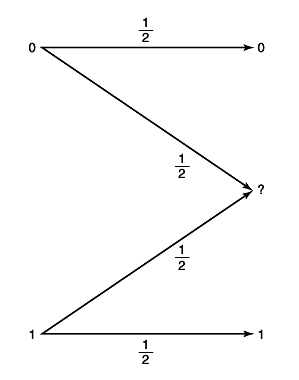

For

X2, the channel looks like the channel in

15↑. This is the binary erasure channel of Chapter 7. Recall in the results, the capacity of this channel is

(1)/(2) bits per transmission.

Hence when sending at the maximum rate 1 for sender 1, we can send an additional (1)/(2) bit form sender 2. Latter , after deriving the capacity region, we can verify that these rates are the best that can be achieved. The capacity region for a binary erasure channel is illustrated in

16↓.

1.3.1 Achievability of the Capacity Region for the Multiple-Access Channel

We now prove the achievability of he rate region in Theorem 15.3.1; the proof of the converse will be left until the next section. The proof of achievability is very similar to the proof for the single-user channel. We therefore only emphasize the points at which the proof differs form the single-user case. We begin by proving the achievability of rate pairs that satisfy

33↑ for some fixed product distribution

p(x1)p(x2). In Section 15.3.3 we extend this to prove that all points in the convex hull of

33↑ are achievable.

Proof: (Achievability in Theorem 15.3.1)

Fix \mathchoicep(x1, x2) = p1(x1)p2(x2)p(x1, x2) = p1(x1)p2(x2)p(x1, x2) = p1(x1)p2(x2)p(x1, x2) = p1(x1)p2(x2)

Generate 2nR1 independent codewords X1(i), i ∈ {1, 2, ..., 2nR1} , of length n, generating each element i.i.d. ~∏ni = 1p1(x1i). Similarly, generate 2nR2 independent codewords X2(j), j ∈ {1, 2, ..., 2nR2}, generating each element i.i.d ~ ∏ni = 1p2(x2i). These codewords form the code-book which is revealed to sender and the receiver.

To send index i, sender 1 sends the codeword X1(i). Similarly, to send j sender 2 sends X2(j).

Let A(n)ϵ denote the set of typical (x1 x2, y) sequences. The receiver Yn chooses the pair (i, j) such that

if such a pair (i, j) exists and is unique; otherwise, an error is declared.

Analysis of the probability of error:

By the symmetry of the random code construction, the conditional probability of error does not depend on which pair of indices is sent. Thus, the conditional probability of error is the same as the unconditional probability of error.

Потсети се како е дефинирана условната веројатност на грешка во Chapter 7 и како е дефинирана веројантоста на грешка. Ако сите условни веројатности се исти тогаш вкупнтата веројантост на грешка е еднакава на условната веројатност на грешка.

So without loss of generality, we can assume that (i, j) = (1, 1) was sent.

We have an error if either the correct codewords are not typical with the received sequence or there is a pair of incorrect codewords that are typical with the received sequence. Define the events

Then by the union of events bound,

where P is the conditional probability given that (1, 1) was sent. From the AEP, P(Ec11) → 0. By Theorems 15.2.1 and 15.2.3, for i ≠ 1 we have

\mathchoiceP(Ei1)P(Ei1)P(Ei1)P(Ei1) = Pr((X1(i), X2(1), Y) ∈ A(n)ϵ) = ⎲⎳(x1, x2, y) ∈ A(n)ϵp(x1)p(x2, y) ≤ |A(n)ϵ|2 − n(H(X1) − ϵ)2 − n(H(X2Y) − ϵ)

≤ 2n(H(X1X2Y) + 2ϵ)2 − n(H(X1) − ϵ)2 − n(H(X2Y) − ϵ) = 2 − n(H(X1) − ϵ + H(X2Y) − ϵ − H(X1X2Y) − 2ϵ) =

= 2 − n(H(X1) − ϵ + H(X2Y) − ϵ − H(X1X2Y) − 2ϵ) =

= 2 − n(H(X1) + H(X2Y) − H(X1X2Y) − 4ϵ) = |check box bellow| = 2 − n(I(X1;X2Y) − 4ϵ)\overset(a) = \mathchoice2 − n(I(X1;Y|X2 − 4ϵ)2 − n(I(X1;Y|X2 − 4ϵ)2 − n(I(X1;Y|X2 − 4ϵ)2 − n(I(X1;Y|X2 − 4ϵ)

Од Теорема 15.2.3 следи

P(S1’ = s1, S2’ = s2, S3’ = s3) = ∏ni = 1p(s1i|s3i)p(s2i|s3i)p(s3i)

∑(x1, x2, y) ∈ A(n)ϵp(x1|y)p(x2|y)p(y) = ∑(x1, x2, y) ∈ A(n)ϵp(x1)p(x2, y)

∑(x1, x2, y) ∈ A(n)ϵp(x1, x2, y) = ∑(x1, x2, y) ∈ A(n)ϵp(x1, x2)p(y|x1x2) = ∑(x1, x2, y) ∈ A(n)ϵp(x1)p(x2)p(y|x1x2) = ∑(x1, x2, y) ∈ A(n)ϵp(x1)p(x2y|x1)

Всушност ако се има во предвид Theorem 15.2.3 и се замисли дека X2 = S3

\mathchoice∑(x1, x2, y) ∈ A(n)ϵp(x2)⋅p(y, x1| x2) = ∑(x1, x2, y) ∈ A(n)ϵp(x2)⋅p(x1| x2)p(y|x2) = ∑(x1, x2, y) ∈ A(n)ϵp(x1)⋅p(x2)p(y|x2) = ∑(x1, x2, y) ∈ A(n)ϵp(x1)p(x2, y)∑(x1, x2, y) ∈ A(n)ϵp(x2)⋅p(y, x1| x2) = ∑(x1, x2, y) ∈ A(n)ϵp(x2)⋅p(x1| x2)p(y|x2) = ∑(x1, x2, y) ∈ A(n)ϵp(x1)⋅p(x2)p(y|x2) = ∑(x1, x2, y) ∈ A(n)ϵp(x1)p(x2, y)∑(x1, x2, y) ∈ A(n)ϵp(x2)⋅p(y, x1| x2) = ∑(x1, x2, y) ∈ A(n)ϵp(x2)⋅p(x1| x2)p(y|x2) = ∑(x1, x2, y) ∈ A(n)ϵp(x1)⋅p(x2)p(y|x2) = ∑(x1, x2, y) ∈ A(n)ϵp(x1)p(x2, y)∑(x1, x2, y) ∈ A(n)ϵp(x2)⋅p(y, x1| x2) = ∑(x1, x2, y) ∈ A(n)ϵp(x2)⋅p(x1| x2)p(y|x2) = ∑(x1, x2, y) ∈ A(n)ϵp(x1)⋅p(x2)p(y|x2) = ∑(x1, x2, y) ∈ A(n)ϵp(x1)p(x2, y)

докажано!!!

Ова се докажува и без Теорема 15.2.3

p(y, x1, x2) = p(x2)⋅p(y, x1| x2) = p(x2)⋅p(y|x2)p(x1| x2y) = p(x2)⋅p(y|x2)p(x1)

ако се претпостави дека x1 не зависи од y (Тоа важи ако имаш марков ланец X1 → X2 → Y).

|A(n)ϵ(S)|≐2n(H(S)±2ϵ)

\mathchoiceI(X1;X2Y) = H(X2Y) − H(X2Y|X1) = H(X2Y) − H(X2Y|X1) − H(X1) + H(X1) = H(X1) + H(X2Y) − H(X1X2Y)I(X1;X2Y) = H(X2Y) − H(X2Y|X1) = H(X2Y) − H(X2Y|X1) − H(X1) + H(X1) = H(X1) + H(X2Y) − H(X1X2Y)I(X1;X2Y) = H(X2Y) − H(X2Y|X1) = H(X2Y) − H(X2Y|X1) − H(X1) + H(X1) = H(X1) + H(X2Y) − H(X1X2Y)I(X1;X2Y) = H(X2Y) − H(X2Y|X1) = H(X2Y) − H(X2Y|X1) − H(X1) + H(X1) = H(X1) + H(X2Y) − H(X1X2Y)

Where equivalence in (a) follows form the independence of X1 and X2, and consequently

I(X1;X2Y) = \cancelto0I(X1;X2) + I(X1;Y|X2) = I(X1;Y|X2)

Similarly, for j ≠ 1,

Во овој случај соласно теорема 15.2.3 ќе земеш X1 = S3

Од Теорема 15.2.3 следи

P(S1’ = s1, S2’ = s2, S3’ = s3) = ∏ni = 1p(s1i|s3i)p(s2i|s3i)p(s3i)

Всушност ако се има во предвид Theorem 15.2.3 и се замисли дека X2 = S3

\mathchoice∑(x1, x2, y) ∈ A(n)ϵp(x1)p(x2y|x1) = ∑(x1, x2, y) ∈ A(n)ϵp(x1)p(x2|x1)p(y|x1) = ∑(x1, x2, y) ∈ A(n)ϵp(x1)p(x2)p(y|x1) = ∑(x1, x2, y) ∈ A(n)ϵp(x2)p(x1, y)∑(x1, x2, y) ∈ A(n)ϵp(x1)p(x2y|x1) = ∑(x1, x2, y) ∈ A(n)ϵp(x1)p(x2|x1)p(y|x1) = ∑(x1, x2, y) ∈ A(n)ϵp(x1)p(x2)p(y|x1) = ∑(x1, x2, y) ∈ A(n)ϵp(x2)p(x1, y)∑(x1, x2, y) ∈ A(n)ϵp(x1)p(x2y|x1) = ∑(x1, x2, y) ∈ A(n)ϵp(x1)p(x2|x1)p(y|x1) = ∑(x1, x2, y) ∈ A(n)ϵp(x1)p(x2)p(y|x1) = ∑(x1, x2, y) ∈ A(n)ϵp(x2)p(x1, y)∑(x1, x2, y) ∈ A(n)ϵp(x1)p(x2y|x1) = ∑(x1, x2, y) ∈ A(n)ϵp(x1)p(x2|x1)p(y|x1) = ∑(x1, x2, y) ∈ A(n)ϵp(x1)p(x2)p(y|x1) = ∑(x1, x2, y) ∈ A(n)ϵp(x2)p(x1, y)

|A(n)ϵ(S)|≐2n(H(S)±2ϵ)

(a) I(X2;X1Y) = H(X1Y) − H(X1Y|X2) = H(X1Y) − H(X1Y|X2) − H(X2) + H(X2) = H(X2) + H(X1Y) − H(X1X2Y)

\mathchoiceP(Ei1)P(Ei1)P(Ei1)P(Ei1) = P((X1(i), X2(1), Y) ∈ A(n)ϵ) = ∑(x1, x2, y) ∈ A(n)ϵp(x2)p(x1, y) ≤ |A(n)ϵ|2 − n(H(X2) − ϵ)2 − n(H(X1Y) − ϵ)

≤ 2n(H(X1X2Y) + 2ϵ)2 − n(H(X2) − ϵ)2 − n(H(X1Y) − ϵ) = 2 − n(H(X2) − ϵ + H(X1Y) − ϵ − H(X1X2Y) − 2ϵ)

= 2 − n(H(X2) + H(X1Y) − H(X1X2Y) − 4ϵ) = |(a)| = 2 − n(I(X2;X1Y) − 4ϵ)\overset(b) = \mathchoice2 − n(I(X2;Y|X1 − 4ϵ)2 − n(I(X2;Y|X1 − 4ϵ)2 − n(I(X2;Y|X1 − 4ϵ)2 − n(I(X2;Y|X1 − 4ϵ)

(b) I(X2;X1Y) = \cancelto0I(X1;X2) + I(X2;Y|X1) = I(X2;Y|X1)

and for i ≠ 1, j ≠ 1

P(Ei1) = P((X1(i), X2(1), Y) ∈ A(n)ϵ) = ∑(x1, x2, y) ∈ A(n)ϵp(x1 x2, y) ≤ |A(n)ϵ|2 − n(H(X1) − ϵ)2 − n(H(X2Y) − ϵ)

∑(x1, x2, y) ∈ A(n)ϵp(x1x2y) = ∑(x1, x2, y) ∈ A(n)ϵp(y)p(x1|y)p(x2|x1y) = ∑(x1, x2, y) ∈ A(n)ϵp(y)p(x1|y)p(x2|y) ≤

≤ 2n(H(X1X2Y) + 2ϵ)2 − n(H(Y) − ϵ)2 − n(H(X1|Y) − 2ϵ) − n(H(X2|Y) − 2ϵ) = 2 − n( − H(X1X2Y) − 7ϵ + H(Y) + H(X1|Y) + H(X2|Y))

I(X1X2;Y) = H(X1X2) − H(X1X2|Y) = H(X1X2) − H(X1|Y) − H(X2|Y) + H(Y|X1X2) − H(Y|X1X2) = H(X1X2Y) − H(X1|Y) − H(X2|Y) − H(Y|X1X2)

I(X1X2;Y) = H(X1X2) − H(X1X2|Y) = H(X1X2) − H(X1X2|Y) + H(Y) − H(Y) = H(X1X2) − H(Y) − H(X1X2|Y) + H(Y) = H(X1X2) − H(Y, X1, X2) + H(Y)

__________________________________________________________________________________

H(X1|Y) + H(X2|Y) = H(X1X2|Y) = H(X1X2Y) − H(Y)

I(X1X2;Y) = H(X1X2) − H(X1X2|Y) = H(X1X2) − H(X1X2|Y) + H(Y|X1X2) − H(Y|X1X2) = H(X1X2Y) − H(X1X2|Y) − H(Y|X1X2)

——————————————————————————–—————————————————–

∑(x1, x2, y) ∈ A(n)ϵp(x1x2y) = ∑(x1, x2, y) ∈ A(n)ϵp(y)⋅p(x1x2|y) = ≤ 2n(H(X1X2, Y) − 2ϵ)2 − n(H(Y) − ϵ)2 − n(H(X1X2|Y) − ϵ)

2n(H(X1X2, Y) − 2ϵ)2 − n(H(Y) − ϵ)2 − n(H(X1|Y) + H(X2|Y) − ϵ)

I(X1X2;Y) = H(Y) − H(Y|X1X2) = H(X1X2Y) − H(Y|X1X2) − H(X1|Y) − H(X2|Y) = H(X1X2Y) − H(Y|X1X2) − H(X1X2|Y)

——————————————————————————–——————————————————

∑(x1, x2, y) ∈ A(n)ϵp(x1x2y) = ∑(x1, x2, y) ∈ A(n)ϵp(x1x2)⋅p(y|x1x2) ≤ 2n(H(X1X2, Y) − ϵ)2 − n(H(X1X2) − ϵ)2 − n(H(Y|X1X2) − ϵ)

I(X1X2;Y) = H(Y) − H(Y|X1X2) = H(X1X2Y) − H(Y|X1X2) − H(X1|Y) − H(X2|Y) = H(X1X2Y) − H(Y|X1X2) − H(X1X2|Y)

H(X1X2Y) = H(X1) + H(X2|X1) + H(Y|X1X2) = H(Y) + H(X1|Y) + H(X2|YX1) = H(Y) + H(X1|Y) + H(X2|Y)

It follows that

I(X1;Y|X2) − 3ϵ − R1 ≥ 0 → R1 ≤ I(X1;Y|X2) − 3ϵ → R1 < I(X1;Y|X2)

Since ϵ ≥ 0 is arbitrary, the conditions of the theorem imply that each term tends to 0 as n → ∞. Thus the probability of error, conditioned on a particular codeword being sent, goes to zero if the conditions of the theorem are met. The above bound shows that the average probability of error, which by symmetry is equal to the probability for an individual codeword, averaged over all choices of codebooks in the random code construction, is arbitrarily small. Hence there exists at least one code C* with arbitrary small probability of error.

This completes the proof of achievability of region in

33↑ for a fixed input distribution. Later, in Section 15.3.3 we show that time-sharing allows any

(R1R2) in the convex hull to be achieved, completing the proof of the forward part of the theorem.

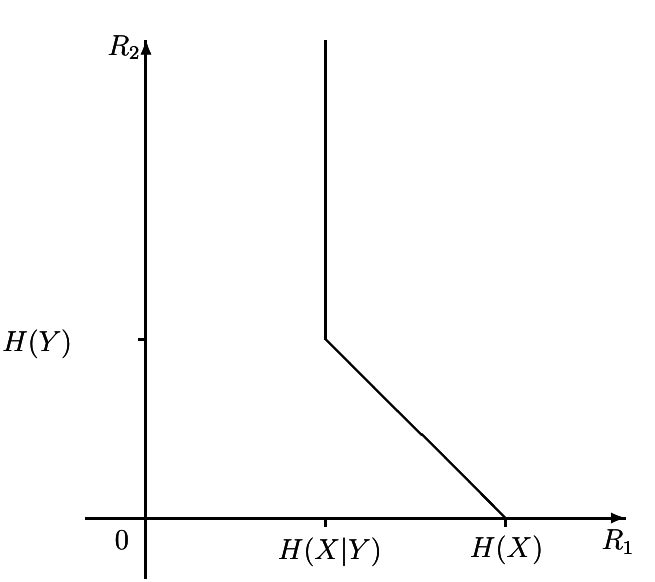

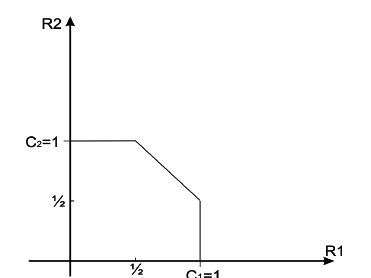

1.3.2 Comments on the Capacity Region for the Multiple-Access Channel

We have now proved the achievability of the capacity region of the multiple-access channel, which is the closure of the convex hull of the set of points (R1, R2) satisfying

for some distribution

p(x1)p(x2) on

X1 xX2. For a particular

p(x1)p(x2) the region is illustrated in

17↓\begin_inset Separator latexpar\end_inset

I(X2;Y|X1) = H(X2|X1) − H(X2|X1Y) = H(X2) − H(X2|X1Y) ≥ H(X2) − H(X2|Y) = I(X2;Y);

\mathchoiceI(X2;Y|X1) ≥ I(X2;Y)I(X2;Y|X1) ≥ I(X2;Y)I(X2;Y|X1) ≥ I(X2;Y)I(X2;Y|X1) ≥ I(X2;Y)

\mathchoice\overset(a)I(X2;Y|X1) + \overset(b)I(X1;Y) = I(X1X2;Y)\overset(a)I(X2;Y|X1) + \overset(b)I(X1;Y) = I(X1X2;Y)\overset(a)I(X2;Y|X1) + \overset(b)I(X1;Y) = I(X1X2;Y)\overset(a)I(X2;Y|X1) + \overset(b)I(X1;Y) = I(X1X2;Y)

(a) - максимална брзина што може да ја постигне предавателот 2

(b)- максимална брзина што може да ја постигне предавателот 1, а притоа предавателот 2 пренесува со максиламанта брзина

Оттука произлегува дека вкупната брзина е R = R1 + R2 < I(X1X2;Y).

Let us now interpret the corner points in the region.

Point A corresponds to the maximum rate achievable form sender 1 to receiver when sender 2 is not sending any information. This is

Now for any distribution p1(x1)p2(x2),

Логично е неравенството зошто сумата претставува средна вредност, а средната вредност е секогаш помала од максималната вредност.

since the average is less than the maximum.

Therefore, the maximum in 44↑ is attained when we set X2 = x2, where x2 is the value that maximizes conditional mutual information between X1 and Y. The distribution of

X1 is chosen to maximize this mutual information. Thus,

X2 must facilitate the transmission of

X1 by setting

X2 = x2.

The point B corresponds to the maximum rate at which sender 2 can send as long as sender 1 sends at his maximum rate. This is the rate that is obtained if X1 is considered as noise for the channel from X2 to Y

Види го примерот со 15.3.3 многу јасно е објаснато ова!!!

. In this case using the results form single-user channels,

X2 can send at a rate

I(X2;Y). The receiver now knows which

X2 code-word was used and can „subtract” its effect form the channel. We can consider the channel now to be an indexed set of single-user channels, where the index is the

X2 symbol used. The

X1 rate achieved in this case is the average mutual information where the average is over these channels, and each channel occurs as many times as the corresponding

X2 symbol appears in the codewords

Многу ефективно објаснувње!!!

. Hence, the rate achieved is

Points C and D correspond to B and A respectively, with the role of the senders reversed. The non-corner points can be achieved by time-sharing. Thus, we have given a single-user interpretation and justification for the capacity region of a multiple-access channel.

The idea of considering other signals as part of the noise, decoding one signal, and then „subtracting” it form the received signal is a very useful one. We will come across the same concept again in the capacity calculations for the degraded broadcast channel.

1.3.3 Convexity of the Capacity Region of the Multiple - Access Channel

We now recast the capacity region of the multiple-access channel in order to take into account the operation of taking the convex hull by introducing a new random variable. We begin by proving that the capacity region is convex.

The capacity region C of a multiple-access channel is convex [i.e., if (R1, R2) ∈ C and (R1’, R2’) ∈ C ,then (λR1 + + (1 − λ)R1’, λR2 + (1 − λ)R2’) ∈ C for 0 ≤ λ ≤ 1].

Откако го поминав доказот на Theorem 15.3.4 ова би го парафразирал вака: Convex combination of achievable rates is achievable!

The idea is time-sharing. Given two sequences for codes at different rates R = (R1R2) and R’ = (R1’, R2’), we can construct a third codebook at rate λR + (1 − λ)R’ by using the first codebook for the first λn symbols and using the second codebook for the last (1 − λ)n symbols. The number of X1 codewords in the new code is

and hence the rate of the new code is λR + (1 − λ)R’ . Since the overall probability of error is less than the sum of the probabilities of error for each of the segments, the probability of error of the new code goes to 0 and the rate is achievable.

We can now recast the statement of the capacity region for the multiple access channel using a time-sharing random variable Q. Before we prove this result, we need to prove a property of convex sets defined by linear inequalities like those of the capacity region of the multiple-access channel.

In particular, we would like to show that the convex hull of two such regions defined by linear constraints is the region defined by the convex combination of the constraints. Initially, the equality of these two sets seems obvious, but on closer examination, there is a subtle difficulty due to the fact that some of the constraints might not be acti+ve. This is best illustrated by an example. Consider the following two sets defined by linear inequalities:

In this case, the ⎛⎝(1)/(2), (1)/(2)⎞⎠ convex combination of the constraints defines the region

It is not difficult to see that any point in C1 or C2 has x + y < 20, so any point in the convex hull of the union of C1 or C2 has x + y < 20, so any point in the convex hull of the union of C1 and C2 satisfies this property. Thus, the point (15, 15), which is in C, is not in the convex hull of (C1∪C2). This example also hints at the cause of the problem - in the definition for C1, the constraint x + y ≤ 100 is not active. If this constraint were replaced by constraint x + y ≤ a, where a ≤ 20, the above result of the equality of the two regions would be true, as we now prove.

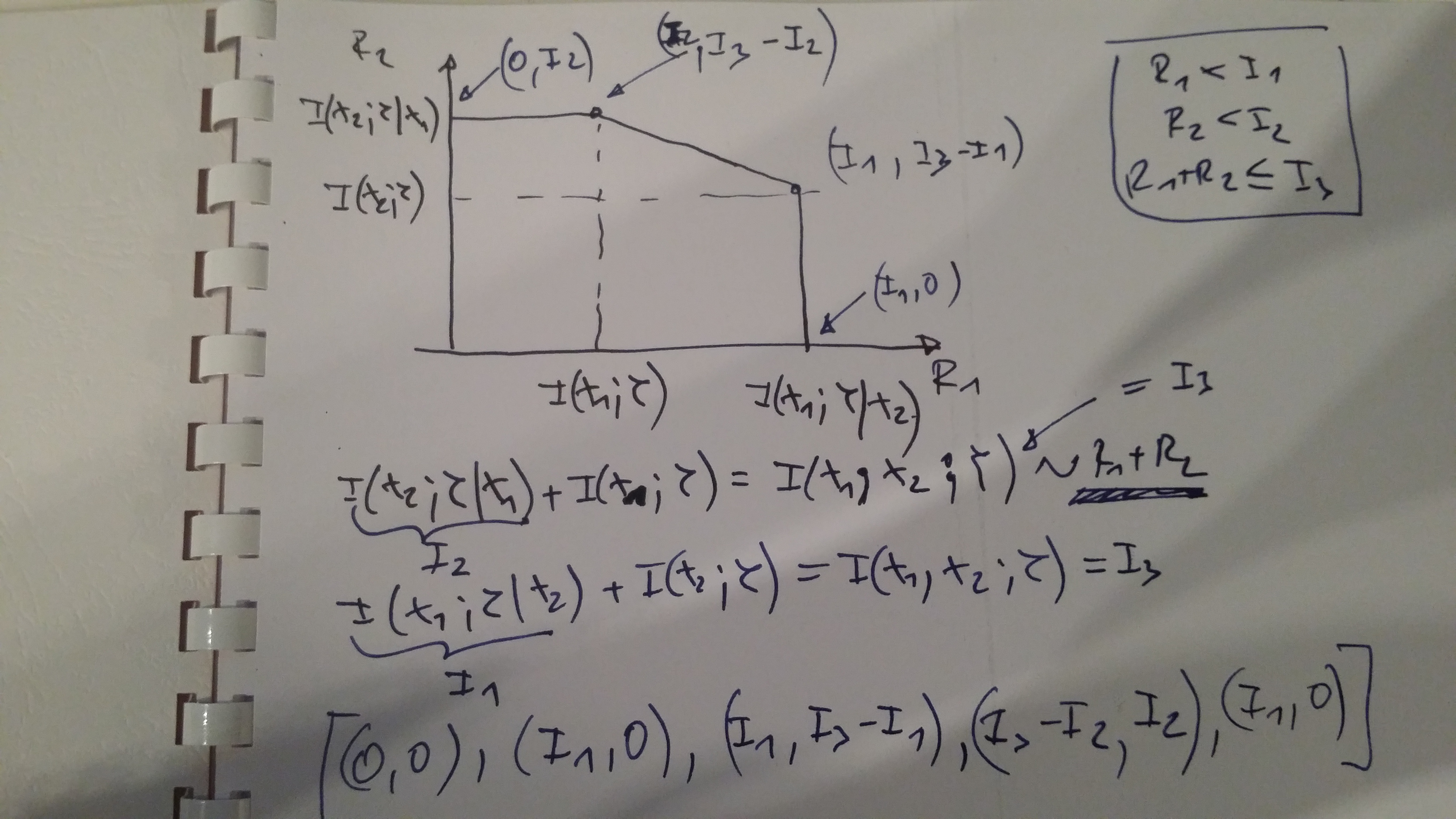

We restrict ourselves to the pentagonal regions that occur as components of the capacity region of two-user multiple-access channel. In this case, the capacity region for a fixed p(x1)p(x2) is defined by three mutual informations, I(X1;Y|X2), I(X2;Y|X1) and I(X1, X2;Y), which we shall call I1, I2 and I3, respectively. For each p(x1)p(x2) , there is a corresponding vector, I = (I1, I2, I3), and a rate region defined by

Also, since for any distribution p(x1)p(x2), we have

\mathchoiceI(X2;Y|X1)I(X2;Y|X1)I(X2;Y|X1)I(X2;Y|X1) = H(X2|X1) − H(X2|Y, X1) = H(X2) − H(X2|Y, X1) = I(X2;Y, X1) = I(X2;Y) + I(X2;X1|Y)\mathchoice ≥ I(X2;Y) ≥ I(X2;Y) ≥ I(X2;Y) ≥ I(X2;Y)

Ова истово го докажав на сличен начин во 1.3.2↑. Суштински е да имаш во предвид дека X1 и X2 се независни.

and therefore

I(X1;Y|X2) + I(X2;Y|X1) ≥ I(X1;Y|X2) + I(X2;Y) = I(X1X2;Y) → \mathchoiceI(X1;Y|X1) + I(X2;Y|X1) ≥ I(X1, X2;Y)I(X1;Y|X1) + I(X2;Y|X1) ≥ I(X1, X2;Y)I(X1;Y|X1) + I(X2;Y|X1) ≥ I(X1, X2;Y)I(X1;Y|X1) + I(X2;Y|X1) ≥ I(X1, X2;Y)

we have for all vectors I that \mathchoiceI1 + I2 ≥ I3I1 + I2 ≥ I3I1 + I2 ≥ I3I1 + I2 ≥ I3 . This property will turn out to be critical for the theorem.

Let

I1, I2 ∈ R3 be two vectors of mutual informations that define rate regions

CI1 and

CI2, respectively, as given in

51↑. For

0 ≤ λ ≤ 1 define

Iλ = λI1 + (1 − λ)I2 and let

CIλ be the rate region defined by

Iλ. Then

We shall prove this theorem in two parts. We first show that any point in the (λ, 1 − λ) mix of the sets CI1 and CI2 satisfies the inequalities for I1 and point in CI2 satisfies the inequalities for I2, so the (λ, 1 − λ) mix of these points will satisfy the (λ, 1 − λ) mix of the constraints. Thus, it follows that

To prove the reverse inclusion, we consider the extreme points of the pentagonal regions.

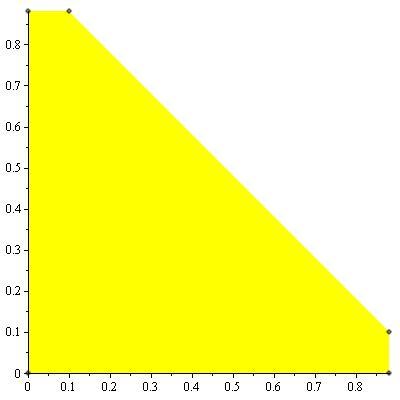

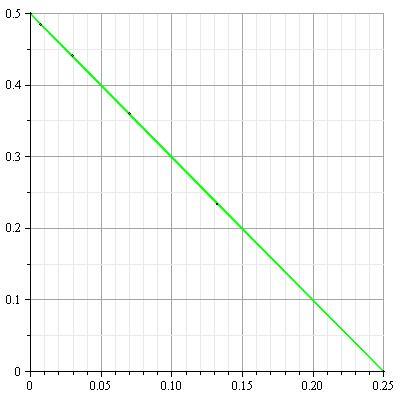

It is not difficult to see that the rate regions defined in 51↑ are always in the form of a pentagon, or in the extreme case when I3 = I1 + I2 in the form of a rectangle

Ова е добро илустрирано во Maple worksheet-от

. Thus, the capacity region

CI can be also defined as a convex hull of five points:

Ова многу добро го илустрирав во соодветниот Maple worksheet.

Consider the region defined by Iλ ; it, too, is defined by five points. Take any one of the points, say (I(λ)3 − I(λ)2, I(λ)2). This point can be written as the (λ, 1 − λ) mix of the points (I(1)3 − I(1)2, I(1)2) and (I(2)3 − I(2)2, I(2)2), and therefore lies in the convex mixture of CI1 and CI2. Thus, all extreme points of the pentagon CIλ lie in the convex hull of CI1 and CI2, or

Combining the two parts we have the theorem.

In the proof of the theorem, we have implicitly used the fact that all the rate regions are defined by five extreme points (at worst, some of the points are equal). all five points defined by the

I vector were within the rate region. If the condition

I3 ≤ I1 + I2 is not satisfied, some of the points in

54↑ may be outside the rate region and the proof collapses.

As an immediate consequence of the above lemma, we have the following theorem:

The convex hull of the union of the rate regions defined by individual I vectors is equal to the rate region defined by the convex hull of the I vectors.

These arguments on the equivalence of the convex hull operation on the rate regions with the convex combinations of the mutual informations can be extended to the general

m-user multiple-access channel. A proof along these lines using the theory of polymatroids is developed in

[4].

The set of achievable rates of a discrete memory-less multiple-access channel is given by the closure of the set of all (R1R2) pairs satisfying

Во ваква форма ги сведуваат во Capacity Theorem.

R1 < I(X1;Y|X2, Q) ≤ I(X1;Y|X2)

R2 < I(X2;Y|X1, Q) ≤ I(X2;Y|X1)

R1 + R2 < I(X1, X2;Y|Q) ≤ I(X1X2;Y)

Интерпретацијата е едноставна. Q e time-sharing променлива. Во генерален случај на тој начин било која условена неизвесност или заедничка информација можеш да ја претставиш како сума од поединечните условени ентропии или заеднички ентропии. Преку веројатноста p(Q) = (1)/(n) се дефинира веројатноста за пренос во „еден тајмслот” од вкупноте n. Ова некако ми оди на статистичко мултиплексирање наместо на статичко. Не ги вртиш сите трансмисии по реден број туку оставаш веројатноста тоа да го одлучи. Може во екстремен случај сите n тајмслоти да ги зафати еден предевател, зошто случајноста така одлучила.

for some choice of the joint distribution \mathchoicep(q)p(x1|q)p(x2|q)p(y|x1x2)p(q)p(x1|q)p(x2|q)p(y|x1x2)p(q)p(x1|q)p(x2|q)p(y|x1x2)p(q)p(x1|q)p(x2|q)p(y|x1x2) with |Q| ≤ 4 .

We will show that every rate pair lying in the region defined in

56↑ is achievable (i.e., it lies in the convex closure of the rate pairs satisfying Theorem 15.3.1). We also show that every point in the convex closure of the region in Theorem 15.3.1 is also in the region defined in

56↑.

Consider a rate point

R satisfying the inequalities

56↑ of the theorem. We can rewrite the right-hand side of the first inequality as

where m is the cardinality of the support set of Q. We can expand the other mutual informations similarly.

For simplicity in notation we consider a rate pair as a vector and denote a pair satisfying the inequalities in

56↑ for a specific input product distribution

p1q(x1)p2q(x2) as

Rp1p2 as

Rq. Specifically, let

Rq = (R1q, R2q) be a rate pair satisfying

Then by Theorem 15.3.1.,

Rq = (R1q, R2q) is achievable. Then since

R satisfies

56↑ and we can expand the right-hand sides as in

58↑, there exists a

set of pairs Rq satisfying

61↑ such that

Ова го разбирам како проширување на 52↑ CIλ = λCI1 + (1 − λ)CI2

Since a convex combination of achievable rates is achievable, so is R. Hence, we have proven the achievability of the region in the theorem.

The same argument can be used to show that every point in the convex closure of the region in

33↑ can be written as the mixture of points satisfying

61↑ and hence can be written in the form

56↑.

The converse is proved in the next section.

The converse shows that all achievable rate pairs are of the form 56↑ and hence establishes that this is the capacity region of the multiple-access channel. The cardinality bound on the

time-sharing random variable Q is a consequence of Caratheodory’s theorem on convex sets. See discussion below.

The proof of the convexity of the capacity region shows that any convex combination of achievable rate pairs is also achievable.

We can continue this process, taking convex combinations of more points.

Мене одма ми заличи на ова изразот 62↑

Do we need to use an arbitrary number of points? Will the capacity region be increased? The following theorems says no.

Theorem 15.3.5 (Caratheodory) (MMV)

Any point in the convex closure of a compact set A in a d-dimensional euclidean space can be represented as a convex combination of d + 1 or fewer points in the original set A.

Формулатцијата на оваа теорема во книгата Convex Polytopes е:

If A is subset of Rd then every x ∈ conv(A)

conv(A) значи дека x e точка од convex hull од A

is expressible in the form:

x = ∑di = 0αixi where xi ∈ A, ai ≥ 0 and ∑di = 0αi = 1

The proof may be found in Eggleston

[5] and Grunbaum

[6].

This theorem allows us to restrict attention to a certain finite convex combination when calculating the capacity region. This is an important property because without it, we would not be able to compute the capacity region in

56↑, since we would never know whether using a larger alphabet

Q would increase the region.

In the multiple-access channel, the bounds define a connected compact set in three dimensions. Therefore, all points in its closure can be defined as the convex combination of at most four points. Hence, we can restrict the cardinality of Q to at most 4 in the above definition of the capacity region.

Many of the cardinality bounds may be slightly improved by introducing other considerations. For example, if we are only interested in the boundary of the convex hull of A as we are in capacity theorems, a point on the boundary can be expressed as a mixture of d points of A, since a point on the boundary lies in the intersection of A with a (d − 1) - dimensional support hyperplane.

1.3.4 Converse for the Multiple-Access Channel

We have so far proved the achievability of the capacity region. In this section we prove the converse.

Proof: (Converse to Theorems 15.3.1 and 15.3.4)

We must show that given any sequence of \mathchoice((2nR1, 2nR2), n)((2nR1, 2nR2), n)((2nR1, 2nR2), n)((2nR1, 2nR2), n) codes with P(n)e → 0 the rates must satisfy

for some choice of random variable Q defined on {1, 2, 3, 4} and joint distribution p(q)p(x1|q)p(x2|q)p(y|x1x2). Fix n. Consider the given code of block length n. The joint distribution on W1 xW2 xXn1 xXn2 xYn is well defined. The only randomness is due to the random uniform choice of indices W1 and W2 and the randomness induced by the channel. The joint distribution is

where p(xn1|w1) is either 1 or 0, depending on whether xn1 = x1(w1), the codeword corresponding to w1, or not, and similarly, p(xn2|w2) = 1 or 0, according to whether xn2 = x2(w2) or not. The mutual informations that follow are calculated with respect to this distribution.

By the code construction, it is possible to estimate (W1, W2) from the received sequence Yn with low probability of error. Hence, the conditional entropy of (W1W2) given Yn must be small. By Fano’s inequality,

H(W1, W2|Yn) ≤ n(R1 + R2)P(n)e + H(P(n)e)≜nϵn

It is clear that ϵn → 0 as P(n)e. Then we have

We can now bound the rate R1 as

= H(Xn1(W1)) − H(X(n)1(W1)|Yn)\overset(c) ≤ \mathchoiceH(X(n)1(W1)|X(n)2(W2)) − H(X(n)1(W1)|Yn, X(n)2(W2)) + nϵnH(X(n)1(W1)|X(n)2(W2)) − H(X(n)1(W1)|Yn, X(n)2(W2)) + nϵnH(X(n)1(W1)|X(n)2(W2)) − H(X(n)1(W1)|Yn, X(n)2(W2)) + nϵnH(X(n)1(W1)|X(n)2(W2)) − H(X(n)1(W1)|Yn, X(n)2(W2)) + nϵn =

= I(X(n)1(W1);Yn|X2(W2)) + nϵn = H(Y(n)|Xn2(W2)) − H(Yn|X(n)2(W2), X(n)1(W1)) + nϵn =

\overset(d) = H(Yn|Xn2(W2)) − n⎲⎳i = 1H(Yi|Yi − 1Xn2(W2), X(n)1(W1)) + nϵn =

\overset(e) = H(Yn|X(n)2(W2)) − n⎲⎳i = 1H(Yi|X1i, X2i) + nϵn\overset(f) ≤ n⎲⎳i = 1H(Yi|Xn2(W2)) − n⎲⎳i = 1H(Yi|X1i, X2i) + nϵn ≤

where

(a) follows from Fanno inequality

Веројатно на аспектот од фано дека H(W1|Yn) е многу мало.

(b) follows data-processing inequality

(c) follows from the fact that since W1 and W2 are independent, so are X(n)1(W1) and X(n)2(W2), and hence H(Xn1(W1)) = H(X(n)1(W1)|X(n)2(W2)) and H(Yn|X(n)2(W2), X(n)1(W1)) ≤ H(Yn|X(n)2(W2)) by conditioning.

(d) follows from the chain rule

(e) follows form the fact that Yi depends only on X1i and X2i by the memoryless property of the channel

(f) follows from the chain rule and removing conditioning

(g) follows from removing conditioning

Hence, we have

Similarly, we have

To bound the sum of the rates, we have

where

(a) follows from Fano’s inequality

(b) follows form the data-processing inequality

(c) follows form the chain rule

(d) follows form the fact that Yi depends only on X1i and X2i and is conditionally independent of everything else

(e) follows form the chain rule and removing conditioning

Hence we have

Овој доказ секогаш најмногу ми кажува и ме учи. Логично е се ова ако се има во предвид дека бројот на елементи во (W1W2) e 2nR1⋅2nR2. Максималната ентропија е

log(2nR1⋅2nR2) = n(R1 + R2). Од овој иницијален резултат со помош на изведувањата од

71↑ до

74↑ одма доаѓаш до резултатот во

75↑. Оригинален е начинот на добивање на изразот

69↑ и тоа делот означен во зелено т.е.

(c).

The expressions in

69↑,

70↑ and

75↑ are the averages of the mutual informaitons calculated at the empirical distributions in column

i of the codebook. We can rewrite these equations with the new variable

Q, where

Q = {1, 2, ..., n} with probability

(1)/(n). The equations become

R1 ≤ (1)/(n)n⎲⎳i = 1I(X1i;Yi|X2i) + ϵn

= (1)/(n)n⎲⎳i = 1I(X1q;Yq|X2q, Q = i) + ϵn

(1)/(n)∑ni = 1I(X1q;Yq|Q = i)

I(X1Q;YQ|Q) = H(X1Q|Q) − H(X1Q|Q, YQ)

H(X1Q|Q) = (1)/(n)∑nq = 1H(X1q|Xq − 11q) = |independence of X1qfrom Xq − 11| = (1)/(n)⋅∑nq = 1H(X1q|q)

Всушност мислам дека генијално се ослободува од сумата со користење на помошната променлива. Ама секако и без неа може да се ослободи од сумата заради независнота на X1 и X2.

24.06.2013

Во чланакот Capacity Theorem одат ушт еден чекор понатаму

R1 ≤ I(X1;Y|X2, Q) + ϵn ≤ I(X1;Y|X2) + ϵn

where X1≜X1Q, X2≜X2Q and Y≜YQ are new random variables whose distributions depend on Q in the same way as the distributions of X1i, X2i and Yi depend on i. Since W1 and W2 are independent, so are X1i(W1) and X2i(W2), and hence

Hence, taking the limit as n → ∞, P(n)e → 0, we have the following converse:

for some choice of joint distribution p(q)p(x1|q)p(x2|q)p(y|x1x2). As in Section 15.3.3, the region is unchanged if we limit the cardinality of Q to 4.

This completes the proof of the converse.

Thus, the achievability of the region of Theorem 15.3.1 was proved in Section 15.3.1. In Section 15.3.3 we showed that every point in region defined by

63↑ was also achievable.

In the converse, we showed that the region in 63↑ was best we can do, establishing that this is indeed the capacity region of the channel. Thus, the region in

33↑ cannot be any larger than the region in

63↑, and this is the capacity region of the multiple-access channel.

Во Capacity Theorem чланакот продолжуваат понатаму за релеен канал и го сведуваат на следнава форма

R1 < I(X1;Y|X2, Q) ≤ I(X1;Y|X2)

R2 < I(X2;Y|X1, Q) ≤ I(X2;Y|X1)

R1 + R2 < I(X1, X2;Y|Q) ≤ I(X1X2;Y)

Ова дефинитивно фали да се додаде и во книгата.

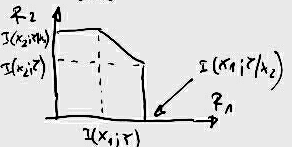

1.3.5 m-User Multiple-Access Channels

We will now generalize the result derived for two senders to

m senders,

m ≥ 2. The multiple-access channel in this case is shown in

19↓.

We send independent indices w1, w2, ..., wm over the channel form the senders 1, 2, ..., m respectively. The codes, rates, and achievability are all defined in exactly the same way as in the tow-sender case.

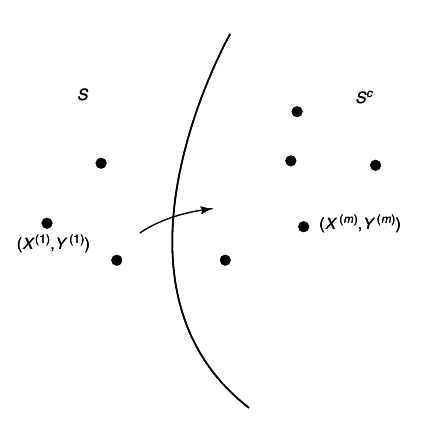

Let S ⊂ {1, 2, ..., m} , respectively. Let Sc denote the complement of S. Let R(S) = ∑i ∈ SRi and let X(S) = {Xi:i ∈ S}. Then we have the following theorem.

The capacity region of the m-user multiple-access channel is the closure of the convex hull of the rate vectors satisfying

for some product distribution p1(x1)p2(x2)...pm(xm) .

The proof contains no new ideas. There arе now 2m − 1 terms in the probability of error in the achievability proof and equal number of inequalities in the proof of the converse.

In general, the region in

78↑ is a beveled box.

1.3.6 Gaussian Multiple-Access Channels (MMV)

We now discuss the Gaussian multiple-access channel of Section 15.1.2. in somewhat more detail.

Two senders, X1 and X2, communicate to the single receiver, Y. The received signal at time i is:

where

{Zi} is sequence of independent, identically distributed, zero-mean Gaussian random variables with variance

N 20↓

We assume that there is a power constraint Pj on sender j; that is, for each sender for all messages we must have

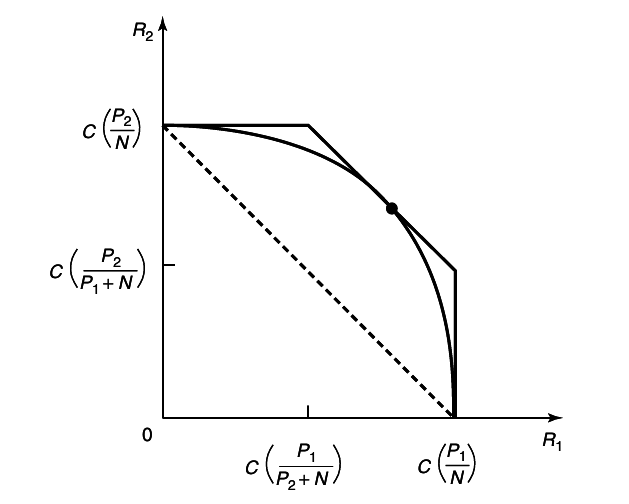

Just as the proof of achievability of channel capacity for the discrete case (Chapter 7) was extended to the Gaussian channel (Chapter 9) we can extend the proof for the discrete multiple-access channel to the Gaussian multiple-access channel. The converse can also be extended similarly, so we expect the capacity region to be the convex hull of the set of rate pairs satisfying

for some input distribution f1(x1)f2(x2) satisfying EX21 ≤ P1 and EX22 ≤ P2.

Now we can expand the mutual information in terms of relative entropy, and thus

\mathchoiceI(X1;Y|X2) = h(Y|X2) − h(Y|X1X2) = h(X1 + X2 + Z|X2) − h(X1 + X2 + Z|X1X2) = I(X1;Y|X2) = h(Y|X2) − h(Y|X1X2) = h(X1 + X2 + Z|X2) − h(X1 + X2 + Z|X1X2) = I(X1;Y|X2) = h(Y|X2) − h(Y|X1X2) = h(X1 + X2 + Z|X2) − h(X1 + X2 + Z|X1X2) = I(X1;Y|X2) = h(Y|X2) − h(Y|X1X2) = h(X1 + X2 + Z|X2) − h(X1 + X2 + Z|X1X2) =

(a) - follows from the fact that

Z is independent of

X1 an

X2, and (b) from the fact that normal maximizes entropy for a given second moment. Thus, the maximizing distribution is

X1 ~ N(0, P1) and

X2 ~ N(0, P2) with

X1 and

X2 independent. This distribution simultaneously maximizes the mutual information bounds in

81↑-

83↑.

We define the channel capacity function

corresponding to the channel capacity of Gaussian white-noise channel with signal-to-noise ratio

x (

21↓). Then we write the bound on

R1 as

and

(1)/(2)⋅log⎛⎝(N + P1)/(N)⎞⎠ + (1)/(2)⋅log⎛⎝(N + P2)/(N)⎞⎠ = ??

I(X1;Y) = (1)/(2)⋅log(E[X21] + E[X22] + N) − (1)/(2)log(E[X22] + N)

I(X1;Y|X2) = (1)/(2)⋅log(E[X21] + N) − (1)/(2)log(N) = (1)/(2)log⎛⎝(P1 + N)/(N)⎞⎠

не може вака туку треба:

I(X1X2;Y) = H(Y) − H(Y|X1X2) = H(X1 + X2 + Z) − H(X1 + X2 + Z|X1X2) ≤ (1)/(2)log(2πe)(P1 + P2 + N) − (1)/(2)log(2πe)(N) = C⎛⎝(P1 + P2)/(N)⎞⎠

I(X2;Y) = I(X1X2;Y) − I(X1;Y|X2) = (1)/(2)log⎛⎝(N + P1 + P2)/(N)⎞⎠ − (1)/(2)⋅log⎛⎝(N + P1)/(N)⎞⎠ = (1)/(2)log⎛⎝⎛⎝(N + P1 + P2)/(\cancelN)⎞⎠⎛⎝(\cancelN)/(N + P1)⎞⎠⎞⎠ = (1)/(2)log⎛⎝(N + P1 + P2)/(N + P1)⎞⎠ = (1)/(2)log⎛⎝1 + (P2)/(N + P1)⎞⎠ = C⎛⎝(P2)/(N + P1)⎞⎠

These upper bounds are achieved when X1 ~ N(0, P1) and X2 = N(0, P2) and define the capacity region. The surprising fact about these inequalities is that the sum of the rates can be as large as C⎛⎝(P1 + P2)/(N)⎞⎠ which is the rate achieved by a single transmitter sending with a power equal to the sum of the powers.

The interpretation of the corner points is very similar to the interpretation of the achievable rate pairs for a discrete multiple-access channel for a fixed input distribution. In the case of the Gaussian channel, we can consider decoding as a two-stage process:

In the fist stage, the receiver decodes the second sender, considering the first sender as part of the noise. This decoding will have low probability of error if R2 < C⎛⎝(P2)/(P1 + N)⎞⎠.

After the second sender has been decoded successfully, it can be subtracted out and the first sender can be decoded correctly if R1 ≤ C⎛⎝(P1)/(N)⎞⎠. Hence, this argument shows that we can achieve the rate pairs at the corner points of the capacity region by means of single-user operations. This process called

onion-peeling, can be extended to any number of users.

Види го завршетокот на оваа глава.

Суштината на onion-peeling е постапката каде еден сигнал се декодира, а сите други (недекодирани до тогаш) се сметаат за шум. Декодираниот сигнал се одзема од резултантниот и се продолжува со декодирање на преостанатите сигнали.

If we generalize this to

m senders with equal power, the total rate is

C⎛⎝(mP)/(N)⎞⎠ which goes to

∞ as

m → ∞ .

The average rate per sender (1)/(m)⋅C⎛⎝(mP)/(N)⎞⎠ goes to 0. Thus, when the total number of senders is very large, so that there is a lot of interference,

we can still send a total amount of information that is arbitrarily large even though the rate per individual sender goes to 0.

The capacity region described above corresponds to code-division multiple access CDMA, where separate codes are used for the different senders and the receiver decodes them one by one. In many practical situations, though, simpler schemes, such as frequency-division multiplexing or time-division multiplexing, are used. With frequency-division multiplexing, the rates depend on the bandwidth allotted to each sender. Consider the case of two senders with powers P1 and P2 using non-intersecting frequency bands with bandwidths W1 and W2, where W1 + W2 = W (Total bandwidth). Using the formula for the capacity of a single-user band-limited channel, the following rate pair is achievable:

As we vary

W1 and

W2, we trace out the curve as shown in

23↓.

This curve touches the boundary of the capacity region at one point, which corresponds to allotting bandwidth to each channel proportional to the power in that channel. We conclude that no allocation of frequency bands to radio stations can be optimal unless the allocated powers are proportional to the bandwidths.

In time-division multiple access (TDMA), time is divided into slots, and each user is allotted a slot during which only that user will transmit and every other user remains quiet. If there are two users, each of power P, rate that each sends when the other is silent is C(P ⁄ N) . Now if time is divided into equal-length slots, and every odd slot is allocated to user 1 and every even slot to user 2, the average rate that each user achieves is (1)/(2)C(P ⁄ N) . This system is called naive time-division multiple access (TDMA). However it is possible to do better if we notice that since user 1 is sending only half the time, it is possible for him to use twice the power during his transmissions and still maintain the same average power constraint. With this modification, it is possible for each user to send information at a rate (1)/(2)C(2P ⁄ N). By varying the lengths of the slots allotted to each sender (and the instantaneous power used during the slot), we can achieve the same capacity region as FDMA with different bandwidth allocations.

As

23↑ illustrates, in general the capacity region is larger than that achieved by time- or frequency-division multiplexing.

But note that the multiple-access capacity region derived above is achieved by use of a common decoder for all the senders. However, it is also possible to achieve the capacity region by onion-peeling, which removes the need for a common decoder and instead, uses a sequence of single-user codes. CDMA achieves the entire capacity region, and in addition, allows new users to be added easily without changing the codes of the current users.

On the other hand, TDMA and FDMA systems are usually designed for a fixed number of users and it is possible that either some slots are empty (if actual users is less than the number of slots) or some users are left out (if the number of users is greater than the number of slots). However, in many practical systems, simplicity of design is an important consideration, and the improvement in capacity due to the multiple-access ideas presented earlier may not be sufficient to warrant the increased complexity.

For a Gaussian multiple-access system with m sources with powers P1, P2, ..., Pm and ambient noise of power N, we can state the equivalent of Gauss’s law for аny set S in the form

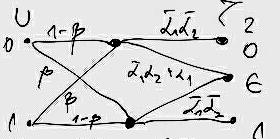

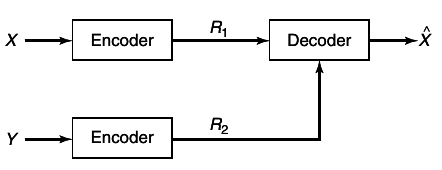

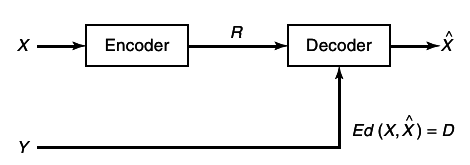

1.4 Encoding of correlated sources

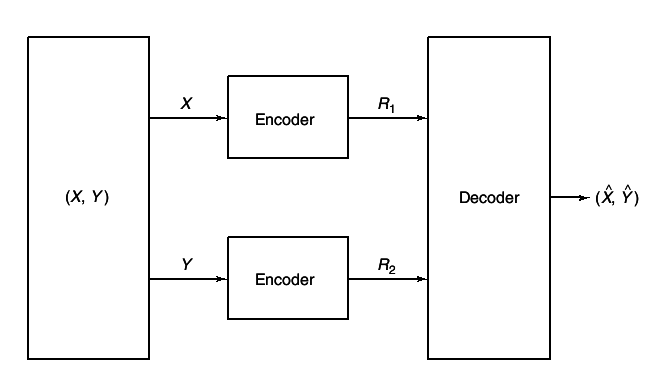

We now turn to distributed data compression. This problem is in many ways the data compression dual to the multiple-access channel problem. We know now to encode a source

X. A rate

R ≥ H(X) is sufficient. Now suppose that there are two source

(X, Y) ~ p(x, y). A rate

H(X, Y) is sufficient if we are encoding them together.

Откако го поминав проблемот 15.1 т.е. 15.2 ми стана јасна една фундаментална работа. H(X, Y)е дефиниран на множетво со следниве елементи XxY кое има |XxY| = 2nR12nR2 = 2n(R1 + R2) елементи. Затоа овде вели дека R1 + R2 ≥ H(X, Y). Си замислуваш трета променлива која ги содржи елементите од XxY кои елементи се дистрибуирани по p(X, Y).

But what if the

X and

Y sources must be described separately for some user who wishes to reconstruct both

X and

Y. It is seen that rate

R = Rx + Ry > H(X) + H(Y) is sufficient. However, in a surprising an fundamental paper by Slepian and Wolf

[7],

it is shown that a total rate R = H(X, Y) is sufficient even for separate encoding of correlated sources.

Let

(X1, Y1), (X2Y2), ... be a sequence of jointly distributed random variables i.i.d~

p(x, y). Assume that the

X sequence is available at location

A and the

Y sequence is available at a location

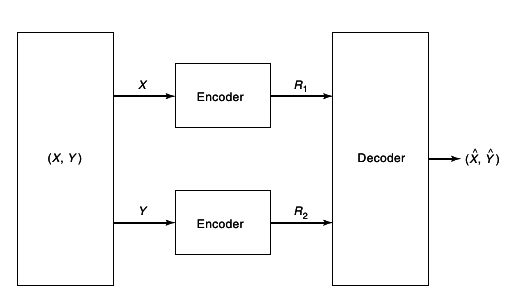

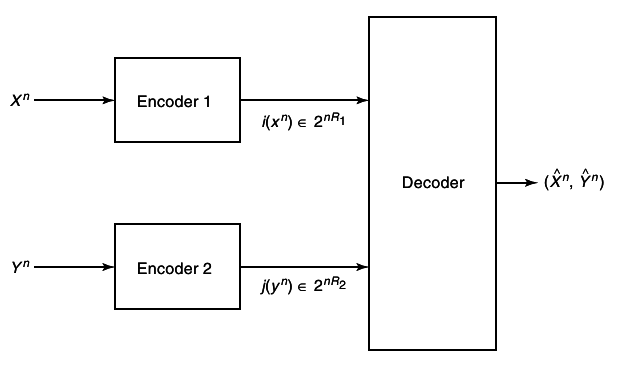

B. The situation is illustrated in

24↓.

Before we proceed to the proof of this result, we will give a few definitions.

A ((2nR1, 2nR2), n) distributed source code for the joint source (X, Y) consists of two encoder maps,

and decoder map,

g:{1, 2, ...2nR1} x{1, 2, ..., 2nR2} → XnxYn

Here f1(Xn) is the index corresponding to Xn, f2(Yn) is index corresponding to Yn, and (R1R2) is the rate pair of the code.

The probability of error for a distributed source code is defined as

A rate pair (R1R2) is said to be achievable for a distributed source if there exists a sequence of ((2nR12nR2), n) distributed source codes with probability of error P(n)e → 0. The achievable rate region is the closure of the set of achievable rates.

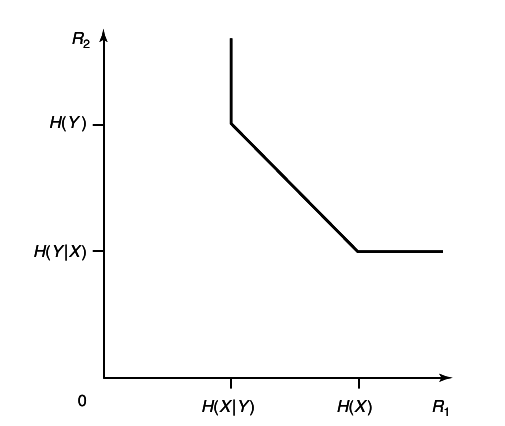

Theorem 15.4.1 (Slepian-Wolf)

For the distributed source coding problem for the source (X, Y) drawn i.i.d ~p(x, y), the achievable rate region is given by

Значи со помалку бити можеш да го опишеш X и Y поединечно но и заедно. На пример во случај на X наместо да користиш H(X) може да користиш H(X|Y) бити (Види Source Coding with side information).

Let as illustrate the result with some examples.

Consider the weather in Gotham and Metropolis. For the purposes of our example, we assume that Gotham is sunny with probability 0.5 and that the weather in Metropolis is the same as in Gotham with probability 0.89. The joint distribution of weather is given as

X − Gotham

Y − Metropolis

X ∈ {sunny, cloudy}p(X) = ⎧⎩(1)/(2), (1)/(2)⎫⎭; Y ∈ {sunny, cloudy};

p(Y|X)

sunny

cloudy

sunny

0.89

0.11

cloudy

0.11

0.89

p(X, Y) = p(X)⋅p(Y|X);

p(X, Y)

sunny

cloudy

sunny

0.445

0.055

cloudy

0.055

0.445

Assume that we wish to transmit 100 days of weather information to the National Weather Service headquarters in Washington. We could send all the 100 bits of the weather in both places, making 200 bits in all. If we decided to compress the information independently, we would still need 100⋅H(0.5) = 100 bits of information from each place, for a total of 200 bits. If, instead, we use Slepian-Wolf encoding, we need only H(X, Y)⋅100 = 150 bits total.

Сепак овде се поставува прашање со каков код ќе можеш да ја постигнеш оваа брзина. Тука само се кажува дека тоа е минималната брзина со кој можеш да ги пренесеш информациите за времето за овие два извори.

H(Y|X) = ∑(x, y)p(x, y)log(p(y|x)) = 0.89⋅log⎛⎝(1)/(0.89)⎞⎠ + 0.11⋅log⎛⎝(1)/(0.11)⎞⎠

0.89⋅log2⎛⎝(1)/(0.89)⎞⎠ + 0.11⋅log2⎛⎝(1)/(0.11)⎞⎠ = 0.5

H(X, Y) = H(X) + H(Y|X) = 1 + 0.5 = 1.5

- Ако X и Y се независни тогаш нема спас мора да пратиш 200 бити

p(Y|X)

sunny

cloudy

sunny

0.5

0.5

cloudy

0.5

0.5

H(X, Y) = H(X) + H(Y) = 1 + 1 = 2

или

H(X, Y) = 4⋅(1)/(2)⋅log2 = 2

Мене ми е апсолутно логично корелирани извори да може да се пренесат со помала брзини од некорелирани!!!

Consider the following joint distribution

p(u, v)

0

1

0

(1)/(3)

(1)/(3)

1

0

(1)/(3)

H(U, V) = 3⋅(1)/(3)⋅log3 = 1.58

In this case, the total rate required for the transmission of this source is H(U) + H(U|V) = log3 = 1.58 bits rather than the 2 bits that would be needed if the sources were transmitted independently without Slepian-Wolf encoding.

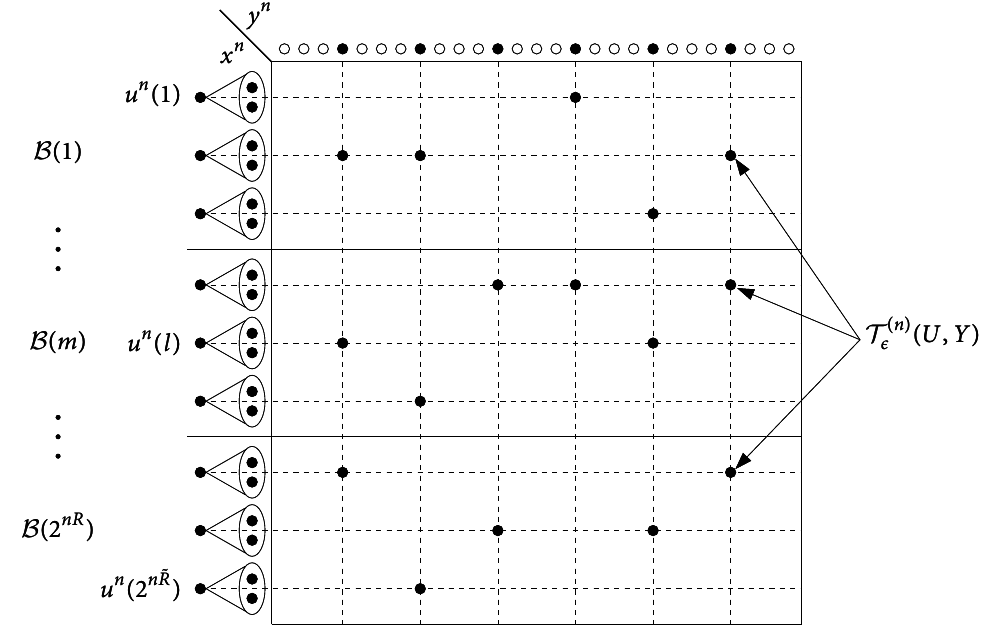

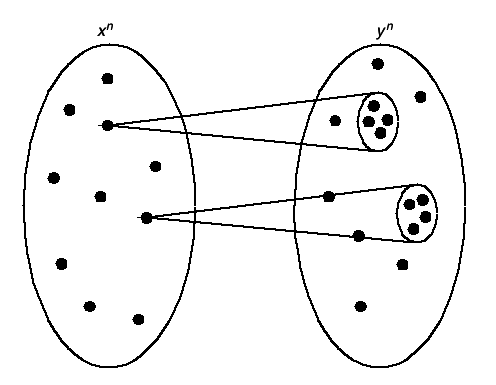

1.4.1 Achievability of the Slepian-Wolf Theorem (random bins)

We now prove the achievability of the rates in the Slepian-Wolf theorem. Before we proceed to the proof,

we introduce a new coding procedure using random bins.

The essential idea of random bins is very similar to hash functions: We choose a large random index for each source sequence. If the set of typical source sequences is small enough (or equivalently, the range of the hash function is large enough), then with high probability, different source sequences have different indices, and we can recover the source sequence from the index.

From Wikipedia

A hash function is any function that maps data of arbitrary length to data of a fixed length. The values returned by a hash function are called hash values, hash codes, hash sums, checksums or simply hashes.

Let us consider the application of this idea to the encoding of a single source. In Chapter 3 the method that we consider was to index all elements of the typical set and not bother about elements outside the typical set. We will now describe the random binning procedure, which indexes all sequences but rejects untypical sequences at a later stage.

Consider the following procedure: For each sequence Xn, draw an index at random from {1, 2, ...2nR}. The set of sequences Xn which have the same index are said to form a bin, since this can be viewed as first laying down a row of bins and then throwing the Xn’s at random into the bins. For decoding the source frоm the bin index, we look for a typical Xn sequence in the bin. If there is one an only one typical Xn sequence in the bin, we declare it to be the estimate X̂n of the source; otherwise, an error is declared.

The above procedure defines a source code. To analyze the probability of error for this code, we will now divide the Xn sequences into two types, typical sequences and non-typical sequences. If the source sequence is typical, the bin corresponding to this source sequence will contain at least one typical sequence (the source sequence itself). Hence there will be an error only if there is more than one typical sequence in the bin. If the source sequence is non-typical, there will aways be an error. But if the number of bins is much larger than the number of typical sequences, the probability that there is more than one typical sequence in a bin is very small, and hence the probability that a typical sequence will result in an error is very small.

Formally, let f(Xn) be the bin index corresponding to Xn. Call the decoding function g. The probability of error (averaged over the random choice of code f) is:

P(g(f(X)) ≠ X) ≤ P(X ≠ A(n)ϵ) + ⎲⎳xP(∃x’ ≠ x:x’ ∈ A(n)ϵ, f(x’) = f(x))p(x)

≤ ϵ + ⎲⎳x ⎲⎳\oversetx’x’ ≠ xP(f(x’) = f(x))p(x) ≤ ϵ + ⎲⎳x ⎲⎳x’ ∈ A(n)ϵ2 − nRp(x) = ϵ + ⎲⎳x’ ∈ A(n)ϵ2 − nR ⎲⎳xp(x) ≤ ϵ + ⎲⎳x’ ∈ A(n)ϵ2 − nR ≤ ϵ + 2n(H(X) + ϵ)2 − nR ≤ 2ϵ

if \mathchoiceR > H(X) + ϵR > H(X) + ϵR > H(X) + ϵR > H(X) + ϵ and n is sufficiently large. Hence, if the rate of the code is greater that the entropy, the probability of error is arbitrarily small and the code achieves the same results as the code described in Chapter 3.