\sloppy

Paper- T.Cover, Capacity theorem for the Relay Channels

Abstract- Relay channel consist of an input x1, a relay output y1, a channel output y , and a relay sender x2 (whose transmission is allowed to depend on the past symbols y1). The dependence of the received symbols upon the inputs is given by p(y, y1|x1, x2). The channel is assumed to be memoryless. In this paper following capacity theorems are proved.

1. If y is a degraded form of y1, then

C = maxp(x1, x2)min{I(X1, X2;Y), I(X1;Y1|X2)}.

2. If

y1 is degraded form of

y, then

C = maxp(x1)maxx2{I(X1;Y|x2).

3. If

p(y, y1|x1, x2) is an

arbitrary relay channel with feedback from

(y, y1) to both

x1 and

x2, then

C = maxp(x1, x2)min{I(X1, X2;Y), I(X1; Y, Y1|X2)}

4. For a general relay channel

\mathchoiceC ≤ maxp(x1, x2)minI(X1, X2;Y), I(X1;Y, Y1|X2)C ≤ maxp(x1, x2)minI(X1, X2;Y), I(X1;Y, Y1|X2)C ≤ maxp(x1, x2)minI(X1, X2;Y), I(X1;Y, Y1|X2)C ≤ maxp(x1, x2)minI(X1, X2;Y), I(X1;Y, Y1|X2)

Од овој израз може да сконташ дека капацитетот на генерален рејлиев канал е аналоген на max-flow min-cut алгоритамот. Го сконтав откако ја помина вглавата 15.7 од EIT.

Во EIT книгата наместо X2 користат X1, а наместо X1 користат X. Таму кпацитетот на генерален рејлиев канал е:

C ≤ supp(x, x1)min{I(X, X1;Y), I(X;Y, Y1|X1)}

1 Introduction

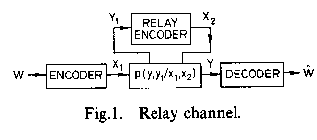

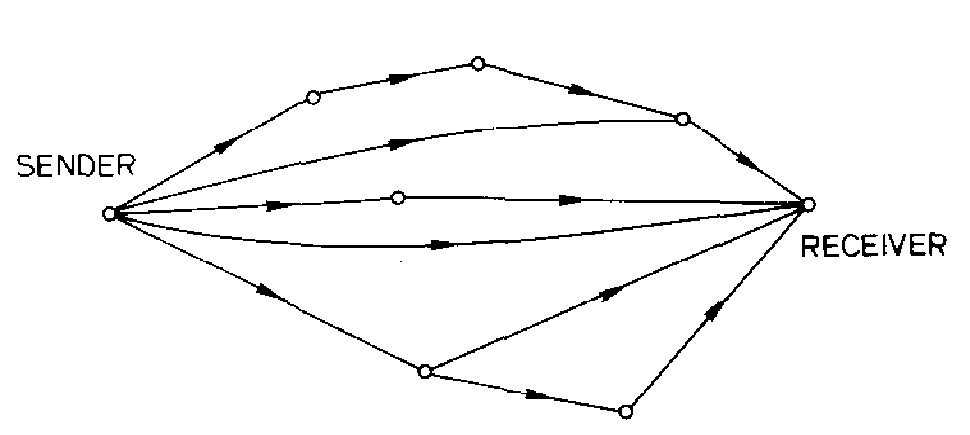

THE Discrete memoryless relay channel denoted as

(X1x X2 , p(y, y1|x1, x2), YxY2) consist of four finite sets:

X\mathnormal1, X\mathnormal2, Y, Y\mathnormal1 and a collection of probability distributions

p(., .|x1, x2) on

YxY\mathnormal1one for each

(x1, x2) ∈ X\mathnormal1xX\mathnormal2. The interpretation is that

x1 is the input to the channel and

y is the output,

y1 is the relay output and

x2 is the input symbol chosen by the relay as shown on

1↑. The channel is memoryless in the sense that the current received symbols

(Yi2, Yi3) and the message and past symbols

(m, Xi−11, Xi−12, Yi−12, Yi−13) are conditionally independent given the current transmitted symbols

(Xi1, Xi2). The problem is to find the capacity of the channel between the sender

x1 and the receiver

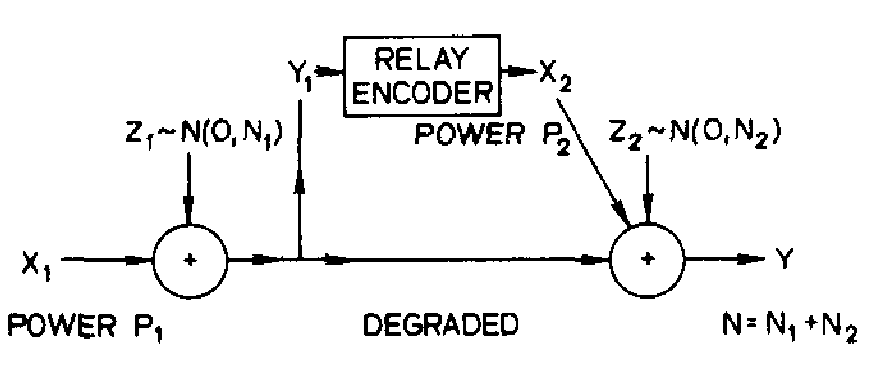

y. The model that motivates our investigation of degraded relay channels is perhaps best illustrated in the Gaussian case (

4↓). Suppose the transmitter

x1 has power

P1 and the relay transmitter has power

P2. The relay receiver

\mathchoicey1y1y1y1 sees

\mathchoicex1 + z1x1 + z1x1 + z1x1 + z1,

z1 = N(0, N1). The intended receiver

y sees the sum of the relay signal

x2 and a corrupted version of y1, i.e.,

y = x2 + y1 + z2 z2 ~ N(0, N2).

How should x2 use his knowledge of x1 (obtained through y1) to help y understand x1?

За да сватиш зошто се вакви ознаките треба да го видиш чланакот од van der Meulen

We shell show that capacity is given with

C* = max0 ≤ α ≤ 1min⎧⎩C⎛⎝⎛⎝(P1 + P2 + 2√( α⋅P1P2))/(N1 + N2)⎞⎠, C⎛⎝(αP)/(N1)⎞⎠⎞⎠⎫⎭

where C(x) = (1)/(2)log(1 + x). An interpretation consistent with achieving C* in this example is that y1 discovers x1 perfectly, then x2 and x1 cooperate coherently in the next block to resolve the remaining y uncertainty about x1.

Многу убаво кажано H(x1|y) неизвесност колку добро го знаеш x1 ако го знеш y

However, in this next block, fresh x1 information is superimposed, thus resulting in a steady-state resolution of the past uncertainty and infusion of new information.

An (M, n) code for the relay channel consist of a set of integers:

a set of relay functions

{fi}ni = 1 such that:

Дефиницијата на симболот што се испраќа одговара на дефиницијата на стратегиите во релето во чланакот од van der Meulen. Со други зборови овде сака да каже дека сигналот што го испраќа релето во i-от тајмслот зависи од (i − 1)-те претходно примените сигнали во релето.

For generality, the encoding functions x1(⋅), fi(⋅) and decoding function g(⋅) are allowed to be stochastic functions.

Note that the allowed relay encoding functions actually form part of the definition o the relay channel because of the non-anticipatory relay condition. The relay channel input x2i is allowed to depend only on the past \mathchoiceyi1 = (y11, yi1, …, y1 i − 1)yi1 = (y11, yi1, …, y1 i − 1)yi1 = (y11, yi1, …, y1 i − 1)yi1 = (y11, yi1, …, y1 i − 1). The channel is memory-less in the sense that (yi, y1 i) depends on the past (xi1, xi2) only through the current transmitted symbols (x1 i, x2 i). Thus, for any choice p(w), w ∈ M, and code choice x1: W → X\mathnormaln\mathnormal1 and relay functions {fi}ni = 1, the joint probability mass function on W\mathnormalxX\mathnormaln\mathnormal1\mathnormalxX\mathnormaln\mathnormal2\mathnormalxY\mathnormaln\mathnormalxY\mathnormaln\mathnormal1 is given by:

n = 2

p(w, x1, x2, y, y1) = p(w)∏2i = 1p(x1i|w)p(x2i|y11, y12, …, y1i − 1)⋅p(yi, y1i|x1i, x2i)

= p(w)⋅p(x11|w)p(x21)⋅p(y1, y11|x11, x21)p(x12|w)p(x22|y11)⋅p(y2, y12|x12, x22)

Сака да каже: X1 зависи од W, X2 зависи од Y1 a (Y, Y1) зависат од (X1, X2)

If the message

w ∈ W is sent, let

Потсетување од EIT на Cover

λ(w) = Pr(g(Yn) ≠ w|Xn = xn(w)) = ⎲⎳ynP(yn|xn(w))⋅I(g(yn) ≠ w)

denote the conditional probability of error. We define the average probability of error of the code to be:

The probability of error is calculated under a special distribution - uniform distribution over the codewords w ∈ [1, M]. Finally let:

be the maximal probability of error for the (M, n) code.

The rate R of an (M, n) code is defined by:

The rate R is said to be achievable by a relay channel if, for any ϵ > 0 and for all n sufficiently large, there exist an (M, n)code with

R − ϵ ≤ (1)/(n)⋅log(M); n⋅(R − ϵ) ≤ log(M); 2n⋅(R − ϵ) ≤ M

such as that λn ≤ ϵ. The capacity C of the relay channel is the supremum of the set of achievable rates.

мое прво резонирање:

log(M) ≥ nR; R ≤ (1)/(n)⋅log(M)

(This is bit different definition of achievability compared to the definition in the T. Cover textbook.)

Кога го преведував за докторатот, ми стана логичен на крај изразот

9↑. Се сетив на noisy typewriter. Таму дактилографката ја греши секоја втора буква.

M = 28 а

R = log(14)

M ≥ 2log(14) = 14. Значи ако имаш безгрешна дактилографка тогаш M = 28 = 2log(|X|) = 2log(28).

We now consider a family of relay channels in which the relay receiver y1 is better than the ultimate receiver y in the sense defined below.

Definition(degraded): (current)

The relay channel

(X\mathnormal1xX\mathnormal2, \mathnormalp(y, y1|x1, x2), YxY\mathnormal1) is said to be

degraded if

p(y, y1|x1, x2) can be written in the form

Equivalently, we see by inspection of

10↑ that a relay channel is degraded if

p(y|x1, x2, y1) = p(y|x2, y1) i.e.,

\mathchoiceX1 → (X2, Y1) → YX1 → (X2, Y1) → YX1 → (X2, Y1) → YX1 → (X2, Y1) → Y form Markov chain

.

Дефиницијата сака да каже дека за дадени x2 i y1, y не зависи од x1.

p(X, Z|Y) = (p(X, Y, Z))/(P(Y)) = (p(X, Y)⋅p(Z|X, Y))/(P(Y)) = (p(X, Y)⋅p(Z|Y))/(P(Y)) = p(X|Y)⋅p(Z|Y) (ова ако добро се сеќавам е дефинција за Markov chain X → Y → Z)

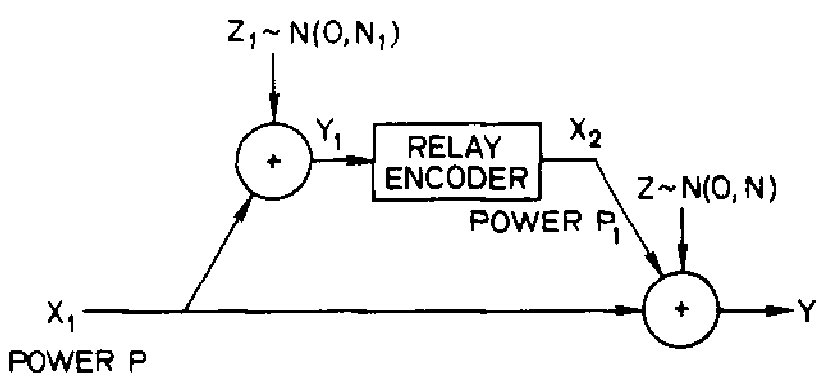

The previously discussed Gaussian channel is therefore degraded. For the reader familiar with the definition of the degraded broadcast channel, we observe that a degraded relay channel can be looked at as a family of physically degraded broadcast channels indexed by x2. A weaker form of degradation (stochastic) can be defined for relay channels, but Theorem 1 below then becomes only an inner bound to the capacity. The case in which the relay y1 is worse than y is less interesting (except for the converse) and is defined as follows.

Сака да каже дека во нормални услови сигналот во релето y1 е подобар од сигналот во приемникот. За обратно деградиран канал сигналот во приемникот е подобар од сигналот во релето.

Definition: (reversely degraded):

The relay channel

(X\mathnormal1xX\mathnormal2, \mathnormalp(y, y1|x1, x2), YxY\mathnormal1) is

reversely degraded if

p(y, y1|x1, x2) can be written in following form

The main contribution of this paper is summarized by the following three theorems.

Theorem 1:(degraded relay channel)

The capacity

C of the

degraded relay channel is given

where supremum is over all joint distributions p(x1, x2) on (X\mathnormal1, X\mathnormal2).

Theorem 2:(reversely degraded relay channel)

The capacity

C0 of the

reversely degraded relay channel is given by

Theorem 2 has a simple interpretation. Since the relay y1 sees a corrupted version of what y sees, x2 can contribute no new information to y - thus x2 is set constantly at the symbol that „opens“ the channel for the transmission of x1 directly to y at rate I(X1;Y|x2). The converse proves that one can do no better.

Theorem 1 has a more interesting interpretation. The first term in the brackets in

12↑ sugest that a rate

I(X1, X2;Y) can be achieved where

p(x1, x2) is arbitrary. However, this rate can only be achieved by complete cooperation of

x1 and

x2.

To set up this cooperation x2 must know x1. Thus the

x1 rate of transmission should be less than

I(X1;Y1|X2). (How they cooperate given these two conditions will be left to the proof.) Finally, both constraints lead to the minimum characterization in

12↑.

I(X1, X2;Y)

= \cancelto0 − x2can contribute no new information to y I(X2;Y1) + I(X1;Y1|X2) = I(X1;Y1) + I(X2;Y1|X1); I(X1, X2;Y) ≥ I(X1;Y1|X2)

I(X2;Y1|X1)

= H(X2|X1) − H(Y1|X1, X2) = − H(Y1|X1)

Сака да каже ако x2 може да придонесе со нова информација на y тогаш \strikeout off\uuline off\uwave offI(X1, X2;Y) ≥ I(X1;Y1|X2) ако не придонесува нова информација тогаш\uuline default\uwave default \strikeout off\uuline off\uwave offI(X1, X2;Y)=I(X1;Y1|X2)

\strikeout off\uuline off\uwave offКоја е поентата тогаш да зема минимум?

18.06.14

I(X1, X2;Y) = \overset(*)I(X2;Y1) + I(X1;Y1|X2) = I(X1;Y1) + I(X2;Y1|X1); I(X1, X2;Y) ≥ I(X1;Y1|X2)

(*) - Мислам дека овој член е 0 зошто X2 е функција од Y1.

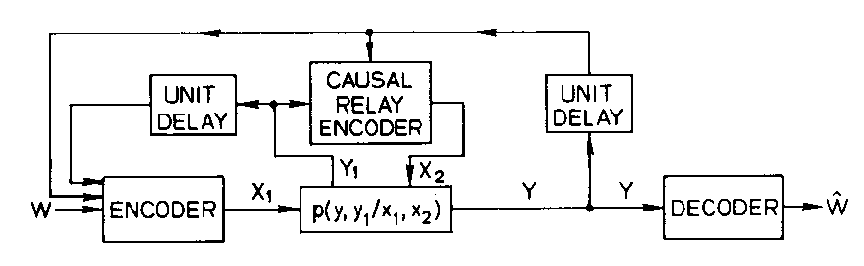

The obvious notion of an arbitrary relay channel with causal feedback (from both y and y1 to x1 and x2) will be formalized in Section V. The following theorem can then be proved.

Theorem 3:(arbitrary channel)

The Capacity of

CFB of an

arbitrary relay channel with feedback is given by:

Note that CFB is the same as C except that Y1 is replaced by (Y, Y1) in I(X1;Y1|X2). The reason is that the feedback changes an arbitrary relay channel into a degraded relay channel in which x1 transmits information to x2 by way of y1 and y. Clearly Y is a degraded form of (Y, Y1).

Theorem 2 is included for reasons of completeness, but it can be shown to follow from a chain of remarks in

[1] under slightly stronger conditions. Specifically in

[1] .

R1 = C1(1, 2),

\mathchoiceLema4.1.Lema4.1.Lema4.1.Lema4.1. C1(1, 3) ≤ C1[1, (2, 3)] with equality if both

P(y2, y3|x1x2x3) = P(y2|x1, x2, x3)P(y3|x1, x2, x3)

(i.e., the outputs y2 and y3 are conditionally independent given the inputs x1x2 and x3, and \mathchoiceC1(1, 2) = 0C1(1, 2) = 0C1(1, 2) = 0C1(1, 2) = 0.

\strikeout off\uuline off\uwave off\mathchoiceTheorem 10.1.Theorem 10.1.Theorem 10.1.Theorem 10.1. \uuline default\uwave defaultFor any n a (1, 3)-code (n, M, λ) satisfies

logM ≤ (n⋅U + 1)/(1 − λ)

\mathchoiceTheorem7.1.Theorem7.1.Theorem7.1.Theorem7.1.Let

ϵ > 0 and

0 < λ ≤ 1 be arbitrary. For any d.m. three-terminal channel and all

n sufficiently large there exists a

(1, 3) -code

(n, M, λ) with

M > 2n[C(1, 3) − ϵ].

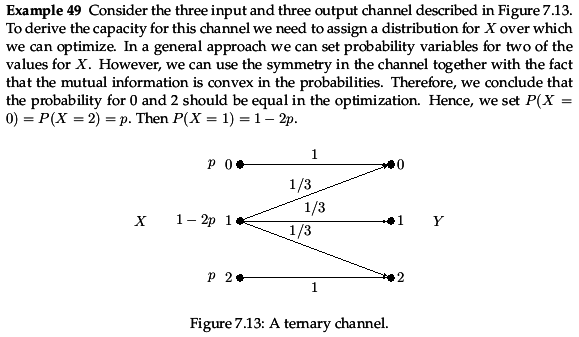

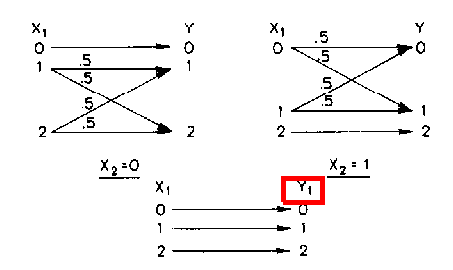

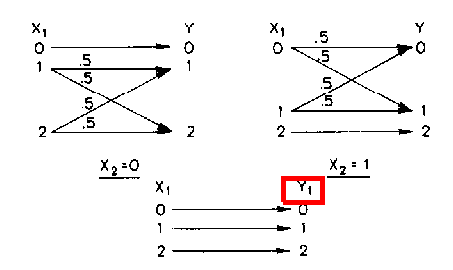

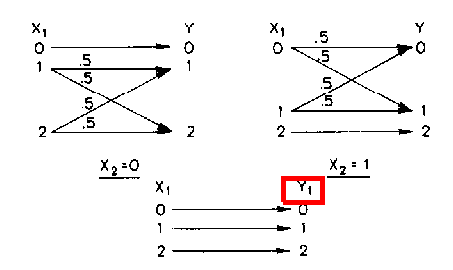

Before proving the theorems, we apply the result

12↑ to a

simple example introduced by Sato

[4]. The channel as shown in

2↑ has

X\mathnormal1 = Y = Y\mathnormal1 = \mathnormal\mathnormal\mathnormal{0, 1, 2},

X\mathnormal2 = \mathnormal{0, 1}, and the conditional probability

p(y, y1|x1, x2) satisfies

10↑. Specifically, the channel operation is:

and

p(y|y1, x2 = 0) =

y1 = 0

y1 = 1

y1 = 2

\overset

y = 0

y = 1

y = 2

⎡⎢⎢⎢⎣

1

0

0

0

0.5

0.5

0

0.5

0.5

⎤⎥⎥⎥⎦

Sato calculated a cooperative upper bound to the channel capacity of the channel, \mathchoiceRUG = maxp(x1, x2)I(X1, X2;Y) = 1.170RUG = maxp(x1, x2)I(X1, X2;Y) = 1.170RUG = maxp(x1, x2)I(X1, X2;Y) = 1.170RUG = maxp(x1, x2)I(X1, X2;Y) = 1.170.

By restricting the relay encoding functions to

i)

x2i = f(y11, ..., y1i − 1) = f(y1i − 1)

1 < i ≤ n,

ii)

x2i = f(y11, ..., y1i − 1) = f((y1i − 2, y1i − 1)

1 < i ≤ n.

Sato calculated two lower bounds to the capacity of the channel:

i)

R1 = 1.0437

ii)

R2 = 1.0549

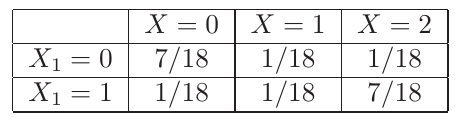

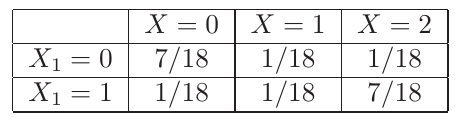

From Theorem 1 we obtain the true capacity C = 1.161878. The optimal joint distribution on X\mathnormal1xX\mathnormal2 is given in Table 1.

We shall see that instead of letting the encoding functions of the relay depend only on a finite number of previous

y1 transmissions, we can achieve

C by allowing

block Markovian dependence of x2 and y1 in a manner similar to

[6].

Од El Gamal NIT:

Since the relay codeword transmitted in a block depends statistically on the message transmited in the previous block, we reffer to this scheme as block Markov coding (BMC)

Подолу се обидите да го добијам C = 1.161878. Го добив во последниот обид 5↓. Пресметувам само I(X1, X2;Y) но не и I(X1;Y1|X2). Исто така сеуште не успеав да ја добијам сам оптималната дистрибуција од чланакот но ја добив 5↓ дистрибуцијата од NIT Lecture Notes Relay with limmited lookahead (која претходно ја проверував во 5↓). Тоа е таа дистрибуција од која Cover го добива RUG = maxp(x1, x2)I(X1, X2;Y).

02.07.14

Сега ми текна дека дистрибуцијата од чланакот ја имаат добиено со Lagrange Multipliers. Така немам пробано. Само легитимен ми е начинот со извод и не знам зошто би пробувал на дру начин.

04.07.14

Ова од 02.07 мислам дека не треба да се проверува. C = 1.161878 се добива со рестрикција на кодирачката функција да зависи од еден претходен симбол но не и порака. Не знам како се прави сега тоа. Веројатно треба да работам со n-битни низи. Засега ќе го оставам на on-hold.

Не, не, не!!! C = 1.161878 е true capacity изгледа треба да го пресметам I(X1;Y1Y|X2). Јас цело време се мотав пресметувајќи го I(X1;Y1|X2) а тоа е за деградиран релеен канал.

C = maxp(x1, x2)I(X1, X2;Y); I(X1, X2;Y) = H(Y) − H(Y|X1, X2) = H(X1, X2) − H(X1, X2|Y)H(Y|X2 = 0) = ∑xp(x1)⋅H(Y|X2 = 0, X1 = x1) = p(X1 = 0)⋅H(Y|X2 = 0, X1 = 0) + p(X1 = 1)⋅H(Y|X2 = 0, X1 = 1) + p(X1 = 2)⋅H(Y|X2 = 0, X1 = 2)

H(Y|X2 = 0, X1) = ∑x1p(x1)⋅H(Y|X2 = 0, X1 = x1) = p(X1 = 0)⋅0 + p(x1 = 1)⋅[ − p(Y = 1|X2 = 0, X1 = 1)⋅log(p(Y = 1|X2 = 0, X1 = 1)) − p(Y = 2|X2 = 0, X1 = 1)⋅log(p(Y = 2|X2 = 0, X1 = 1))] + p(X1 = 2)⋅[ − p(Y = 1|X2 = 0, X1 = 2)⋅log(p(Y = 1|X2 = 0, X1 = 2) − p(Y = 2|X2 = 0, X1 = 2)⋅log(p(Y = 2|X2 = 0, X1 = 2))] = 0 + p(X1 = 1)⋅1 + p(X1 = 2)⋅1

H(Y|X2 = 1, X1) = ∑x1p(x1)⋅H(Y|X2 = 1, X1 = x1) = p(X1 = 2)⋅0 + p(X1 = 0)⋅( − p(Y = 0|X2 = 1, X1 = 0)⋅log(p(Y = 0|X2 = 1, X1 = 0) − p(Y = 1|X2 = 1, X1 = 0)⋅log(p(Y = 1|X2 = 1, X1 = 0)) + p(X1 = 1)⋅( − p(Y = 1|X2 = 1, X1 = 1)⋅log(p(Y = 1|X2 = 1, X1 = 1) − p(Y = 0|X2 = 1, X1 = 1)⋅log(p(Y = 0|X2 = 1, X1 = 1)) = 0 + p(X1 = 0)⋅1 + p(X1 = 1)⋅1

\strikeout off\uuline off\uwave offH(Y|X1, X2) = ∑x2p(x2)⋅H(Y|X1, X2 = x2) = p(X2 = 0)⋅H(Y|X2 = 0, X1) + p(X2 = 1)⋅H(Y|X2 = 1, X1) =

= \mathchoicep(X2 = 0)⋅(p(X1 = 1) + p(X1 = 2)) + p(X2 = 1)⋅(p(X1 = 0) + p(X1 = 1))p(X2 = 0)⋅(p(X1 = 1) + p(X1 = 2)) + p(X2 = 1)⋅(p(X1 = 0) + p(X1 = 1))p(X2 = 0)⋅(p(X1 = 1) + p(X1 = 2)) + p(X2 = 1)⋅(p(X1 = 0) + p(X1 = 1))p(X2 = 0)⋅(p(X1 = 1) + p(X1 = 2)) + p(X2 = 1)⋅(p(X1 = 0) + p(X1 = 1))

\strikeout off\uuline off\uwave offI(X1, X2;Y) = H(Y) − (p(X2 = 0)⋅p(X1 = 1) + p(X2 = 0)⋅p(X1 = 2) + p(X2 = 1)⋅p(X1 = 0) + p(X2 = 1)⋅p(X1 = 1))

\strikeout off\uuline off\uwave offC = log(3) − H(Y|X1, X2) = log(3) − (p(X2 = 0)⋅p(X1 = 1) + p(X2 = 0)⋅p(X1 = 2) + p(X2 = 1)⋅p(X1 = 0) + p(X2 = 1)⋅p(X1 = 1)) = \normalcolor1.2935825010

\strikeout off\uuline off\uwave off

Ова последново во е согласно веројатностите дадени во чланакот

\strikeout off\uuline off\uwave off

x2 = 0

x2 = 1

\overset

x1 = 0

x2 = 1

x3 = 2

⎡⎢⎣

0.35431

0.072845

0.072845

0.072845

0.072845

0.35431

⎤⎥⎦

\strikeout off\uuline off\uwave offp(X2 = 0)⋅p(X1 = 1) + p(X2 = 0)⋅p(X1 = 2) + p(X2 = 1)⋅p(X1 = 0) + p(X2 = 1)⋅p(X1 = 1) = p(x2 = 0, x1 = 1) + p(02) + p(10) + p(11) = 0.2913800000

0.072845 + 0.072845 + 0.072845 + 0.072845 = 0.29138

\strikeout off\uuline off\uwave off

H(Y|X1, X2) = ∑x1, x2p(x1, x2)⋅H(Y|X2 = x2, X1 = x1) = p(0, 0)H(Y|00) + p(01)H(Y|01) + p(0, 2)H(Y|02) + p(1, 0)H(Y|10) + p(11)H(Y|11) + p(1, 2)H(Y|12)

H(Y|00) = H(Y|X2 = 0, X1 = 0) = 0; H(Y|01) = 1; H(Y|02) = 1H(Y|10) = H(Y|X2 = 1, X1 = 0) = 1; H(Y|11) = 1; H(Y|12) = 0

\strikeout off\uuline off\uwave offH(Y|X1, X2) = ∑x1, x2p(x1, x2)⋅H(Y|X2 = x2, X1 = x1) = 0 + 0.072845⋅1 + 0.072845⋅1 + 0.072845⋅1 + 0.072845⋅1 + 0 = 0.2913800000

log2(3) − H(Y|X1,X2) = 1.585 − 0.2913800000 = 1.29362

И на овој начин пак го добивам истиот капацитет

Мојот резултат

5↑ не одговара на резултатот од чланакот. Мислам дека лошо го рачунам

H(Y) !?

Дали можеби треба вака:

log3(3) − H(Y|X1,X2) = 1 − 0.2913800000 = 0.70862 ternary symbols/transmission

(0.70862)/(log3(2)) = 1.123136127 bits/transmission

x = logm(y) mx = y ⁄ log2(...) x⋅log2(m) = log2(y) x = (log2(y))/(log2(m)) logm(y) = (log2(y))/(log2(m))

x = log2(y) 2x = y ⁄ logm(...) x⋅logm(2) = logm(y) x = (logm(y))/(logm(2)) log2(y) = (logm(y))/(logm(2))

Ова е втор обид откако го поминав чланакот од van der Meulen

p(y|x1, x2 = 0) =

x1 = 0

x1 = 1

x1 = 2

\overset

y = 0

y = 1

y = 2

⎡⎢⎢⎢⎣

1

0

0

0

0.5

0.5

0

0.5

0.5

⎤⎥⎥⎥⎦

p(y|x1, x2 = 1) =

x1 = 0

x1 = 1

x1 = 2

\overset

y = 0

y = 1

y = 2

⎡⎢⎢⎢⎣

0.5

0.5

0

0.5

0.5

0

0

0

1

⎤⎥⎥⎥⎦

y1 ≡ x1

I(X1, X2;Y) = H(Y) − H(Y|X1, X2) = H(X1, X2) − H(X1, X2|Y)

H(Y|X1X2) = P(X2 = 0)⋅H(Y|X1X2 = 0) + P(X2 = 1)⋅H(Y|X1X2 = 1)

H(Y|X1X2 = 0) = p(X1 = 0)⋅H(Y|X1 = 0, X2 = 0) + p(X1 = 1)⋅H(Y|X1 = 1, X2 = 0) + p(X1 = 2)⋅H(Y|X1 = 2, X2 = 0) = p(X1 = 1) + p(X1 = 2)

H(Y|X1X2 = 1) = p(X1 = 0)⋅H(Y|X1 = 0, X2 = 1) + p(X1 = 1)⋅H(Y|X1 = 1, X2 = 1) + p(X1 = 2)⋅H(Y|X1 = 2, X2 = 1) = p(X1 = 0) + p(X1 = 1)

\strikeout off\uuline off\uwave offH(Y|X1X2) = P(X2 = 0)⋅H(Y|X1X2 = 0) + P(X2 = 1)⋅H(Y|X1X2 = 1) = P(X2 = 0)[p(X1 = 1) + p(X1 = 2)] + P(X2 = 1)⋅[p(X1 = 0) + p(X1 = 1)]

I(X1, X2;Y) = H(Y) − H(Y|X1, X2) = H(Y) − p(X2 = 0)⋅[p(X1 = 1) + p(X1 = 2)] − P(X2 = 1)⋅[p(X1 = 0) + p(X1 = 1)]

= H(Y) − p(X1 = 1) − (X2 = 0)⋅p(X1 = 2) + P(X2 = 1)⋅p(X1 = 0)

log2(3) = 1.585 − − 1 ⁄ 3 − 1 ⁄ 2⋅1 ⁄ 3 − 1 ⁄ 2⋅1 ⁄ 3 = 1.585 − (2)/(3) = 1.585 − 0.67 = 0.918 ternary symbols/transmission

(0.918)/(log2[3]) = 0.5791 bits/transmission

\strikeout off\uuline off\uwave offI(X1;Y1|X2) = H(Y1) − H(Y1|X1X2) = log2(3) зошто x1 ≡ y1

I(X1, X2;Y) ≥ I(X1;Y1|X2)

Ако одам со оптималните вредности за веројатноста од чланакот

\strikeout off\uuline off\uwave off

x2 = 0

x2 = 1

\overset

x1 = 0

x2 = 1

x3 = 2

⎡⎢⎣

0.35431

0.072845

0.072845

0.072845

0.072845

0.35431

⎤⎥⎦

I(X1, X2;Y)

= H(Y) − H(Y|X1, X2) = H(Y) − (X2 = 0)⋅[p(X1 = 1) + p(X1 = 2)] − P(X2 = 1)⋅[p(X1 = 0) + p(X1 = 1)] =

\mathchoiceH(Y) − p(X2 = 0)⋅p(X1 = 1) − p(X2 = 0)⋅p(X1 = 2) − p(X2 = 1)⋅p(X1 = 0) − p(X2 = 1)⋅p(X1 = 1) = H(Y) − p(X2 = 0)⋅p(X1 = 1) − p(X2 = 0)⋅p(X1 = 2) − p(X2 = 1)⋅p(X1 = 0) − p(X2 = 1)⋅p(X1 = 1) = H(Y) − p(X2 = 0)⋅p(X1 = 1) − p(X2 = 0)⋅p(X1 = 2) − p(X2 = 1)⋅p(X1 = 0) − p(X2 = 1)⋅p(X1 = 1) = H(Y) − p(X2 = 0)⋅p(X1 = 1) − p(X2 = 0)⋅p(X1 = 2) − p(X2 = 1)⋅p(X1 = 0) − p(X2 = 1)⋅p(X1 = 1) =

log2(3) − p(01) − p(02) − p(10) − p(11) = log2(3) − 4⋅0.072845 = 0.585 − 0.2913800000 = 1.29362

p(10) + p(20) + p(01) + p(11)

Сега сакам да го пресметам

H(Y) како што треба:

p(Y = 0|X2 = 0) = p(X1 = 0) = p10; p(Y = 1|X2 = 0) = (p(X1 = 1))/(2) + (p(X1 = 2))/(2) = (p11)/(2) + (p12)/(2); p(Y = 2|X2 = 0) = (p11)/(2) + (p12)/(2) пази индексот „12“ одговара на x1 = 2

p(Y = 0|X2 = 1) = (p(X1 = 0))/(2) + (p(X1 = 1))/(2) = (p10)/(2) + (p11)/(2); p(Y = 1|X2 = 1) = (p(X1 = 0))/(2) + (p(X1 = 1))/(2) = (p10)/(2) + (p11)/(2); p(Y = 2|X2 = 1) = p(X1 = 2) = p12;

p(Y = 0) = p20⋅p10 + p21⎛⎝(p10 + p11)/(2)⎞⎠; p(Y = 1) = p20⋅(p11 + p12)/(2) + p21⋅(p10 + p12)/(2); p(Y = 2) = p20⋅(p11 + p12)/(2) + p21⋅p12

Ова во квадратчево не е добро требa да биде p11.

А̀ко земам дека

p20 = p21 = (1)/(2)

p(Y = 0) = (p10)/(2) + (1)/(2)⋅⎛⎝(p10 + p11)/(2)⎞⎠ = (3p10)/(4) + (p11)/(4); p(Y = 1) = (1)/(2)⋅(p11 + p12)/(2) + (1)/(2)⋅(p10 + p11)/(2) = (p10)/(4) + (p11)/(2) + (p12)/(4); p(Y = 2) = (1)/(2)⋅(p11 + p12)/(2) + (p12)/(2) = (p11)/(4) + (3⋅p12)/(4)

− H(Y) = p(Y = 0)⋅log(p(Y = 0)) + p(Y = 1)⋅log(p(Y = 1)) + p(Y = 2)⋅log(p(Y = 2))

H(Y) = H⎛⎝(3⋅p10 + p11)/(4), (p10 + 2⋅p11 + p12)/(4), (p11 + 3⋅p12)/(4)⎞⎠

− H(Y) = (3⋅p10 + p11)/(4)⋅log⎛⎝(3⋅p10 + p11)/(4)⎞⎠ + (p10 + 2⋅p11 + p12)/(4)⋅log⎛⎝(p10 + 2⋅p11 + p12)/(4)⎞⎠ + (p11 + 3⋅p12)/(4)⋅log⎛⎝(p11 + 3⋅p12)/(4)⎞⎠

\strikeout off\uuline off\uwave offH(Y) = (3⋅p10 + p11)/(4)⋅log⎛⎝(3⋅p10 + p11)/(4)⎞⎠ + (p10 + 2⋅p11 + 1 − p10 − p11)/(4)⋅log⎛⎝(p10 + 2⋅p11 + 1 − p10 − p11)/(4)⎞⎠ + (p11 + 3⋅(1 − p10 − p11))/(4)⋅log⎛⎝(p11 + 3⋅(1 − p10 − p11))/(4)⎞⎠

H(Y) = (3⋅p10 + p11)/(4)⋅log⎛⎝(3⋅p10 + p11)/(4)⎞⎠ + (p11 + 1)/(4)⋅log⎛⎝(p11 + 1)/(4)⎞⎠ + (p11 + 3 − 3p10 − 3p11)/(4)⋅log⎛⎝(p11 + 3 − 3p10 − 3p11)/(4)⎞⎠

H(Y) = (3⋅p10 + p11)/(4)⋅log⎛⎝(3⋅p10 + p11)/(4)⎞⎠ + (p11 + 1)/(4)⋅log⎛⎝(p11 + 1)/(4)⎞⎠ + (3 − 3⋅p10 − 2⋅p11)/(4)⋅log⎛⎝(3 − 3⋅p10 − 2⋅p11)/(4)⎞⎠

Ако земам

5↑ тогаш со изводи во мапле најдов дека

H(Y) = log2(3).

Треба да се реши со произволни вредности на p20, p21. Го решив во maple ама пак добив дека H(Y) = log(3). Кога барав извод од изразот во магента не добивав резултат.

-

Треба да се реши каде p(x1) и p(x2) нема да ги третирам независно туку каде ќе зависат еден од друг т.е. да го претставам I(...) преку здружените веројатностиp(x1, x2) па потоа да вадам извод по p(x1, x2) .

p(y|x1, x2 = 0) =

x1 = 0

x1 = 1

x1 = 2

\overset

y = 0

y = 1

y = 2

⎡⎢⎢⎢⎣

1

0

0

0

0.5

0.5

0

0.5

0.5

⎤⎥⎥⎥⎦

p(y|x1, x2 = 1) =

x1 = 0

x1 = 1

x1 = 2

\overset

y = 0

y = 1

y = 2

⎡⎢⎢⎢⎣

0.5

0.5

0

0.5

0.5

0

0

0

1

⎤⎥⎥⎥⎦

p(x2) = 0; ⎡⎢⎢⎢⎣

p(y0)

p(y1)

p(y2)

⎤⎥⎥⎥⎦ = ⎡⎢⎢⎢⎣

1

0

0

0

0.5

0.5

0

0.5

0.5

⎤⎥⎥⎥⎦⎡⎢⎢⎢⎣

p(x0)

p(x1)

p(x2)

⎤⎥⎥⎥⎦ p(x2) = 1; ⎡⎢⎢⎢⎣

p(y0)

p(y1)

p(y2)

⎤⎥⎥⎥⎦ = ⎡⎢⎢⎢⎣

0.5

0.5

0

0.5

0.5

0

0

0

1

⎤⎥⎥⎥⎦⎡⎢⎢⎢⎣

p(x0)

p(x1)

p(x2)

⎤⎥⎥⎥⎦

\strikeout off\uuline off\uwave offH(Y|X1, X2) = ∑x1, x2p(x1, x2)⋅H(Y|X2 = x2, X1 = x1) = p(0, 0)H(Y|00) + p(10)H(Y|10) + p(20)H(Y|20) + p(01)H(Y|01) + p(11)H(Y|11) + p(2, 1)H(Y|21)

= p(00)⋅0 + \mathchoicep(10) + p(20) + p(01) + p(11)p(10) + p(20) + p(01) + p(11)p(10) + p(20) + p(01) + p(11)p(10) + p(20) + p(01) + p(11) + p(21)⋅0 го имав p(11) пропуштено!!!

Пази на индексите овде се разликуваат од индексите кога ги пресметував условните веројатности погоре. На пример овде „20” подразбира x1 = 2, x2 = 0

Подолниве изрази за p(Y) се точни овие погоре немаат врска!!!

p(Y = 0) = p(X1 = 0, X2 = 0)⋅p(Y = 0|X1 = 0, X2 = 0) + p(X1 = 0, X2 = 1)⋅p(Y = 0|X1 = 0, X2 = 1) + p(X1 = 1, X2 = 1)⋅p(Y = 0|X1 = 1, X2 = 1) = \mathchoicep(00) + (p(01) + p(11))/(2);p(00) + (p(01) + p(11))/(2);p(00) + (p(01) + p(11))/(2);p(00) + (p(01) + p(11))/(2);

p(Y = 1) = \mathchoice(p(10) + p(20))/(2) + (p(01) + p(11))/(2)(p(10) + p(20))/(2) + (p(01) + p(11))/(2)(p(10) + p(20))/(2) + (p(01) + p(11))/(2)(p(10) + p(20))/(2) + (p(01) + p(11))/(2); p(Y = 2) = \mathchoice(p(10) + p(20))/(2) + p(21)(p(10) + p(20))/(2) + p(21)(p(10) + p(20))/(2) + p(21)(p(10) + p(20))/(2) + p(21)

H(Y) = − ⎛⎝p(00) + (p(01) + p(11))/(2)⎞⎠⋅log2⎛⎝p(00) + (p(01) + p(11))/(2)⎞⎠ − ⎛⎝(p(10) + p(20))/(2) + (p(01) + p(11))/(2)⎞⎠⋅log2⎛⎝(p(10) + p(20))/(2) + (p(01) + p(11))/(2)⎞⎠ − ⎛⎝(p(10) + p(20))/(2) + p(21)⎞⎠⋅log2⎛⎝(p(10) + p(20))/(2) + p(21)⎞⎠

p(00) + p(10) + p(20) + p(01) + p(11) + p(21) = 1 p(00) = 1 − p(10) + p(20) + p(01) + p(11) + p(21)

\strikeout off\uuline off\uwave off\mathchoicep(00) = a; p(10) = b; p(20) = c; p(01) = d; p(11) = e; p(21) = f;p(00) = a; p(10) = b; p(20) = c; p(01) = d; p(11) = e; p(21) = f;p(00) = a; p(10) = b; p(20) = c; p(01) = d; p(11) = e; p(21) = f;p(00) = a; p(10) = b; p(20) = c; p(01) = d; p(11) = e; p(21) = f;

\strikeout off\uuline off\uwave offa + b + c + d + e + f = 1

\strikeout off\uuline off\uwave offH(Y) = − ⎛⎝a + (d + e)/(2)⎞⎠⋅log2⎛⎝a + (d + e)/(2)⎞⎠ − ⎛⎝(b + c)/(2) + (d + e)/(2)⎞⎠⋅log2⎛⎝(b + c)/(2) + (d + e)/(2)⎞⎠ − ⎛⎝(b + c)/(2) + f⎞⎠⋅log2⎛⎝(b + c)/(2) + f⎞⎠

I(X1, X2;Y) = H(Y) − H(Y|X1X2) = − ⎛⎝a + (d + e)/(2)⎞⎠⋅log2⎛⎝a + (d + e)/(2)⎞⎠ − ⎛⎝(b + c)/(2) + (d + e)/(2)⎞⎠⋅log2⎛⎝(b + c)/(2) + (d + e)/(2)⎞⎠ − ⎛⎝(b + c)/(2) + f⎞⎠⋅log2⎛⎝(b + c)/(2) + f⎞⎠ − b − c − d − e

Можеби решението е:

\mathchoiceC = max(I(X1, X2;Y)) = log2(3) − b − c − d − e = log2(3) − 4⋅0.072845 = 1.2936C = max(I(X1, X2;Y)) = log2(3) − b − c − d − e = log2(3) − 4⋅0.072845 = 1.2936C = max(I(X1, X2;Y)) = log2(3) − b − c − d − e = log2(3) − 4⋅0.072845 = 1.2936C = max(I(X1, X2;Y)) = log2(3) − b − c − d − e = log2(3) − 4⋅0.072845 = 1.2936

\strikeout off\uuline off\uwave offC = supp(x1, x2)min{I(X1, X2;Y), I(X1;Y1|X2)}

После бара минимум. Може долната граница ја диктира: \strikeout off\uuline off\uwave offI(X1;Y1|X2) но како да го пресметам?

Со овој пристап и со претпоставка \mathchoiced + e = p(01) + p(11) = hd + e = p(01) + p(11) = hd + e = p(01) + p(11) = hd + e = p(01) + p(11) = h и \mathchoiceb + c = p(10) + p(20) = kb + c = p(10) + p(20) = kb + c = p(10) + p(20) = kb + c = p(10) + p(20) = k во мапле со парцијални изводи добив:

\mathchoice{a = 1 ⁄ 2 k, h = 2 ⁄ 3 − k, k = k}{a = 1 ⁄ 2 k, h = 2 ⁄ 3 − k, k = k}{a = 1 ⁄ 2 k, h = 2 ⁄ 3 − k, k = k}{a = 1 ⁄ 2 k, h = 2 ⁄ 3 − k, k = k}

\strikeout off\uuline off\uwave off

x2 = 0

x2 = 1

\overset

x1 = 0

x1 = 1

x1 = 2

⎡⎢⎣

0.35431

0.072845

0.072845

0.072845

0.072845

0.35431

⎤⎥⎦

\strikeout off\uuline off\uwave offp(00) = a = 0.35431 p(10) = b = 0.072845; p(20) = c = 0.072845; p(01) = d = 0.072845; p(11) = e = 0.072845; p(21) = f = 0.35431;

Ако го пресметам I(X1, X2;Y) за овие вредности се добива: I(X1, X2;Y) = 1.04256 што приближно одговара на R1 дадено подолу.

k = 2⋅0.072845 = 0.14569 h = 2 ⁄ 3 − 0.14569 = 0.52097666666667

h = 2⋅0.072845 = 0.14569 0.14569⋅2 = 0.29138

H(Y|X1, X2) = p(10) + p(20) + p(01) + p(11) = b + c + d + e

{a = 1 ⁄ 3 − 1 ⁄ 2 d − 1 ⁄ 2 e, b = 2 ⁄ 3 − c − d − e, d = d}

d = 0.072845

\strikeout off\uuline off\uwave offb = 2 ⁄ 3 − 0.072845 − 0.072845 − 0.072845 = 0.44813166666667

a = (1)/(2) − (0.072845)/(2) − (0.072845)/(2) = 0.26048833333333

{a = 4 ⁄ 9 − 1 ⁄ 2 d − 1 ⁄ 2 e, b = 2 ⁄ 9 − c − d − e, d = d}

a = 4 ⁄ 9 − 0.072845 ⁄ 2 − 0.072845 ⁄ 2 = 0.37159944444444

b = 2 ⁄ 9 − 0.072845 − 0.072845 − 0.072845 = 0.0036872222222222 0.0036872222222222⋅2 = 0.0073744444444444

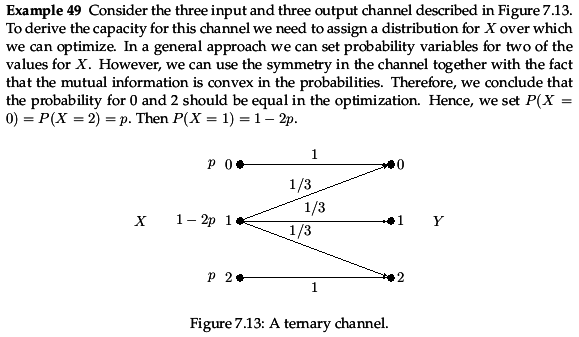

Пример за ternary channel од Lund University скриптата

I(X;Y) = H(Y) − H(Y|X); H(Y|X) = p⋅H(Y|X = 0) + (1 − 2p)⋅H(Y|X = 1) + p⋅H(Y|X = 2) = (1 − 2p)log3(3)

− H(Y) = p(Y = 0)⋅log(p(Y = 0)) + p(Y = 1)⋅log(p(Y = 1)) + p(Y = 2)⋅log(p(Y = 2))

p(Y = 0) = (p + (1 − 2p)/(3)) = (1)/(3) + (p)/(3); p(Y = 1) = (1)/(3)⋅(1 − 2p) = (1)/(3) − (2p)/(3); p(Y = 2) = p + (1)/(3)⋅(1 − 2p) = (1)/(3) + (p)/(3);

H(Y) = H⎛⎝(1)/(3) + (p)/(3), (1)/(3) − (2p)/(3), (1)/(3) + (p)/(3)⎞⎠

\strikeout off\uuline off\uwave offI(X;Y) = H⎛⎝(1)/(3) + (p)/(3), (1)/(3) − (2p)/(3), (1)/(3) + (p)/(3)⎞⎠ − (1 − 2p)log3(3)

(d)/(dx)(I(X;Y)) = 0 → p0 = ...

После потстетувањето на проблемите од EIT 7.18 и 7.28

2C = 2C1 + 2C2 = 21 − H(p) + 20 = 3 ако p = 0 C = log2(3) = 1.585

C = log2(21 − H(p) + 1)

\strikeout off\uuline off\uwave off\mathchoiceC = log2⎡⎣exp2⎛⎝((1 − β)Hα + (α − 1)Hβ)/(β − α)⎞⎠ + exp2⎛⎝( − βHα + αHβ)/(β − α)⎞⎠⎤⎦C = log2⎡⎣exp2⎛⎝((1 − β)Hα + (α − 1)Hβ)/(β − α)⎞⎠ + exp2⎛⎝( − βHα + αHβ)/(β − α)⎞⎠⎤⎦C = log2⎡⎣exp2⎛⎝((1 − β)Hα + (α − 1)Hβ)/(β − α)⎞⎠ + exp2⎛⎝( − βHα + αHβ)/(β − α)⎞⎠⎤⎦C = log2⎡⎣exp2⎛⎝((1 − β)Hα + (α − 1)Hβ)/(β − α)⎞⎠ + exp2⎛⎝( − βHα + αHβ)/(β − α)⎞⎠⎤⎦

\strikeout off\uuline off\uwave off⎡⎢⎣

p(y0)

p(y1)

⎤⎥⎦ = ⎡⎢⎣

1

0

0.5

0.5

⎤⎥⎦⎡⎢⎣

p(x0)

p(x1)

⎤⎥⎦

Дефинитивно се работи за канал како оној во EIT 7.28

⎡⎢⎢⎢⎣

p(y0)

p(y1)

p(y2)

⎤⎥⎥⎥⎦ = ⎡⎢⎢⎢⎣

1

0

0

0

0.5

0.5

0

0.5

0.5

⎤⎥⎥⎥⎦⎡⎢⎢⎢⎣

p(x0)

p(x1)

p(x2)

⎤⎥⎥⎥⎦

2C = 21 − H(p) + 21 − H(q) = 21 − 0 + 21 − 1 = 2 + 1 = 3

C = log2(3) = 1.585

Theorem 3.3.3 од Ash не можеш да ја користиш зошто каналната матрица е сингуларна (проверив во Maple)!!!!

-

Се навраќам повторно на 5↑

p(y|x1, x2 = 0) =

x1 = 0

x1 = 1

x1 = 2

\overset

y = 0

y = 1

y = 2

⎡⎢⎢⎢⎣

1

0

0

0

0.5

0.5

0

0.5

0.5

⎤⎥⎥⎥⎦

p(y|x1, x2 = 1) =

x1 = 0

x1 = 1

x1 = 2

\overset

y = 0

y = 1

y = 2

⎡⎢⎢⎢⎣

0.5

0.5

0

0.5

0.5

0

0

0

1

⎤⎥⎥⎥⎦

p(x2) = 0; ⎡⎢⎢⎢⎣

p(y0)

p(y1)

p(y2)

⎤⎥⎥⎥⎦ = ⎡⎢⎢⎢⎣

1

0

0

0

0.5

0.5

0

0.5

0.5

⎤⎥⎥⎥⎦⎡⎢⎢⎢⎣

p(x0)

p(x1)

p(x2)

⎤⎥⎥⎥⎦ p(x2) = 1; ⎡⎢⎢⎢⎣

p(y0)

p(y1)

p(y2)

⎤⎥⎥⎥⎦ = ⎡⎢⎢⎢⎣

0.5

0.5

0

0.5

0.5

0

0

0

1

⎤⎥⎥⎥⎦⎡⎢⎢⎢⎣

p(x0)

p(x1)

p(x2)

⎤⎥⎥⎥⎦

p(y|x1x2)

y0

y1

y2

00

1

0

0

10

0

0.5

0.5

20

0

0.5

0.5

01

0.5

0.5

0

11

0.5

0.5

0

21

0

0

1

\strikeout off\uuline off\uwave offH(Y|X1, X2) = ∑x1, x2p(x1, x2)⋅H(Y|X2 = x2, X1 = x1) = p(0, 0)H(Y|00) + p(10)H(Y|10) + p(20)H(Y|20) + p(01)H(Y|01) + p(11)H(Y|11) + p(2, 1)H(Y|21)

= p(00)⋅0 + p(10) + p(20) + p(01) + p(11) + p(21)⋅0 го имав p(11) пропуштено!!!

p(Y = 0) = p(X1 = 0, X2 = 0)⋅p(Y = 0|X1 = 0, X2 = 0) + p(X1 = 0, X2 = 1)⋅p(Y = 0|X1 = 0, X2 = 1) + p(X1 = 1, X2 = 1)⋅p(Y = 0|X1 = 1, X2 = 1) = \mathchoicep(00) + (p(01) + p(11))/(2);p(00) + (p(01) + p(11))/(2);p(00) + (p(01) + p(11))/(2);p(00) + (p(01) + p(11))/(2);

p(Y = 1) = \mathchoice(p(10) + p(20))/(2) + (p(01) + p(11))/(2)(p(10) + p(20))/(2) + (p(01) + p(11))/(2)(p(10) + p(20))/(2) + (p(01) + p(11))/(2)(p(10) + p(20))/(2) + (p(01) + p(11))/(2); p(Y = 2) = \mathchoice(p(10) + p(20))/(2) + p(21)(p(10) + p(20))/(2) + p(21)(p(10) + p(20))/(2) + p(21)(p(10) + p(20))/(2) + p(21)

H(Y) = − ⎛⎝p(00) + (p(01) + p(11))/(2)⎞⎠⋅log2⎛⎝p(00) + (p(01) + p(11))/(2)⎞⎠ − ⎛⎝(p(10) + p(20))/(2) + (p(01) + p(11))/(2)⎞⎠⋅log2⎛⎝(p(10) + p(20))/(2) + (p(01) + p(11))/(2)⎞⎠ − ⎛⎝(p(10) + p(20))/(2) + p(21)⎞⎠⋅log2⎛⎝(p(10) + p(20))/(2) + p(21)⎞⎠

p(00) + p(10) + p(20) + p(01) + p(11) + p(21) = 1 p(00) = 1 − p(10) + p(20) + p(01) + p(11) + p(21)

\strikeout off\uuline off\uwave off\mathchoicep(00) = a; p(10) = b; p(20) = c; p(01) = d; p(11) = e; p(21) = f;p(00) = a; p(10) = b; p(20) = c; p(01) = d; p(11) = e; p(21) = f;p(00) = a; p(10) = b; p(20) = c; p(01) = d; p(11) = e; p(21) = f;p(00) = a; p(10) = b; p(20) = c; p(01) = d; p(11) = e; p(21) = f;

\strikeout off\uuline off\uwave offa + b + c + d + e + f = 1

\strikeout off\uuline off\uwave offH(Y) = − ⎛⎝a + (d + e)/(2)⎞⎠⋅log2⎛⎝a + (d + e)/(2)⎞⎠ − ⎛⎝(b + c)/(2) + (d + e)/(2)⎞⎠⋅log2⎛⎝(b + c)/(2) + (d + e)/(2)⎞⎠ − ⎛⎝(b + c)/(2) + f⎞⎠⋅log2⎛⎝(b + c)/(2) + f⎞⎠

\strikeout off\uuline off\uwave offH(Y|X1, X2) = p(10) + p(20) + p(01) + p(11) = b + c + d + e

I(X1, X2;Y) = − ⎛⎝a + (d + e)/(2)⎞⎠⋅log2⎛⎝a + (d + e)/(2)⎞⎠ − ⎛⎝(b + c)/(2) + (d + e)/(2)⎞⎠⋅log2⎛⎝(b + c)/(2) + (d + e)/(2)⎞⎠ − ⎛⎝(b + c)/(2) + f⎞⎠⋅log2⎛⎝(b + c)/(2) + f⎞⎠ − b − c − d − e

a = (4)/(9) − (d)/(2) − (e)/(2); b = (2)/(9) − c − d − e

p(x2) = 0; ⎡⎢⎢⎢⎣

p(y0)

p(y1)

p(y2)

⎤⎥⎥⎥⎦ = ⎡⎢⎢⎢⎣

1

0

0

0

0.5

0.5

0

0.5

0.5

⎤⎥⎥⎥⎦⎡⎢⎢⎢⎣

p(x0)

p(x1)

p(x2)

⎤⎥⎥⎥⎦

H(Y|X1|X2 = 0) = ∑x1, p(x1)⋅H(Y|X1 = x1) = p(x0)⋅0 + p(x1)⋅1 + p(x2)⋅1

p(x2) = 1; ⎡⎢⎢⎢⎣

p(y0)

p(y1)

p(y2)

⎤⎥⎥⎥⎦ = ⎡⎢⎢⎢⎣

0.5

0.5

0

0.5

0.5

0

0

0

1

⎤⎥⎥⎥⎦⎡⎢⎢⎢⎣

p(x0)

p(x1)

p(x2)

⎤⎥⎥⎥⎦

H(Y|X1|X2 = 0) = ∑x1, p(x1)⋅H(Y|X1 = x1) = p(x0)⋅1 + p(x1)⋅1

H(Y|X1X2) = ∑x2, p(x2)⋅H(Y|X1X2 = x2) = p(x2 = 0)⋅(p(x1) + p(x2)) + p(x2 = 1)⋅(p(x0) + p(x1)) = p2(0)⋅p1(1) + p2(0)⋅p1(2) + p2(1)⋅p1(0) + p2(1)⋅p1(1)

\strikeout off\uuline off\uwave offI(X1, X2;Y) = H(Y) − H(Y|X1, X2) = H(X1, X2) − H(X1, X2|Y)

Сега сакам да го пресметам H(Y) како што треба:

p(Y = 0|X2 = 0) = p(X1 = 0) = p1(0); p(Y = 1|X2 = 0) = (p(X1 = 1))/(2) + (p(X1 = 2))/(2) = (p1(1))/(2) + (p1(2))/(2); p(Y = 2|X2 = 0) = (p1(1))/(2) + (p1(2))/(2)

p(Y = 0|X2 = 1) = (p(X1 = 0))/(2) + (p(X1 = 1))/(2) = (p1(0))/(2) + (p1(1))/(2); p(Y = 1|X2 = 1) = (p(X1 = 0))/(2) + (p(X1 = 1))/(2) = (p1(0))/(2) + (p1(1))/(2); p(Y = 2|X2 = 1) = p(X1 = 2) = p1(2);

\mathchoicep(Y = 0) = p20p10 + p21⎛⎝(p10 + p11)/(2)⎞⎠; p(Y = 1) = p20⋅(p11 + p12)/(2) + p21⋅(p10 + p11)/(2); p(Y = 2) = p20⋅(p11 + p12)/(2) + p21⋅p12p(Y = 0) = p20p10 + p21⎛⎝(p10 + p11)/(2)⎞⎠; p(Y = 1) = p20⋅(p11 + p12)/(2) + p21⋅(p10 + p11)/(2); p(Y = 2) = p20⋅(p11 + p12)/(2) + p21⋅p12p(Y = 0) = p20p10 + p21⎛⎝(p10 + p11)/(2)⎞⎠; p(Y = 1) = p20⋅(p11 + p12)/(2) + p21⋅(p10 + p11)/(2); p(Y = 2) = p20⋅(p11 + p12)/(2) + p21⋅p12p(Y = 0) = p20p10 + p21⎛⎝(p10 + p11)/(2)⎞⎠; p(Y = 1) = p20⋅(p11 + p12)/(2) + p21⋅(p10 + p11)/(2); p(Y = 2) = p20⋅(p11 + p12)/(2) + p21⋅p12

\strikeout off\uuline off\uwave offI(X1, X2;Y) = H⎛⎝p20p10 + p21⎛⎝(p10 + p11)/(2)⎞⎠, p20⋅(p11 + p12)/(2) + p21⋅(p10 + p11)/(2), p20⋅(p11 + p12)/(2) + p21⋅p12⎞⎠ − p20⋅p11 − p20⋅p12 − p21⋅p10 − p21⋅p11

\strikeout off\uuline off\uwave off\mathchoicep20 = a; p21 = b; p10 = c; p11 = d; p12 = e;p20 = a; p21 = b; p10 = c; p11 = d; p12 = e;p20 = a; p21 = b; p10 = c; p11 = d; p12 = e;p20 = a; p21 = b; p10 = c; p11 = d; p12 = e;

\strikeout off\uuline off\uwave off(ac + b(1 ⁄ 2 c + 1 ⁄ 2 d))ln(ac + b(1 ⁄ 2 c + 1 ⁄ 2 d)) − (a(1 ⁄ 2 d + 1 ⁄ 2 e) + b(1 ⁄ 2 c + 1 ⁄ 2 d))ln(a(1 ⁄ 2 d + 1 ⁄ 2 e) + b(1 ⁄ 2 c + 1 ⁄ 2 d)) − a(1 ⁄ 2 d + 1 ⁄ 2 e) − be⋅log2(a(1 ⁄ 2 d + 1 ⁄ 2 e) − be)

\strikeout off\uuline off\uwave offI(X1, X2;Y) = H⎛⎝p20p10 + p21⎛⎝(p10 + p11)/(2)⎞⎠, p20⋅(p11 + p12)/(2) + p21⋅(p10 + p11)/(2), p20⋅(p11 + p12)/(2) + p21⋅p12⎞⎠ − p20⋅p11 − p20⋅p12 − p21⋅p10 − p21⋅p11

Мислам дека униформна распределба е оптимална за p(X1).

I(X1, X2;Y) = H⎛⎝(p20)/(3) + p21⎛⎝(1)/(3)⎞⎠, p20⋅(1)/(3) + p21⋅(1)/(3), p20⋅(1)/(3) + (p21)/(3)⎞⎠ − p20⋅(1)/(3) − p20⋅(1)/(3) − p21⋅(1)/(3) − p21⋅(1)/(3)

I(X1, X2;Y) = H⎛⎝(p20)/(3) + (p21)/(3), (p20)/(3) + (p21)/(3), (p20)/(3) + (p21)/(3)⎞⎠ − (p20)/(3) − (p20)/(3) − (p21)/(3) − (p21)/(3)

I(X1, X2;Y) = − log2⎛⎝(p20)/(3) + (p21)/(3)⎞⎠ − (2⋅p20)/(3) − (2⋅p21)/(3)

Во Maple ги добив следниве вредности

d = 1 − c − e

{{a = 0, c = 1 ⁄ 3, e = 1 ⁄ 2}, {a = 1, c = 1 ⁄ 2, e = 1 ⁄ 3}}

e = 1 − d − e

{{a = 0, d = 1 ⁄ 6, e = 1 ⁄ 2}, {a = 1, d = 1 ⁄ 6, e = 1 ⁄ 3}}

За првото решение:

c = 1 − d − e = 1 − (1)/(6) − (1)/(2) = 1 − (1 + 3)/(6) = 1 − (2)/(3) = (1)/(3) во согласност е со резултатот за d = 1 − c − e.

(a, b, c, d, e) = ⎛⎝0, 1, (1)/(3), (1)/(6), (1)/(2)⎞⎠

За второто решение:

c = 1 − d − e = 1 − (1)/(6) − (1)/(3) = 1 − (1 + 2)/(6) = 1 − (1)/(2) = (1)/(2) во согласност е со резултатот за d = 1 − c − e.

(a, b, c, d, e) = ⎛⎝1, 0, (1)/(2), (1)/(6), (1)/(3)⎞⎠

За овие вредности

I(X1, X2;Y) = 1

Моево изведување ми изгледа добро но се прашувам каде е шумот???

Врз основ на

5↑ да го најдам \strikeout off\uuline off\uwave off

I(X1;Y1|X2) за да потоa побарам минимум.

I(X1;Y1|X2) = H(Y1) − H(Y1|X1, X2) = H(Y1) − ⎲⎳x1, x2p(x1, x2)⋅H(Y1|X1X2 = x1, x2) = H(Y1) − p(0, 0)H(Y1|00) + p(01)H(Y1|01) + p(0, 2)H(Y1|02) + p(1, 0)H(Y1|10)

+ p(11)H(Y1|11) + p(1, 2)H(Y1|12) = H(Y1) − 0 = H(Y1)

C = supp(x1, x2)min{I(X1, X2;Y), I(X1;Y1|X2)}

I(X1;Y1|X2) = H(Y1)

\strikeout off\uuline off\uwave offI(X1, X2;Y) = H(Y) − (p(X2 = 0)⋅p(X1 = 1) + p(X2 = 0)⋅p(X1 = 2) + p(X2 = 1)⋅p(X1 = 0) + p(X2 = 1)⋅p(X1 = 1))

I(X1, X2;Y) ≤ I(X1;Y1|X2)

C = supp(x1, x2)(I(X1, X2;Y))

H(X, f(X)) = H(X) + H(f(X)|X) = H(f(X)) + H(X|f(X)); H(f(X)|X) = 0; H(X) > H(f(x));

Бараме капацитет за деградиран релеен канал, а не го користам изразот кој го дефинира

\strikeout off\uuline off\uwave offp(y, y1|x1, x2) = p(y|x1, x2)⋅p(y1|x1, x2)

\mathnormalH(Y, Y1|X1X2) = H(Y, Y1) − H(Y, Y1|X1, X2) = H(Y, Y1) − H(Y|X1X2) − H(Y1|X1X2)

I(X1X2;Y1Y2) = H(Y, Y1) − H(Y, Y1|X1X2) = H(Y, Y1) − H(Y, Y1) + H(Y|X1X2) + H(Y1|X1X2) = H(Y|X1X2) + H(Y1|X1X2)

= (p(X2 = 0)⋅p(X1 = 1) + p(X2 = 0)⋅p(X1 = 2) + p(X2 = 1)⋅p(X1 = 0) + p(X2 = 1)⋅p(X1 = 1)) + 0

p(X, Z|Y) = (p(X, Y, Z))/(p(Y)) = (p(X, Y)p(Z|XY))/(p(Y)) = p(X|Y)⋅p(Z|Y)

Revisited (откако го поминав чланакот од van der Meulen)

p(X, Z|Y) = (p(X, Y, Z))/(p(Y)) = (p(X, Y)p(Z|XY))/(p(Y)) = p(X|Y)⋅p(Z|Y)

\strikeout off\uuline off\uwave offp(y, y1|x1, x2) = p(y|x1, x2)⋅p(y1|x1, x2) Ова врска нема

for degraded: \mathchoiceX1 → (X2, Y1) → YX1 → (X2, Y1) → YX1 → (X2, Y1) → YX1 → (X2, Y1) → Y for reversly degraded:\mathchoiceX1 → (X2, Y) → Y1X1 → (X2, Y) → Y1X1 → (X2, Y) → Y1X1 → (X2, Y) → Y1

\strikeout off\uuline off\uwave off\mathchoicep(y, y1|x1, x2)\overset deg. = p(y1|x1, x2)⋅p(y|y1, x2)p(y, y1|x1, x2)\overset deg. = p(y1|x1, x2)⋅p(y|y1, x2)p(y, y1|x1, x2)\overset deg. = p(y1|x1, x2)⋅p(y|y1, x2)p(y, y1|x1, x2)\overset deg. = p(y1|x1, x2)⋅p(y|y1, x2) = p(y|x1, x2)⋅p(y1|y, x1, x2)\overset rev.deg = p(y|x1, x2)⋅p(y1|y, x2)

\mathnormalI(X1, X2;Y, Y1) = H(Y, Y1) − H(Y, Y1|X1, X2) = H(Y, Y1) − H(Y1|X1X2) − H(Y|Y1, X2)

I(X1X2;Y1Y) = H(Y, Y1) − H(Y, Y1|X1X2) = \mathchoiceH(Y1)H(Y1)H(Y1)H(Y1) + H(Y|Y1) − \mathchoiceH(Y1|X1X2)H(Y1|X1X2)H(Y1|X1X2)H(Y1|X1X2) − H(Y|Y1, X2) = \mathchoiceI(X1, X2;Y1) + I(X2;Y|Y1)I(X1, X2;Y1) + I(X2;Y|Y1)I(X1, X2;Y1) + I(X2;Y|Y1)I(X1, X2;Y1) + I(X2;Y|Y1)

I(X1X2;Y1Y) = \mathchoiceI(X1X2;Y)I(X1X2;Y)I(X1X2;Y)I(X1X2;Y) + I(X1X2;Y1|Y) = I(X1X2;Y1) + I(X1X2;Y|Y1) = \mathchoiceI(X1, X2;Y1) + I(X2;Y|Y1)I(X1, X2;Y1) + I(X2;Y|Y1)I(X1, X2;Y1) + I(X2;Y|Y1)I(X1, X2;Y1) + I(X2;Y|Y1) ⇒ I(X1X2;Y|Y1) = I(X2;Y|Y1)

I(X1X2;Y1) = I(X2;Y1) + I(X1;Y1|X2) = H(Y1) − \cancelto0H(Y1|X2) + I(X1;Y1|X2) = H(Y1) + I(X1;Y1|X2)

\strikeout off\uuline off\uwave offI(X1X2;Y) + I(X1X2;Y1|Y) = H(Y1) + I(X1;Y1|X2) + I(X2;Y|Y1)

I(X1X2;Y) + I(X1;Y1|Y) + I(X2;Y1|Y, X1)

Овој обид е од второто читање на чланакот (после EIT chapter 15).

Ми изгледа многу едноставен и точен. Најмногу му верувам. Сепак не се добиваат вредностите што ги добил Sato.

p(y|x1x2) p(x1x2, y)

y|x1x2

y0

y1

y2

00

1

0

0

10

0

0.5

0.5

20

0

0.5

0.5

01

0.5

0.5

0

11

0.5

0.5

0

21

0

0

1

y|x1x2

y0

y1

y2

p(x1x2)

00

p

0

0

p

10

0

0.5p

0.5p

p

20

0

0.5p

0.5p

p

01

0.5p

0.5p

0

p

11

0.5p

0.5p

0

p

21

0

0

p

p

p(y)

2p

2p

2p

I(X1X2;Y) = H(Y) − H(Y|X1X2) = − 6⋅plog(2p) − H(Y|X1X2)

H(Y|X1X2 = 00) = H(Y|X1X2 = 00) = (1⋅log1) = 0

H(Y|X1X2 = 10) = p(Y = 1|(X1X2) = 10)log(p(Y = 1|(X1X2) = 10)) + p(Y = 1|(X1X2) = 10)log(p(Y = 1|(X1X2) = 10))

= (1)/(2)log2 + (1)/(2)log2 = log2

H(Y|X1X2 = 20) = H(Y|X1X2 = 01) = H(Y|X1X2 = 11) = log2

H(Y|X1X2) = ⎲⎳x1x2yp(x1x2)H(y|x1x2) = 2⋅p⋅0 + 4⋅p⋅log2 = 4⋅p⋅log2 = 4⋅p⋅log2

I(X1X2;Y) = H(Y) − H(Y|X1X2) = − 6⋅plog(2p) − H(Y|X1X2) = − 6⋅p⋅log(2p) − 4p⋅log2

(d)/(dp)(I(X1X2;Y)) = 0 → p = 0.116; → maxpI(X1X2;Y) = 0.8 ternary symbols = 1.263 bits

0.116⋅6 = 0.696

Не бива со извод зошто после не излегува вкупната веројатност 1. Затоа мора со униформна распределба на p(x1x2) = p = (1)/(6).

N⎡⎣1 − 4⋅(1)/(6)⋅Log[3, 2]⎤⎦ = 0.57938 ternary digits/transmission

N⎡⎣Log[2, 3] − 4⋅(1)/(6)⋅Log[2, 2]⎤⎦ = 0.918296 bits/transmission

y = log3(x) → x = 3y|⋅log2() → log2x = y⋅log2(3) → y = (log2(x))/(log2(3))

y = log2(x) → x = 2y|⋅log3() → log3x = y⋅log3(2) → y = (log3(x))/(log3(2))

Види ги примерите во Network Information Theory од A. E. Gamal. Таму ќе ти стане јасно зошто не ти излегуваат вреднотите како горе.

Ова е добро ама капацитетот е

C ≥ maxp(x, y)min{I(X1, X2;Y2)I(X1;Y2|X2)}

- Значи не го имам пресметано I(X1;Y2|X2)

Ама што е во случајов Y2?????

I(X1;Y2|X2) = H(Y|X2) − H(Y|X1X2)

H(Y|X2) = ?

H(Y|X2) = p(X2 = 0)H(Y|X2 = 0) + p(X2 = 1)H(Y|X2 = 1)

y|x1x2

y0

y1

y2

00

1

0

0

10

0

0.5

0.5

20

0

0.5

0.5

y|x1x2

y0

y1

y2

01

0.5

0.5

0

11

0.5

0.5

0

21

0

0

1

H(Y|X2 = 0)

H(Y|X1X2 = 0) = p(X1 = 0, X2 = 0)⋅H(Y|X1 = 0, X2 = 0) + p(X1 = 1, X2 = 0)⋅H(Y|X1 = 1, X2 = 0) + p(X1 = 2, X2 = 0)⋅H(Y|X1 = 2, X2 = 0) =

= p(X1 = 1, X2 = 0)log2 + p(X1 = 2, X2 = 0)log2

H(Y|X1X2 = 1) = p(X1 = 0, X2 = 1)⋅H(Y|X1 = 0, X2 = 1) + p(X1 = 1, X2 = 1)⋅H(Y|X1 = 1, X2 = 1) + p(X1 = 2, X2 = 1)⋅H(Y|X1 = 2, X2 = 1) =

= p(X1 = 0, X2 = 1)log2 + p(X1 = 1, X2 = 1)log2

H(Y|X2) = p(X2 = 0)H(Y|X2 = 0) + p(X2 = 1)H(Y|X2 = 1) =

= p(X2 = 0)(p(X1 = 1|X2 = 0) + p(X1 = 2|X2 = 0))log2 + p(X2 = 1)(p(X1 = 0|X2 = 1) + p(X1 = 1|X2 = 1))log2

= (p(X1 = 1, X2 = 0) + p(X1 = 2, X2 = 0) + p(X1 = 0, X2 = 1) + p(X1 = 1, X2 = 1))log2 = 4plog2

I(X1;Y|X2) = H(Y|X2) − H(Y|X1X2) = 4plog2 − 2p⋅log2 = 2plog2 ternary digits/transmission

I(X1;Y|X2) = 2p bits/transmission

maxI(X1;Y|X2) = ||p = (1)/(6)|| = (1)/(3)

Овој обид е после El Gamal, Network Information Theory Ch.16.

p(y|x1x2) p(x1x2, y)

y|x1x2

y0

y1

y2

00

1

0

0

10

0

0.5

0.5

20

0

0.5

0.5

01

0.5

0.5

0

11

0.5

0.5

0

21

0

0

1

y|x1x2

y0

y1

y2

p(x1x2)

00

p1

0

0

p1

10

0

0.5p2

0.5p2

p2

20

0

0.5p3

0.5p3

p3

01

0.5p4

0.5p4

0

p4

11

0.5p5

0.5p5

0

p5

21

0

0

p6

p6

p(y)

1 ⁄ 3

1 ⁄ 3

1 ⁄ 3

I(X1X2;Y) = H(Y) − H(Y|X1X2) = − 6⋅plog(2p) − H(Y|X1X2)

H(Y|X1X2 = 00) = H(Y|X1X2 = 00) = (1⋅log1) = 0

H(Y|X1X2 = 10) = p(Y = 1|(X1X2) = 10)log(p(Y = 1|(X1X2) = 10)) + p(Y = 1|(X1X2) = 10)log(p(Y = 1|(X1X2) = 10))

= (1)/(2)log2 + (1)/(2)log2 = log2

H(Y|X1X2 = 20) = H(Y|X1X2 = 01) = H(Y|X1X2 = 11) = log2

H(Y|X1X2) = ⎲⎳x1x2yp(x1x2)H(y|x1x2) = 2⋅p⋅0 + 4⋅p⋅log2 = 4⋅p⋅log2 = 4⋅p⋅log2

I(X1X2;Y) = H(Y) − H(Y|X1X2) = − 6⋅plog(2p) − H(Y|X1X2) = − 6⋅p⋅log(2p) − 4p⋅log2

(d)/(dp)(I(X1X2;Y)) = 0 → p = 0.116; → maxpI(X1X2;Y) = 0.8 ternary symbols = 1.263 bits

0.116⋅6 = 0.696

Не бива со извод зошто после не излегува вкупната веројатност 1. Затоа мора со униформна распределба на p(x1x2) = p = (1)/(6).

N⎡⎣1 − 4⋅(1)/(6)⋅Log[3, 2]⎤⎦ = 0.57938 ternary digits/transmission

N⎡⎣Log[2, 3] − 4⋅(1)/(6)⋅Log[2, 2]⎤⎦ = 0.918296 bits/transmission

Следново изведување е ептен точно. Дефинитивно не можам да најдам грешка. Евентуално да дозволиш p(y) да не биде униформно

y|x1x2

y0

y1

y2

00

1

0

0

10

0

0.5

0.5

20

0

0.5

0.5

01

0.5

0.5

0

11

0.5

0.5

0

21

0

0

1

y|x1x2

y0

y1

y2

p(x1x2)

00

1 ⁄ 6

0

0

1 ⁄ 6

10

0

0.5(1 ⁄ 3 − x)

0.5(1 ⁄ 3 − x)

1 ⁄ 3 − x

20

0

0.5x

0.5x

x

01

0.5(1 ⁄ 3 − x)

0.5(1 ⁄ 3 − x)

0

1 ⁄ 3 − x

11

0.5x

0.5x

0

x

21

0

0

1 ⁄ 6

1 ⁄ 6

p(y)

1 ⁄ 3

1 ⁄ 3

1 ⁄ 3

p2 + p3 = (1)/(3) → p2 = 1 ⁄ 3 − p3 = 1 ⁄ 3 − x

p4 + p5 = (1)/(3) → p4 = 1 ⁄ 3 − p5 = 1 ⁄ 3 − y

I(X1X2;Y) = H(Y) − H(Y|X1X2) = log3 − H(Y|X1X2)

H(Y|X1X2 = 00) = H(Y|X1X2 = 21) = (1⋅log1) = 0

H(Y|X1X2 = 10) = p(Y = 1|(X1X2) = 10)log(p(Y = 1|(X1X2) = 10)) + p(Y = 1|(X1X2) = 10)log(p(Y = 1|(X1X2) = 10))

= (1)/(2)log2 + (1)/(2)log2 = log2

H(Y|X1X2 = 20) = H(Y|X1X2 = 01) = H(Y|X1X2 = 11) = log2

H(Y|X1X2) = ⎲⎳x1x2yp(x1x2)H(y|x1x2) = 2⋅(1)/(6)⋅0 + (2⋅x + 2 ⁄ 3 − 2x)⋅log2 = 2 ⁄ 3⋅log2

I(X1X2;Y) = H(Y) − H(Y|X1X2) = 1 − (2 ⁄ 3)⋅log2

max[I(X1X2;Y)] = 1 − (2)/(3)log2 ternary digits/transmission

N⎡⎣1 − (2)/(3)⋅Log[3, 2]⎤⎦ = 0.57938

N⎡⎣Log[2, 3] − (2)/(3)⋅Log[2, 2]⎤⎦ = 0.918296 bits/transmission

Исто како при претпоставката дека

p(x1x2) е униформно распределена

I(X1;Y2|X2) = I(X1;Y2) = H(X1) − H(Y2|X1) = log3 = 1 ternary digits/transmission

N[Log[2, 3]] = 1.58496

Min[0.92, 1.585] = 0.92

Не мора да се глупираш и да пишуваш

log2. Најлесно е цело време да работиш со

log2 и одма ќе добиеш резултат во бити.

Од NIT Lecture Notes Relay with limmited lookahead.

y|x1x2

y0

y1

y2

00

1

0

0

10

0

0.5

0.5

20

0

0.5

0.5

01

0.5

0.5

0

11

0.5

0.5

0

21

0

0

1

y|x1x2

y0

y1

y2

p(x1x2)

00

7 ⁄ 18

0

0

7 ⁄ 18

10

0

1 ⁄ 36

1 ⁄ 36

1 ⁄ 18

20

0

1 ⁄ 36

1 ⁄ 36

1 ⁄ 18

01

1 ⁄ 36

1 ⁄ 36

0

1 ⁄ 18

11

1 ⁄ 36

1 ⁄ 36

0

1 ⁄ 18

21

0

0

7 ⁄ 18

7 ⁄ 18

p(y)

4 ⁄ 9

1 ⁄ 9

4 ⁄ 9

(8)/(36) + (7)/(9) = 1 (7)/(18) + (2)/(36) = (4)/(9)

N[7 ⁄ 18] = 0.388889

I(X1X2;Y) = H(Y) − H(Y|X1X2)

H(Y) = N⎡⎣(8)/(9)Log[2, 9 ⁄ 4] + (1)/(9)Log[2, 9]⎤⎦ = 1.39215

H(Y|X1X2 = 00) = H(Y|X1X2 = 21) = (1⋅log1) = 0

H(Y|X1X2 = 10) = p(Y = 1|(X1X2) = 10)log(p(Y = 1|(X1X2) = 10)) + p(Y = 1|(X1X2) = 10)log(p(Y = 1|(X1X2) = 10))

= (1)/(2)log22 + (1)/(2)log22 = 1

H(Y|X1X2 = 20) = H(Y|X1X2 = 01) = H(Y|X1X2 = 11) = log22 = 1

H(Y|X1X2) = ⎲⎳x1x2yp(x1x2)H(y|x1x2) = 2⋅(7)/(8)⋅0 + (4)/(18)⋅1 = 2 ⁄ 9

N[2 ⁄ 9] = 0.222222

I(X1X2;Y) = H(Y) − H(Y|X1X2) = 1.39215 − 0.222222 = 1.16993

N[Log[2, 9 ⁄ 4]] = 1.16993

(8)/(9)log2⎛⎝(9)/(4)⎞⎠ + (1)/(9)log2(9) − (2)/(9) = (8)/(9)log2(9) + (1)/(9)log2(9) − (8)/(9)log2(4) − (2)/(9) = (8)/(9)log2(9) + (1)/(9)log2(9) − (16)/(9) − (2)/(9) = log(9) − (18)/(9) = log(9) − 2 = log(9) − log2(4) = \mathchoicelog2(9 ⁄ 4)log2(9 ⁄ 4)log2(9 ⁄ 4)log2(9 ⁄ 4)

\strikeout off\uuline off\uwave off

x2 = 0

x2 = 1

\overset

x1 = 0

x2 = 1

x3 = 2

⎡⎢⎣

0.35431

0.072845

0.072845

0.072845

0.072845

0.35431

⎤⎥⎦

y|x1x2

y0

y1

y2

00

1

0

0

10

0

0.5

0.5

20

0

0.5

0.5

01

0.5

0.5

0

11

0.5

0.5

0

21

0

0

1

y|x1x2

y0

y1

y2

p(x1x2)

00

0.35431

0

0

0.35431

10

0

0.0364225

0.0364225

0.072845

20

0

0.0364225

0.0364225

0.072845

01

0.0364225

0.0364225

0

0.072845

11

0.0364225

0.0364225

0

0.072845

21

0

0

0.35431

0.35431

p(y)

0.427155

0.14569

0.427155

0.35431 + 2⋅0.0364225 = 0.427155

0.0364225⋅4 = 0.14569

N[7 ⁄ 18] = 0.388889

I(X1X2;Y) = H(Y) − H(Y|X1X2)

H(Y) = N⎡⎣2⋅0.427155⋅Log⎡⎣2, (1)/(0.427155)⎤⎦ + 0.14569⋅Log⎡⎣2, (1)/(0.14569)⎤⎦⎤⎦ = 1.45326

H(Y|X1X2 = 00) = H(Y|X1X2 = 21) = (1⋅log1) = 0

H(Y|X1X2 = 10) = p(Y = 1|(X1X2) = 10)log(p(Y = 1|(X1X2) = 10)) + p(Y = 1|(X1X2) = 10)log(p(Y = 1|(X1X2) = 10))

= (1)/(2)log22 + (1)/(2)log22 = 1

H(Y|X1X2 = 20) = H(Y|X1X2 = 01) = H(Y|X1X2 = 11) = log22 = 1

H(Y|X1X2) = ⎲⎳x1x2yp(x1x2)H(y|x1x2) = 2⋅0.35431⋅0 + 4⋅0.072845⋅1

4⋅0.072845⋅1 = 0.29138

I(X1X2;Y) = H(Y) − H(Y|X1X2) = 1.45326 − 0.29138 = 1.16188

Фала богу!!! Немам поим каде сум грешел до сега!

Барање на отпимална дистрибуција

——————————————————————————–——————————

y|x1x2

y0

y1

y2

00

1

0

0

10

0

0.5

0.5

20

0

0.5

0.5

01

0.5

0.5

0

11

0.5

0.5

0

21

0

0

1

y|x1x2

y0

y1

y2

p(x1x2)

00

a

0

0

a

10

0

0.5b

0.5b

b

20

0

0.5c

0.5c

c

01

0.5d

0.5d

0

d

11

0.5e

0.5e

0

e

21

0

0

f

f

p(y)

a + 0.5(d + e)

0.5(b + c + d + e)

f + 0.5(b + c)

\strikeout off\uuline off\uwave offH(Y|X1, X2) = ∑x1, x2p(x1, x2)⋅H(Y|X2 = x2, X1 = x1) = p(0, 0)H(Y|00) + p(10)H(Y|10) + p(20)H(Y|20) + p(01)H(Y|01) + p(11)H(Y|11) + p(2, 1)H(Y|21)

= p(00)⋅0 + \mathchoicep(10) + p(20) + p(01) + p(11)p(10) + p(20) + p(01) + p(11)p(10) + p(20) + p(01) + p(11)p(10) + p(20) + p(01) + p(11) + p(21)⋅0 го имав p(11) пропуштено!!!

p(Y = 0) = \mathchoicep(00) + (p(01) + p(11))/(2);p(00) + (p(01) + p(11))/(2);p(00) + (p(01) + p(11))/(2);p(00) + (p(01) + p(11))/(2); = a + (d + e)/(2)

p(Y = 1) = \mathchoice(p(10) + p(20))/(2) + (p(01) + p(11))/(2)(p(10) + p(20))/(2) + (p(01) + p(11))/(2)(p(10) + p(20))/(2) + (p(01) + p(11))/(2)(p(10) + p(20))/(2) + (p(01) + p(11))/(2) = (b + c)/(2) + (d + e)/(2); p(Y = 2) = \mathchoice(p(10) + p(20))/(2) + p(21)(p(10) + p(20))/(2) + p(21)(p(10) + p(20))/(2) + p(21)(p(10) + p(20))/(2) + p(21) = (b + c)/(2) + f

H(Y) = − ⎛⎝p(00) + (p(01) + p(11))/(2)⎞⎠⋅log2⎛⎝p(00) + (p(01) + p(11))/(2)⎞⎠ − ⎛⎝(p(10) + p(20))/(2) + (p(01) + p(11))/(2)⎞⎠⋅log2⎛⎝(p(10) + p(20))/(2) + (p(01) + p(11))/(2)⎞⎠ − ⎛⎝(p(10) + p(20))/(2) + p(21)⎞⎠⋅log2⎛⎝(p(10) + p(20))/(2) + p(21)⎞⎠

p(00) + p(10) + p(20) + p(01) + p(11) + p(21) = 1 p(00) = 1 − p(10) + p(20) + p(01) + p(11) + p(21)

\strikeout off\uuline off\uwave off\mathchoicep(00) = a; p(10) = b; p(20) = c; p(01) = d; p(11) = e; p(21) = f;p(00) = a; p(10) = b; p(20) = c; p(01) = d; p(11) = e; p(21) = f;p(00) = a; p(10) = b; p(20) = c; p(01) = d; p(11) = e; p(21) = f;p(00) = a; p(10) = b; p(20) = c; p(01) = d; p(11) = e; p(21) = f;

\strikeout off\uuline off\uwave offa + b + c + d + e + f = 1

\strikeout off\uuline off\uwave offH(Y) = − ⎛⎝a + (d + e)/(2)⎞⎠⋅log2⎛⎝a + (d + e)/(2)⎞⎠ − ⎛⎝(b + c)/(2) + (d + e)/(2)⎞⎠⋅log2⎛⎝(b + c)/(2) + (d + e)/(2)⎞⎠ − ⎛⎝(b + c)/(2) + f⎞⎠⋅log2⎛⎝(b + c)/(2) + f⎞⎠

\mathchoiceI(X1, X2;Y) = H(Y) − H(Y|X1X2) = − ⎛⎝a + (d + e)/(2)⎞⎠⋅log2⎛⎝a + (d + e)/(2)⎞⎠ − ⎛⎝(b + c)/(2) + (d + e)/(2)⎞⎠⋅log2⎛⎝(b + c)/(2) + (d + e)/(2)⎞⎠ − ⎛⎝(b + c)/(2) + f⎞⎠⋅log2⎛⎝(b + c)/(2) + f⎞⎠ − b − c − d − eI(X1, X2;Y) = H(Y) − H(Y|X1X2) = − ⎛⎝a + (d + e)/(2)⎞⎠⋅log2⎛⎝a + (d + e)/(2)⎞⎠ − ⎛⎝(b + c)/(2) + (d + e)/(2)⎞⎠⋅log2⎛⎝(b + c)/(2) + (d + e)/(2)⎞⎠ − ⎛⎝(b + c)/(2) + f⎞⎠⋅log2⎛⎝(b + c)/(2) + f⎞⎠ − b − c − d − eI(X1, X2;Y) = H(Y) − H(Y|X1X2) = − ⎛⎝a + (d + e)/(2)⎞⎠⋅log2⎛⎝a + (d + e)/(2)⎞⎠ − ⎛⎝(b + c)/(2) + (d + e)/(2)⎞⎠⋅log2⎛⎝(b + c)/(2) + (d + e)/(2)⎞⎠ − ⎛⎝(b + c)/(2) + f⎞⎠⋅log2⎛⎝(b + c)/(2) + f⎞⎠ − b − c − d − eI(X1, X2;Y) = H(Y) − H(Y|X1X2) = − ⎛⎝a + (d + e)/(2)⎞⎠⋅log2⎛⎝a + (d + e)/(2)⎞⎠ − ⎛⎝(b + c)/(2) + (d + e)/(2)⎞⎠⋅log2⎛⎝(b + c)/(2) + (d + e)/(2)⎞⎠ − ⎛⎝(b + c)/(2) + f⎞⎠⋅log2⎛⎝(b + c)/(2) + f⎞⎠ − b − c − d − e

Да симплифицирам:

y|x1x2

y0

y1

y2

00

1

0

0

10

0

0.5

0.5

20

0

0.5

0.5

01

0.5

0.5

0

11

0.5

0.5

0

21

0

0

1

y|x1x2

y0

y1

y2

p(x1x2)

00

a

0

0

a

10

0

0.5b

0.5b

b

20

0

0.5b

0.5b

b

01

0.5b

0.5b

0

b

11

0.5b

0.5b

0

b

21

0

0

a

a

p(y)

a + b

2b

a + b

I(X1, X2;Y) = H(Y) − H(Y|X1X2) = − 2(a + b)⋅log2(a + b) − (2b)⋅log2(2b) − 4b

2a + 4b = 1 → a = (1 − 4⋅b)/(2)

− 2⎛⎝(1 − 4⋅b)/(2) + b⎞⎠⋅log2⎛⎝(1 − 4⋅b)/(2) + b⎞⎠ − (2b)⋅log2(2b) − 4b = − 2⎛⎝(1 − 4⋅b + 2b)/(2)⎞⎠⋅log2⎛⎝(1 − 4⋅b + 2b)/(2)⎞⎠ − (2b)⋅log2(2b) − 4b = − 2⎛⎝(1 − 2⋅b)/(2)⎞⎠⋅log2⎛⎝(1 − 2⋅b)/(2)⎞⎠ − (2b)⋅log2(2b) − 4b

I(X1, X2;Y) = − (1 − 2⋅b)⋅log2⎛⎝(1 − 2⋅b)/(2)⎞⎠ − (2b)⋅log2(2b) − 4b

Од Maple добив:

(d)/(dp)(I(X1X2;Y)) = 0 → b = (1)/(8) ; a = (1 − 4⋅1 ⁄ 18)/(2) = (7)/(18)

maxpI(X1X2;Y) = \mathchoice1.16991.16991.16991.1699 bits

y|x1x2

y0

y1

y2

p(x1x2)

00

7 ⁄ 18

0

0

7 ⁄ 18

10

0

1 ⁄ 36

1 ⁄ 36

1 ⁄ 18

20

0

1 ⁄ 36

1 ⁄ 36

1 ⁄ 18

01

1 ⁄ 36

1 ⁄ 36

0

1 ⁄ 18

11

1 ⁄ 36

1 ⁄ 36

0

1 ⁄ 18

21

0

0

7 ⁄ 18

7 ⁄ 18

p(y)

4 ⁄ 9

1 ⁄ 9

4 ⁄ 9

Да ова е решението што го дава Cover за RUG = maxp(x1x2)I(X1X2;Y) докажано!!!

Пресметка на вториот член од cutset theorem.

p(y|x1x2) p(x1x2y)

y|x1x2

y0

y1

y2

00

1

0

0

10

0

0.5

0.5

20

0

0.5

0.5

01

0.5

0.5

0

11

0.5

0.5

0

21

0

0

1

y|x1x2

y0

y1

y2

p(x1x2)

p(x2)

00

7 ⁄ 18

0

0

7 ⁄ 18

x

10

0

1 ⁄ 36

1 ⁄ 36

1 ⁄ 18

y

20

0

1 ⁄ 36

1 ⁄ 36

1 ⁄ 18

y

01

1 ⁄ 36

1 ⁄ 36

0

1 ⁄ 18

y

11

1 ⁄ 36

1 ⁄ 36

0

1 ⁄ 18

y

21

0

0

7 ⁄ 18

7 ⁄ 18

x

p(y)

4 ⁄ 9

1 ⁄ 9

4 ⁄ 9

(p(x1x2))/(p(x2)) = p(x1|x2)

2x + 4y = 1 → x = (1 − 4y)/(2) → y = (1 − 2x)/(4)

I(X1;Y1|X2) = H(Y1|X2) − \cancelto0H(Y1|X1X2) = H(X1|X2) − \cancelto0H(X1|Y1X2) = H(Y1|X2) = H(X1|X2)?

H(X1|X2) = − ∑x1x2p(x1x2)logp(x1|x2) = − (4)/(18)log(1)/(18y) − (14)/(18)log⎛⎝(7)/(18x)⎞⎠ = (4)/(18)log18y + (14)/(18)log⎛⎝(18x)/(7)⎞⎠ = (4)/(18)log18⋅(1 − 2x)/(4) + (14)/(18)log⎛⎝(18x)/(7)⎞⎠

(d)/(dp)(I(X2;Y1|X2)) = 0 → x = 0.3181181 ;

(1 − 2⋅0.3181182)/(4) = 0.0909409

2⋅0.318181 + 4⋅0.0909409 = 1.00013

I(X2;Y1|X2) = 0.0453

——————————————————————————–——————————————————–

y|x1x2

y0

y1

y2

p(x1x2)

p(x2)

00

7 ⁄ 18

0

0

7 ⁄ 18

x

10

0

1 ⁄ 36

1 ⁄ 36

1 ⁄ 18

x

20

0

1 ⁄ 36

1 ⁄ 36

1 ⁄ 18

x

01

1 ⁄ 36

1 ⁄ 36

0

1 ⁄ 18

y

11

1 ⁄ 36

1 ⁄ 36

0

1 ⁄ 18

y

21

0

0

7 ⁄ 18

7 ⁄ 18

y

p(y)

4 ⁄ 9

1 ⁄ 9

4 ⁄ 9

x + y = 1 → y = 1 − x

H(X1|X2) = − ∑x1x2p(x1x2)logp(x1|x2) = − (2)/(18)log(1)/(18x) − (7)/(18)log⎛⎝(7)/(18x)⎞⎠ − (2)/(18)log(1)/(18y) − (7)/(18)log⎛⎝(7)/(18y)⎞⎠ = (2)/(18)log18x + (7)/(18)log⎛⎝(18x)/(7)⎞⎠ + (2)/(18)log18y + (7)/(18)log⎛⎝(18y)/(7)⎞⎠

= (2)/(18)log(182xy) + (7)/(18)log⎛⎝(182xy)/(72)⎞⎠ = (2)/(18)log(182x⋅(1 − x)) + (7)/(18)log⎛⎝(182)/(72)x⋅(1 − x)⎞⎠ = (2)/(18)log(324⋅(x − x2)) + (7)/(18)log⎛⎝(324)/(49)⋅(x − x2)⎞⎠

(182)/(72) = (324)/(49)

Со користење на Maple и изводи:

H(X1|X2) = 0.986

Нема потреба од изводи зошто се е дефинирано:

p(x1x2) p(x1|x2)

x1|x2

0

1

p(x1)

0

7 ⁄ 18

1 ⁄ 18

8 ⁄ 18

1

1 ⁄ 18

1 ⁄ 18

2 ⁄ 18

2

1 ⁄ 18

7 ⁄ 18

8 ⁄ 18

p(x2)

1 ⁄ 2

1 ⁄ 2

x1|x2

0

1

0

14 ⁄ 18

2 ⁄ 18

1

2 ⁄ 18

2 ⁄ 18

2

2 ⁄ 18

14 ⁄ 18

H(X1|X2) = (14)/(18)log2⎛⎝(18)/(14)⎞⎠ + (4)/(18)log2⎛⎝(18)/(2)⎞⎠

N⎡⎣(14)/(18)⋅Log⎡⎣2, (18)/(14)⎤⎦ + (4)/(18)⋅Log⎡⎣2, (18)/(2)⎤⎦⎤⎦ = 0.986427

Веројатно треба со башка вредности за p(x1x2):

p(x1x2) p(x1|x2)

x1|x2

0

1

p(x1)

0

a

b

a + b

1

b

b

2b

2

b

a

a + b

p(x2)

a + 2b

a + 2b

p(x2)

1 ⁄ 2

1 ⁄ 2

x1|x2

0

1

0

2a

2b

1

2b

2b

2

2b

2a

2a + 4b = 1 → b = (1 − 2a)/(4) → a + 2b = (1)/(2)

H(X1|X2) = − ∑x1x2p(x1x2)logp(x1|x2) = 2⋅a⋅log(1)/(2a) + 4⋅b⋅log(1)/(2b) = 2⋅a⋅log(1)/(2a) + 4⋅(1 − 2a)/(4)⋅log(4)/(2 − 4a)

Maple

a = 1 ⁄ 6

b = (1 − 2⋅1 ⁄ 6)/(4) = (1)/(6)

I(X1;Y1|X2) = H(X1|X2) = 1.585

Уште еднаш пресметка на вториот член од Cutset Theorem

y|x1x2

y0

y1

y2

00

1

0

0

10

0

0.5

0.5

20

0

0.5

0.5

01

0.5

0.5

0

11

0.5

0.5

0

21

0

0

1

y|x1x2

y0

y1

y2

p(x1x2)

00

a

0

0

a

10

0

0.5b

0.5b

b

20

0

0.5b

0.5b

b

01

0.5b

0.5b

0

b

11

0.5b

0.5b

0

b

21

0

0

a

a

p(y)

a + b

2b

a + b

I(X1, X2;Y) = H(Y) − H(Y|X1X2) = − 2(a + b)⋅log2(a + b) − (2b)⋅log2(2b) − 4b

2a + 4b = 1 → a = (1 − 4⋅b)/(2)

− 2⎛⎝(1 − 4⋅b)/(2) + b⎞⎠⋅log2⎛⎝(1 − 4⋅b)/(2) + b⎞⎠ − (2b)⋅log2(2b) − 4b = − 2⎛⎝(1 − 4⋅b + 2b)/(2)⎞⎠⋅log2⎛⎝(1 − 4⋅b + 2b)/(2)⎞⎠ − (2b)⋅log2(2b) − 4b = − 2⎛⎝(1 − 2⋅b)/(2)⎞⎠⋅log2⎛⎝(1 − 2⋅b)/(2)⎞⎠ − (2b)⋅log2(2b) − 4b

I(X1, X2;Y) = − (1 − 2⋅b)⋅log2⎛⎝(1 − 2⋅b)/(2)⎞⎠ − (2b)⋅log2(2b) − 4b

Од Maple добив:

(d)/(db)(I(X1X2;Y)) = 0 → b = (1)/(1g(8) ; a = (1 − 4⋅1 ⁄ 18)/(2) = (7)/(18)

maxpI(X1X2;Y) = \mathchoice1.16991.16991.16991.1699 bits

y|x1x2

y0

y1

y2

p(x1x2)

00

7 ⁄ 18

0

0

7 ⁄ 18

10

0

1 ⁄ 36

1 ⁄ 36

1 ⁄ 18

20

0

1 ⁄ 36

1 ⁄ 36

1 ⁄ 18

01

1 ⁄ 36

1 ⁄ 36

0

1 ⁄ 18

11

1 ⁄ 36

1 ⁄ 36

0

1 ⁄ 18

21

0

0

7 ⁄ 18

7 ⁄ 18

p(y)

4 ⁄ 9

1 ⁄ 9

4 ⁄ 9

Да ова е решението што го дава Cover за RUG = maxp(x1x2)I(X1X2;Y) докажано!!!

За време на читање на докторатот

I(X1;Y1|X2) = H(Y1|X2) − H(Y1|X1X2) = H(Y1) = H(X1) = − p1log(p1) − p2log(p2) − p3log(p3) = − 2⋅(a + b)log(a + b) − 2b⋅log(2b)

p(x1x2)

x1 ⁄ x2

0

1

p(x1)

0

a

b

a + b

1

b

b

2b

2

b

a

a + b

p(x2)

a + 2b

a + 2b

− 2⋅(a + b)log(a + b) − 2b⋅log(2b) = − (1 − 2⋅b)⋅log2⎛⎝(1 − 2⋅b)/(2)⎞⎠ − (2b)⋅log2(2b) − 4b

a = (1 − 4⋅b)/(2)

− 2⋅((1 − 4⋅b)/(2) + b)log⎛⎝(1 − 4⋅b)/(2) + b⎞⎠ − 2b⋅log(2b) = − (1 − 2⋅b)⋅log2⎛⎝(1 − 2⋅b)/(2)⎞⎠ − (2b)⋅log2(2b) − 4b

(1 − 4⋅b)/(2) + b = (1 − 4⋅b + 2b)/(2) = (1 − 2⋅b)/(2)

− 2⋅((1 − 2⋅b)/(2))log⎛⎝(1 − 2⋅b)/(2)⎞⎠ − 2b⋅log(2b) = − (1 − 2⋅b)⋅log2⎛⎝(1 − 2⋅b)/(2)⎞⎠ − (2b)⋅log2(2b) − 4b → b = 0

Значи двете функции се сечат за b = 0.

(d)/(db)I(X1;Y1|X2) = 0 → b = (1)/(6) → a = (1)/(6) → I(X1;Y1|X2) = 1.585

Дефинитивно I(X1X2;Y) ≥ I(X1;Y1|X2)

Пак истото се добива.

Последно што пробав во Maple да барам извод по a после по b и да ги решам равенките после. Не се добиваат добри резултати.

2 Achievability of C in Theorems 1,2,3

The achievability of

\mathchoiceC0 = supp(x1)maxx2I(X1;Y|x2)C0 = supp(x1)maxx2I(X1;Y|x2)C0 = supp(x1)maxx2I(X1;Y|x2)C0 = supp(x1)maxx2I(X1;Y|x2) in Theorem 2 follows immediately form Shannon’s basic result

[5] if we set

X2i = x2, i = 1, 2, ... . Also the achievability of

CFB in Theorem 3 is a simple corollary of Theorem 1,

when it is realized that the feedback relay channel is a degraded relay channel. The converses will be delayed until Section III.

We are left only with the proof of Theorem 1 - the achievability of C for the degraded relay channel. We begin with a brief outline of the proof. We consider B blocks , each of n symbols. A sequence of B − 1 messages wi ∈ [1, 2nR], i = 1, 2, ..., B − 1 will be sent over the channel in nB transmissions. (Note that as B → ∞, for fixed n, the rate \mathchoiceR(B − 1) ⁄ BR(B − 1) ⁄ BR(B − 1) ⁄ BR(B − 1) ⁄ B is arbitrary close to R.)

Многу е важно да се сконта дека праќа B − 1 пораки во B блокови. Ако претпоставиш дека еден блок се прaќа во една употреба на каналот (на пример n паралелни бинарни канали=еден блок) тогаш доколку нема грешки во преносот во приемникот ќе препознаеш B-1 блокови за B употреби на каналот т.е капацитетот ќе биде (B − 1) ⁄ B. Секогаш потсеќај се на noisy typewriter. Што и да правиш тој пола од буквите ги греши (шум) па на излез препознаваш само 13 од вкупно 26. Затоа капацитетот во бити беше log2(13) или во букви 0.5. (после EIT) Мислам дека едната порака не е стигната во приемникот затоа што сеуште се процесира во релето.

In each n-block b = 1, 2, ..., B we shall use the same doubly indexed set of codewords

We shall also need a partition

The partition S will allow us to send information to the receiver using the random binning proof of the source coding theorem of Seplian and Wolf [7].

The choice of C and S achieving C will be random, but the description of the random code and partition will be delayed until the use of the code is described. For the time being, the code should be assumed fixed.

We pick up the story in block i − 1. First, let us assume that the receiver y knows wi − 2 and si − 1 at the end of block i − 1. Let us also assume that the relay receiver knows wi − 1. We shall show that a good choice of {C, S} will allow the receiver to know (wi − 1, si) and the relay receiver to know wi at the end of block i (with probability of error ≤ ϵ). Thus the information state (wi − 1, si) of the receiver propagates forward, and a recursive calculation of the probability of error can be made, yielding probability of error ≤ Bϵ.

We summarize the use of the code as follows.

Transmission in block i − 1: x1(wi − 1|si − 1), x2(si − 1).

Received signals in block i − 1: Y1(i − 1), Y(i − 1) .

Computation at the end of block i − 1: the relay receiver

Y1(i − 1) is assumed to know

wi − 1.

The integer wi − 1 falls in some cell of the partition S. Call the index of this cell si. Then the relay is prepared to send

x2(si) in block

i. Transmitter

x1 also computes

si from

wi − 1.

Thus si will furnish the basis for cooperative resolution of the y uncertainty about wi − 1.

Remark: In the first block, the relay has no information s1 necessary for cooperation. However any good sequence x2 will allow the block Markov scheme to get started, and the slight loss in rate in the first block becomes asymptotically negligible as the number of blocks B → ∞.

Transmission in block i: x1(wi|si), x2(si).

Received signals in block i: y1(i), y(i).

Computation at end of block i: 1) The relay calculates wi from y1(i). 2) The unique jointly typical x2(si) with the received y(i) is calculated. Thus the si is known to the receiver. 3) The receiver calculates his ambiguity set J(\mathnormaly(i − 1)) i.e., the set of all wi − 1 such that (x1(wi − 1|si − 1), x2(si − 1), y(i − 1)) are jointly ϵ-typical.

The receiver then intersects \strikeout off\uuline off\uwave offJ(\mathnormaly(i − 1)) and the cell Ssi. By controlling the size of J, we shall (1 − ϵ)-guarantee that this intersection has one and only one member- the correct value wi − 1. We conclude that the receiver y(i) has correctly computed (wi − 1, si) from (wi − 2, si − 1)

\strikeout off\uuline off\uwave offЗошто му треба (wi − 2, si − 1)???

and (y(i − 1), y(i)).

Proof of achievability of C in Theorem 1:

We shall use the code as outlined previously in this section. It is important to note that, although Theorem 1 treats degraded relay channels, the proof of achievability of C and all constructions in this section apply without change to arbitrary relay channels. It is only in the converse that degradedness is needed to establish that the achievable rate C is indeed the capacity. the converse is proved in Section III.

We shall now describe the random codes. Fix a probability mass function p(x1, x2).

First generate at random M0 = 2nR0 independent identically distributed n-sequences in X\mathnormaln\mathnormal2 , each drawn according to p(x2) = ∏ni = 1p(x2i). Index them as x2(s), s ∈ [1, 2nR0]. For each x2(s),

Ова е многу важно! Сака да каже дека за секоj bin s генерираш посебна кодна подкнига од 2nR елементи. Вкупната кодна книга содржи 2nR0 x2nR1 елементи.

generate M = 2nR conditionally independent n-sequences x1(w|s), w ∈ [1, 2nR] drawn according to p(x1|x2(s)) = ∏ni = 1p(x1i|x2i(s)). This defines a random code book C\mathnormal = {x1(w|s), x2}.

Reveal the assignments to both the encoder and the decoder.

Тhe random partition S\mathnormal = {S1, S2..., S2nR0} of {1, 2, ..., 2nR} is defined as follows. Let each integer w ∈ [1, 2nR] be assigned independently, according to a uniform distribution over the indices s = 1, 2, ..., 2nR0, to cell Ss. We shall use the functional notation s(w) to denote the index of the cell in which w lies.

Reveal the s(w) assignments to Relay and Destination.

We recall some basic results concerning typical sequences. Let

{X(1), X(2)..., X(k)} denote a finite collection of discrete random variables with some fixed joint distribution

p(x(1), x(2), ..., x(k)), for

(x(1), x(2), ..., x(k)) ∈ X(1) xX(2) x...xX\mathnormal(k).

Let S denote an ordered subset of these random variables, and consider n independent copies of S. Thus

Let \mathchoiceN(s;s)N(s;s)N(s;s)N(s;s) be the number of indices i ∈ {1, 2, ..., n} such that Si = s. By the law of large numbers, for any subset S of random variables and for all s ∈ S,

N(s;s) го замислувам во експеримент на фрлање на коцка како број на пати коцката паѓа на одредена бројка. n го замислувам е колку пати е направен експериментот (фрлање на коцка). За подолу истото го замислуваш за случаен процес кој се состои од секвенца од k коцки.

18.06.14

Внимавај не се работи за секвенца од случајни променливи како гореш што замислувам туку се работи за секвенца од случајни процеси.

Последното замислување е следново. Множеството s е секвенца од секвенци од кое вадиш примерок од n обзервации. (1)/(n)N(s, s) сака да каже дека

количникот од бројот на појавувања на секвенцата s во примерокот од обзервации и вкупната должина на примерокот, стреми кон веројатноста на појавување на секвенцата s.

Also

Convergence in

20↑ and

21↑ takes place simultaneously with probability one for all

2k subsets

S ⊆ {X(1), X(2), ..., X(k)}

Consider the following definition of joint typicality.

The set Aϵ of ϵ-typical n-sequences (x(1), x(2), ..., x(k)) is defined by:

Aϵ(X(1), X(2)..., X(k)) = Aϵ = ⎧⎩(x(1), x(2), ..., x(k)):||(1)/(n)N(x(1), x(2), ..., x(k);x(1), x(2), ..., x(k)) − p(x(1), x(2), ..., x(k))|| <

< ϵ||X(1) xX(2) x...xX\mathnormal(k)|| for (x(1), x(2), ..., x(k)) ∈ X(1) xX(2) x...xX\mathnormal(k)}

where ||ℛ|| is cardinality of the set ℛ.

Remark: The definition of typicality, sometimes called strong typicality

Се потсетив на дефиницијата на strong typicality во EIT Chapter 15.8.

can be found in work of Wolfowitz

[13] and Berger

[12]. Strong typicality implies (week) typicality used in

[8],

[14]. The distinction is not needed until the proof of Theorem 6 in Section VI of this paper.

The following is a version of the asymptotic equipartition property involving simultaneous constraints [12],

[14].

For any ϵ > 0 , there exist and integer n such that Aϵ(S) satisfies

i) Pr{Aϵ(S)} ≥ 1 − ϵ, for all S ⊆ {X(1), X(2)..., X(k)}

ii) s ∈ Aϵ(S) ⇒ || − (1)/(n)logp(s) − H(s)|| ≤ ϵ

We shall need to know the probability that conditionally independent sequences are jointly typical. Let S1, S2 and S3 be three subsets of X(1), X(2)..., X(k). Let S’1, S’2 be conditionally independent given S3 with the marginals

p(s1|s3) = ∑s2p(s1, s2, s3) ⁄ p(s3)

p(s2|s3) = ∑s1p(s1, s2, s3) ⁄ p(s3)

∑s2(p(s1, s2, s3))/(p(s3)) = ∑s2p(s1, s2, s3|s3) = ∑s2p(s1, s3|s3)⋅p(s2, s3|s3) = p(s1, s3|s3)∑s2p(s2, s3|s3) = p(s1, s3)⋅1;

The following lemma is provided in

[14].

Let (S1, S2, S3) ~ ∏ni = 1p(s1i, s2i, s3i) and \strikeout off\uuline off\uwave off(S’1, S’2, S’3) ~ ∏ni = 1p(s1i|s3i)p(s2i|s3i)p(s3i). Then, for n such that P{Aϵ(S1, S2, S3)} > 1 − ϵ,

(1 − ϵ)2 − n⋅(I(S1;S2|S3 + 7⋅ϵ) ≤ P{(S’1, S’2, S3) ∈ Aϵ(S1, S2, S3)} ≤ 2 − n⋅(I(S1;S2|S3) − 7ϵ)

Ова лема мислам ја дава веројатноста некоја друга секвенца да припаѓа на типичното множество. (потсeти се на EIT Theorem 7.6.1 - Joint AEP)

18.06.2014

Да ова е всушност Теоремата 15.2.3 од EIT

Доказот од EIT е:

P{(S1’, S2’, S3’) ∈ A(n)ϵ} = ⎲⎳(s1, s2, s3) ∈ A(n)ϵp(s3)p(s1|s3)p(s2|s3)≐|A(n)ϵ(S1S2S3)|2 − n(H(S3)±2ϵ)2 − n(H(S1|S3)±2ϵ)2 − n(H(S2|S3)±2ϵ)

≐2n(H(S1S2S3)±ϵ) − n(H(S3)±ϵ) − n(H(S1|S3)±2ϵ) − n(H(S2|S3)±2ϵ)≐2 − n(I(S1;S2|S3)±6ϵ)

n(H(S1S2S3)±ϵ) − n(H(S3)±ϵ) − n(H(S1|S3)±2ϵ) − n(H(S2|S3)±2ϵ) =

n[H(S1S2S3)±ϵ − H(S3)∓ϵ − H(S1|S3)∓2ϵ − H(S2|S3)∓2ϵ]

n[H(S1S2S3) − H(S3) − H(S1|S3) − H(S2|S3)] = (*)

I(S1;S2|S3) = H(S1|S3) − H(S1|S2S3)

H(S1S2S3) = H(S3) + H(S2|S3) + H(S1|S2S3)

(*) = n[\cancelH(S3) + \cancelH(S2|S3) + H(S1|S2S3) − \cancelH(S3) − H(S1|S3) − \cancelH(S2|S3)] = − n⋅I(S1;S2|S3)

Ако се земат во предвид проментие на знаците во

± тогаш:

n[H(S1S2S3)±ϵ − H(S3)∓ϵ − H(S1|S3)∓2ϵ − H(S2|S3)∓2ϵ] = − n⋅(I(S1;S2|S3)±4ϵ)

Ако не се земат во предвид промените на знаците во

± тогаш:

n[H(S1S2S3)±ϵ − H(S3)±ϵ − H(S1|S3)±2ϵ − H(S2|S3)±2ϵ] = − n⋅(I(S1;S2|S3)±6ϵ)

Повторување од EIT

recall AEP from EIT textbook let: X = Xn1, Y = Yn1

2 − n(H(X, Y) + ϵ) ≤ p(X, Y) ≤ 2 − n(H(X, Y) − ϵ)

1) Pr(p(X, Y) ∈ A(n)ϵ) ≥ 1 − ϵ

2) (1 − ϵ)⋅2n(H(X, Y) − ϵ) ≤ |A(n)ϵ| ≤ 2n(H(X, Y) + ϵ)

3) (1 − ϵ)2 − n(I(X, Y) + 3ϵ) ≤ Pr((\widetildeX, \widetildeY) ∈ A(n)ϵ) ≤ 2 − n(I(X, Y) − 3ϵ)

1 = ⎲⎳x, yp(X, Y) ≥ ⎲⎳x, y2 − n(H(X, Y) + ϵ) ≥ ⎲⎳(x, y) ∈ A(n)ϵ2 − n(H(X, Y) + ϵ) = |A(n)ϵ|⋅2 − n(H(X, Y) + ϵ) ⇒ |A(n)ϵ| ≤ 2n(H(X, Y) + ϵ)

1 − ϵ ≤ ⎲⎳(X, Y) ∈ A(n)ϵp(X, Y) ≤ ⎲⎳(X, Y) ∈ A(n)ϵ2 − n(H(X, Y) − ϵ) = |A(n)ϵ|⋅2 − n(H(X, Y) − ϵ) ⇒ |A(n)ϵ| ≥ (1 − ϵ)⋅2n(H(X, Y) − ϵ)

\strikeout off\uuline off\uwave off

Pr((\widetildeX, \widetildeY) ∈ A(n)ϵ) = ⎲⎳(\widetildeX, \widetildeY) ∈ A(n)ϵp(\widetildeX, \widetildeY) = |A(n)ϵ|p(\widetildeX)⋅p(\widetildeY) ≤ 2n(H(X, Y) + ϵ)⋅2 − n(H(\widetildeX) − ϵ)⋅2 − n(H(\widetildeY) − ϵ) = 2n⋅H(X, Y) + nϵ − nH(\widetildeX) + nϵ − nH(\widetildeY) + nϵ

= 2 − n⋅( − H(X, Y) + H(\widetildeX) + H(\widetildeY)) + 3nϵ = 2 − n⋅( − H(\widetildeY|\widetildeX) + H(\widetildeY)) + 3nϵ = 2 − n(I(\widetildeX:\widetildeY) − 3ϵ) ⇒ Pr((\widetildeX, \widetildeY) ∈ A(n)ϵ) ≤ 2 − n(I(\widetildeX;\widetildeY) − 3ϵ)

Pr((\widetildeX, \widetildeY) ∈ A(n)ϵ) = ⎲⎳(\widetildeX, \widetildeY) ∈ A(n)ϵp(\widetildeX, \widetildeY) = ⎲⎳(\widetildeX, \widetildeY) ∈ A(n)ϵp(\widetildeX)⋅p(\widetildeY) = |A(n)ϵ|p(\widetildeX)⋅p(\widetildeY) ≥ (1 − ϵ)⋅2n(H(X, Y) − ϵ)⋅2 − n(H(\widetildeX) + ϵ)⋅2 − n(H(\widetildeY) + ϵ) =

(1 − ϵ)⋅2nH(X, Y) − n⋅ϵ − nH(\widetildeX) − n⋅ϵ − nH(\widetildeY) − n⋅ϵ = (1 − ϵ)⋅2 − n( − H(X, Y) + H(\widetildeX) + H(\widetildeY)) − 3n⋅ϵ = (1 − ϵ)⋅2 − n( − H(\widetildeY|\widetildeX) + H(\widetildeY)) − 3n⋅ϵ = (1 − ϵ)⋅2 − n(I(X;Y) + 3⋅ϵ)

⇒ Pr((\widetildeX, \widetildeY) ∈ A(n)ϵ) ≥ (1 − ϵ)⋅2 − n(I(X;Y) + 3⋅ϵ) i.e. (1 − ϵ)⋅2 − n(I(X;Y) + 3⋅ϵ) ≤ Pr((\widetildeX, \widetildeY) ∈ A(n)ϵ) ≤ 2 − n(I(\widetildeX;\widetildeY) − 3ϵ)

Let wi ∈ [1, 2nR] be the next index to be sent in block i, and assume that wi − 1 ∈ Ssi. The encoder then sends x1(wi|si). The relay has an estimate

ŵ̂i − 1 of the previous index

wi − 1 ∈ Ssi. (This will be made precise in the decoding section.)

Assume that ŵ̂i − 1 ∈ Sŝ̂i. Then the relay encoder sends the codeword x2(sî̂) in block i.

Ова е многу важно! Значи релето гледа во којa клетка припаѓа примениот сигнал и врз основ на индексот на таа клетка го праќа содветниот симбол x2 во наредниот блок.

i

1

2

3

4

...

i

...

b

X1

x1(w1|s1)

x1(w2|s2)

x1(w3|s3)

x1(w4|s4)

x1(wj|sj)

x1(wb|sb)

X2

0

x2(s2)

x2(s3)

x2(s4)

x2(sj)

x2(sb)

Y

y(1)

y(2)

y(3)

y(4)

y(j)

y(b)

We assume that at the end of block (i − 1) the receiver knows (w1, w2, ...wi − 2) and (s1, s2, ..., si − 1) and the relay knows (w1, w2, ...wi − 1) and consequently \mathchoice(s1, s2, ..., si)(s1, s2, ..., si)(s1, s2, ..., si)(s1, s2, ..., si).

The decoding procedures at the end of block i are as follows.

\mathchoice1.1.1.1. Knowing si, and upon receiving y1(i), the relay receiver estimates the message of the transmitter wî̂ = w if there exists a unique w such that (x1(w|si), x2(si), y1(i)) are jointly ϵ-typical. Using Lemma 2, it can be shown that \mathchoicewî̂ = wiwî̂ = wiwî̂ = wiwî̂ = wi with arbitrary small probability of error if

and n is sufficiently large.

Овој израз ја дефинира горната граница на брзината на пренос во каналот!!! Односно изразот R < I(X1, X2;Y).

\mathchoice2.2.2.2. The

receiver declares that

sî = s was sent if there exist one and only one

s such that

(x2(s), Y(i)) are jointly

ϵ -typical. From Lemma 2 we know that

si can be decoded with arbitrary small probability of error if

si can be decoded with arbitrarily small probability of error if si takes on less than 2nI(X2;Y) values , i.e. if

and n is sufficiently large.

\mathchoice3.3.3.3. Assuming that si is decoded successfully at the receiver, then ŵi − 1 = w is declared to be the index sent in bloc i − 1 if there is a unique \mathchoicew ∈ Ssi∩ℒ\mathnormal(y(i − 1))w ∈ Ssi∩ℒ\mathnormal(y(i − 1))w ∈ Ssi∩ℒ\mathnormal(y(i − 1))w ∈ Ssi∩ℒ\mathnormal(y(i − 1)). It will be shown that if n is sufficiently large and if

then \mathchoiceŵi − 1 = wi − 1ŵi − 1 = wi − 1ŵi − 1 = wi − 1ŵi − 1 = wi − 1 with arbitrary small probability of error.

Претпоставувам дека ℒ(y(i-1)) функцијата за декодирање (decoding function - g(...)) која го дава излезниот симбол во приемникот. Да ама излезот од оваа функција е множество. Подолу има формула за кардиналност на тоа множество. Веројатно го дава множеството на можни излезни симболи.

25.06.2014

Накратко сакам да ја опишам целата постапка како функционира релејниот систем и како се остварува кооперацијата.